Facebook (Kopec Rework)

As part of the Ethics of Content Labeling Project, we present a comprehensive overview of social media platform labeling strategies for moderating user-generated content. We examine how visual, textual, and user-interface elements have evolved as technology companies have attempted to inform users on misinformation, harmful content, and more.

Authors: Jessica Montgomery Polny, Graduate Researcher; Prof. John P. Wihbey, Ethics Institute/College of Arts, Media and Design

Last Updated: June 3, 2021

Facebook Inc. Overview

When it comes to content labeling and moderation, Facebook Inc. uses a metric of “harm” to fact-check and rate content containing misinformation, and implements the appropriate labels based on its criteria. When the Facebook platform first adopted fact-check labels in 2016, they were limited to text-based banners. Now, interstitials and click-through mechanisms on Facebook and Instagram provide a clearer indication of review and increased friction against user engagement with harmful misinformation.

Visible indicators of labeling have evolved steadily by design, while updates on policies regarding the implementation of labels for Facebook and Instagram have evolved as a reaction to real-world events. The misinformation shared around important events, most notably the 2020 U.S. Elections and COVID-19, was determined by Facebook Inc. to constitute a concerning level of harm.

The following analysis is based on open-source information about the platform. The analysis only relates to the nature and form of the moderation, and we do not here render judgments on several key issues, such as speed of labeling response (i.e., how many people see the content before it is labeled); the relative success or failure of automated detection and application (false negatives and false positives by learning algorithms); and actual efficacy with regard to users (change of user behavior and/or belief).

Facebook Platform Content Labeling

Facebook platform examples have been captured primarily as desktop screenshots, due to the diversity and multiplicity of content on the platform.

Facebook attempts to combat misinformation by removing and reducing the visibility of harmful content, as well as informing users with authoritative sources. A large part of the Facebook content assessment strategy relies on third-party fact-checkers. These fact-checking organizations are all signatories of the International Fact-Checking Network, a mandatory condition of collaborating with Facebook Inc. The platform’s Community Standards address the moderation of false news under the section “Integrity & Authenticity.” Platform guidelines for misinformation moderation were presented in a foundational Newsroom announcement from 2019, “Remove, Reduce, and Inform”:

- Remove. In the general Community Standards, Facebook provides information on the content subject to removal – hate speech, violent and graphic content, adult nudity and sexual activity, sexual solicitation, and cruel and insensitive content.

- Reduce. Regarding false news, reduction includes (a) “Disrupting economic incentives for people, Pages and domains that propagate misinformation,” (b) “Using various signals, including feedback from our community, to inform a machine learning model that predicts which stories may be false,” (c) “Reducing the distribution of content rated as false by independent third-party fact-checkers.”

- Inform. “Empowering people to decide for themselves what to read, trust and share by informing them with more context and promoting news literacy.” Strategies for informing, including the context button, page transparency, and context warning labels, are documented in the examples that follow.

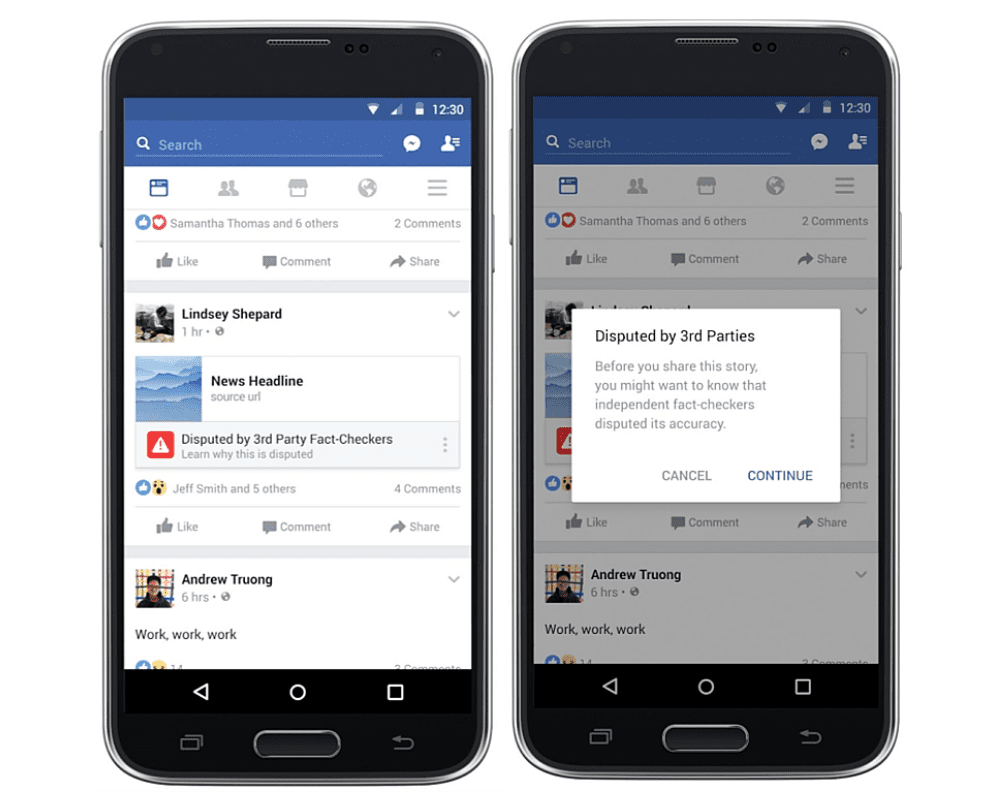

The first iteration of content warnings, labeled as “Disputed by 3rd Party Fact-checkers.” A red exclamation icon displays next to the plain text warning, within a banner under the link preview. Link: https://about.fb.com/news/2016/12/news-feed-fyi-addressing-hoaxes-and-fake-news/

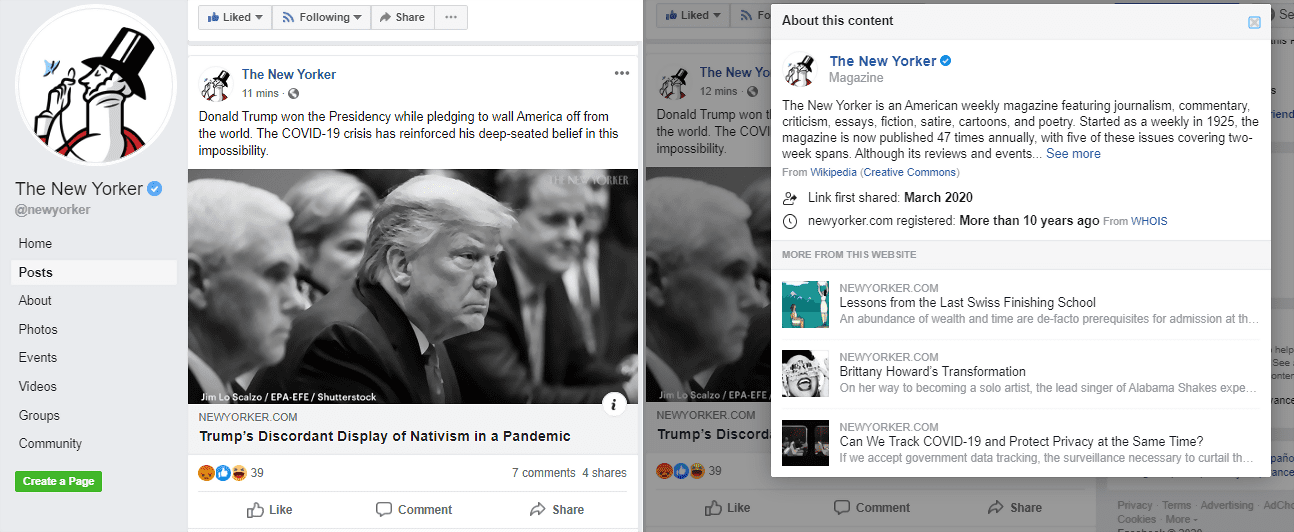

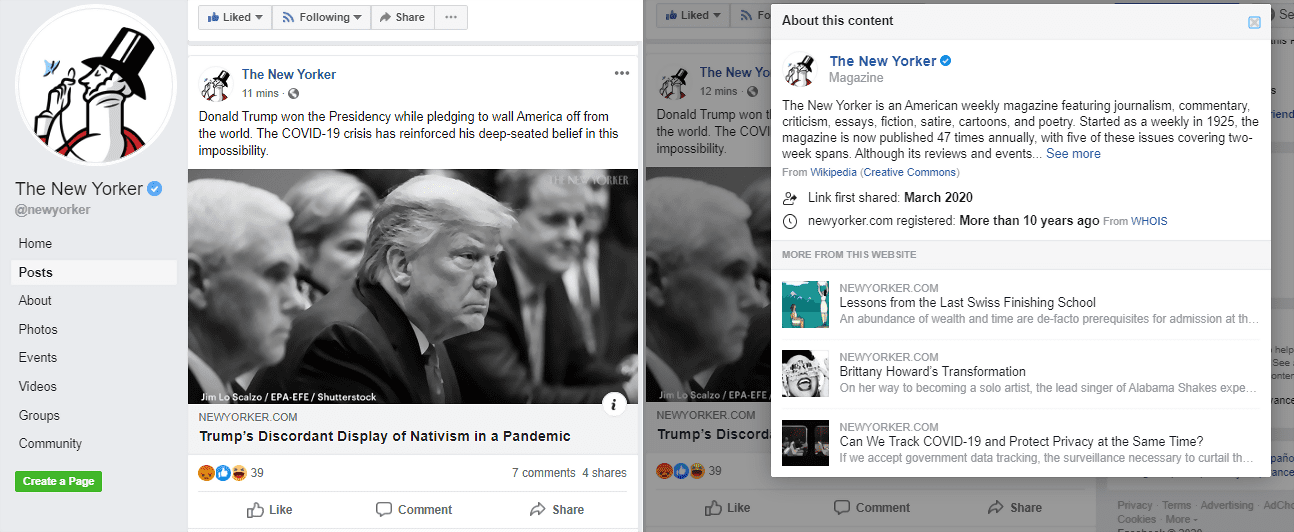

Facebook provides information on third-party links, with publisher context on the news sources and content creators. This is a click-through button icon [(i)], with information such as the publisher’s Wikipedia entry, related articles on the same topic, the number of Facebook shares and to which pages or profiles, as well as a link to the publisher’s home page. Link: https://www.facebook.com/newyorker/posts/10157422309333869

The evolution of the labeling strategy is marked by certain key moves and company announcements. In December of 2016, Facebook published a Newsroom announcement, “Addressing Hoaxes and Fake News,” stating that content flagged for misinformation would be labeled as “Disputed by 3rd Party Fact-checkers” in a banner below the content. However, there was no interstitial over the link image, and no evaluation of the content’s veracity was provided. This first version is a static label, and it does not provide much friction when scrolling through the Facebook feed.

The evolution of Facebook platform labels and policies focuses on the metric of harm, to protect the safety and integrity of its users. These strategies adjust in response to content with a high likelihood of causing harm, such as medical misinformation and false news that interrupts the democratic process. In efforts to increase information transparency on the platform, Facebook launched publisher context labels in a Newsroom announcement in the spring of 2018. The in-platform context includes an abundance of verified information on the publishers, which is accessible after users click through the link.

Facebook Platform and the 2020 U.S. Elections

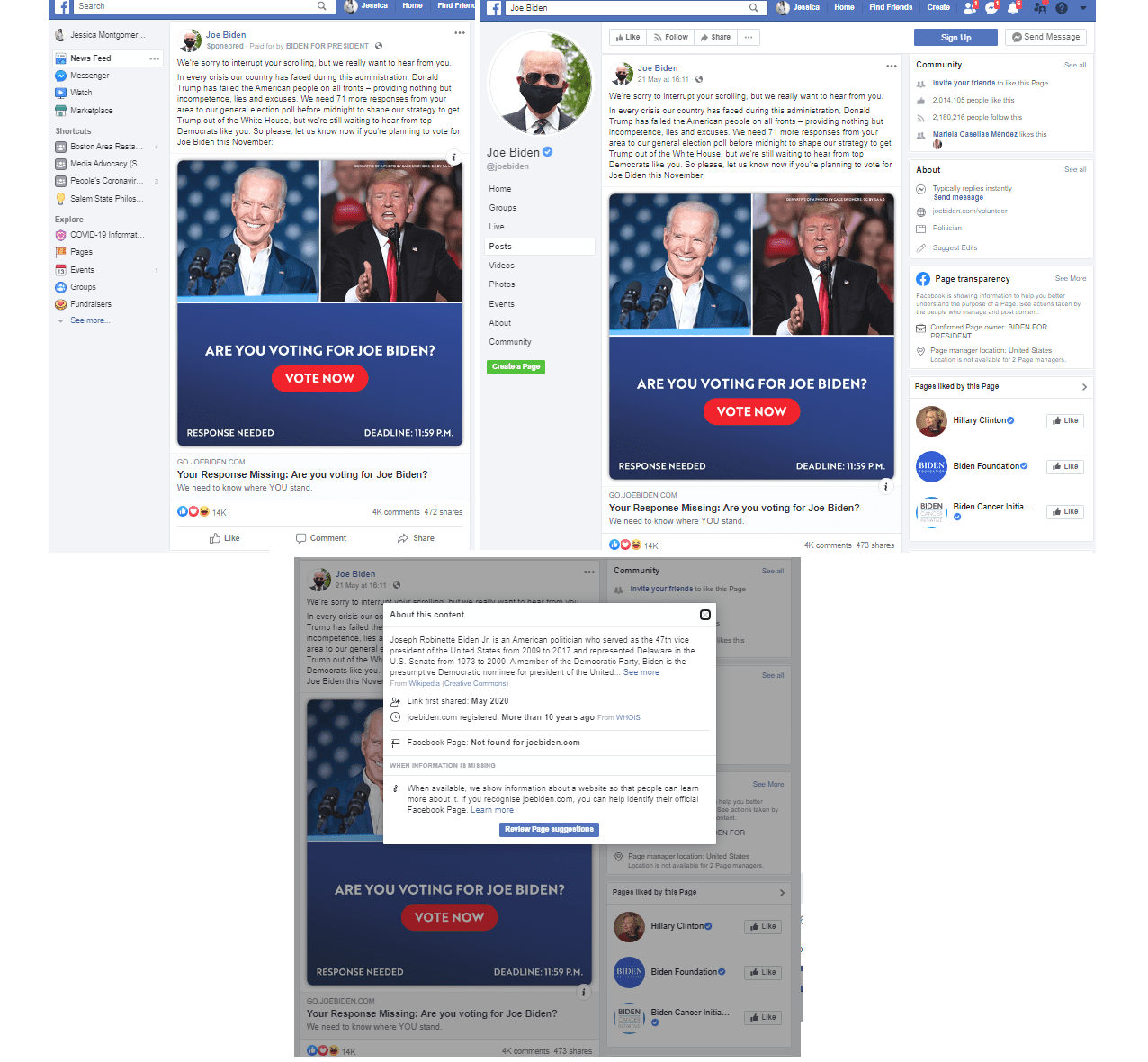

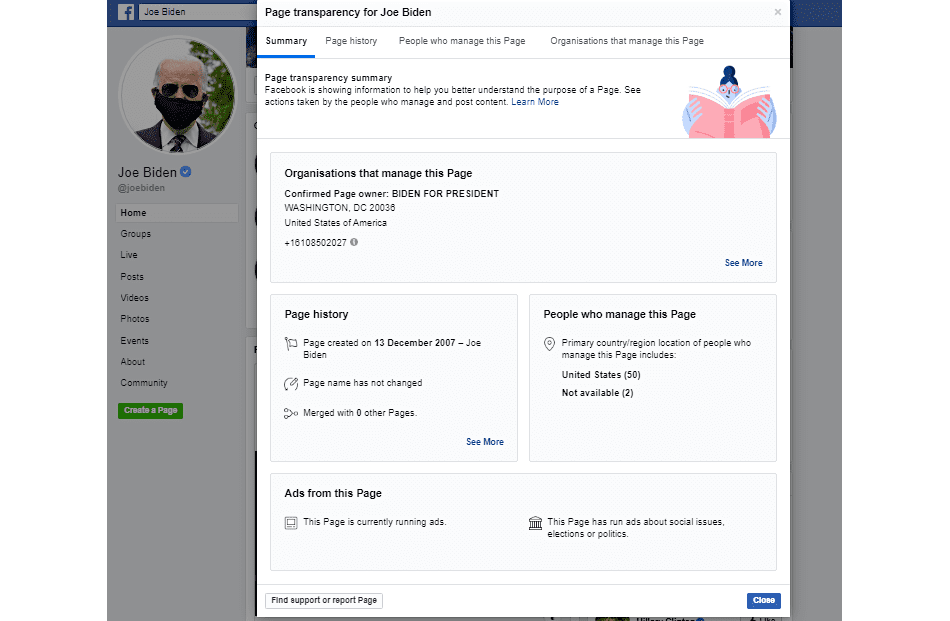

Increased action for transparency on public pages and third-party linked publishers soon expanded to advertisement transparency in April of 2018, in particular for political ads on Facebook. Advertisements carried grey subtext beneath the post publisher title stating that the advertisement is “paid for by” an individual or organization, along with information on sponsor verification and a third-party link to further information on political candidates or social issues. This notice also acts as a click-through button to view the verified sponsor’s page and view Facebook advertising policies. The verified page on the candidate or sponsoring organization must also be available for public view. These pages include a “Page Transparency” section, with information on page metrics, such as when the page was created and page moderators.

Under the title for the Facebook page that published the content, grey text states “Sponsored” and “Paid for by.” Users can click on “Sponsored” to go to the advertising information and policies web page on Facebook, and the sponsor’s name which follows “Paid for by” can be clicked through to see the sponsor’s Facebook page. Link: https://www.facebook.com/joebiden/posts/10157016663521104

When a user goes to a sponsor’s Facebook Page, they can scroll down to see the “Page Transparency” information. Link: https://www.facebook.com/joebiden/

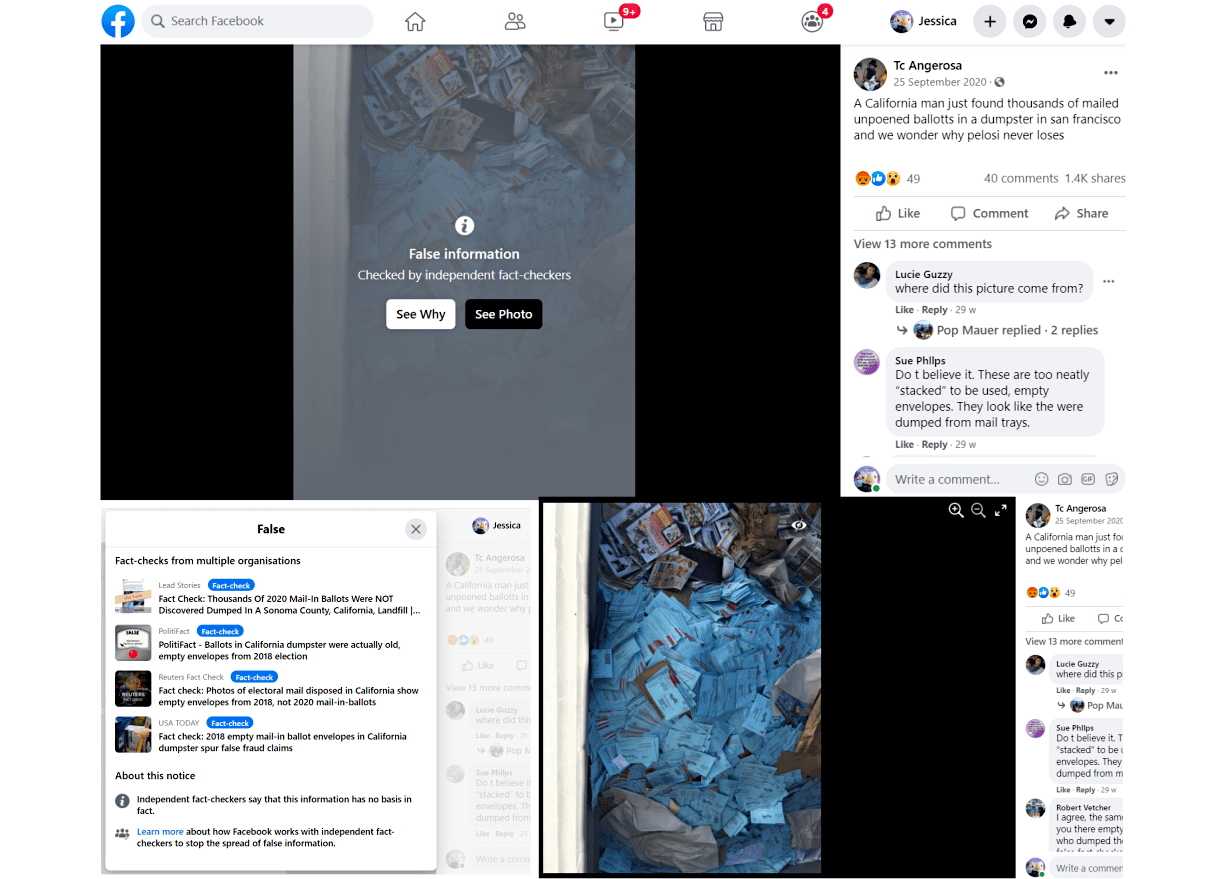

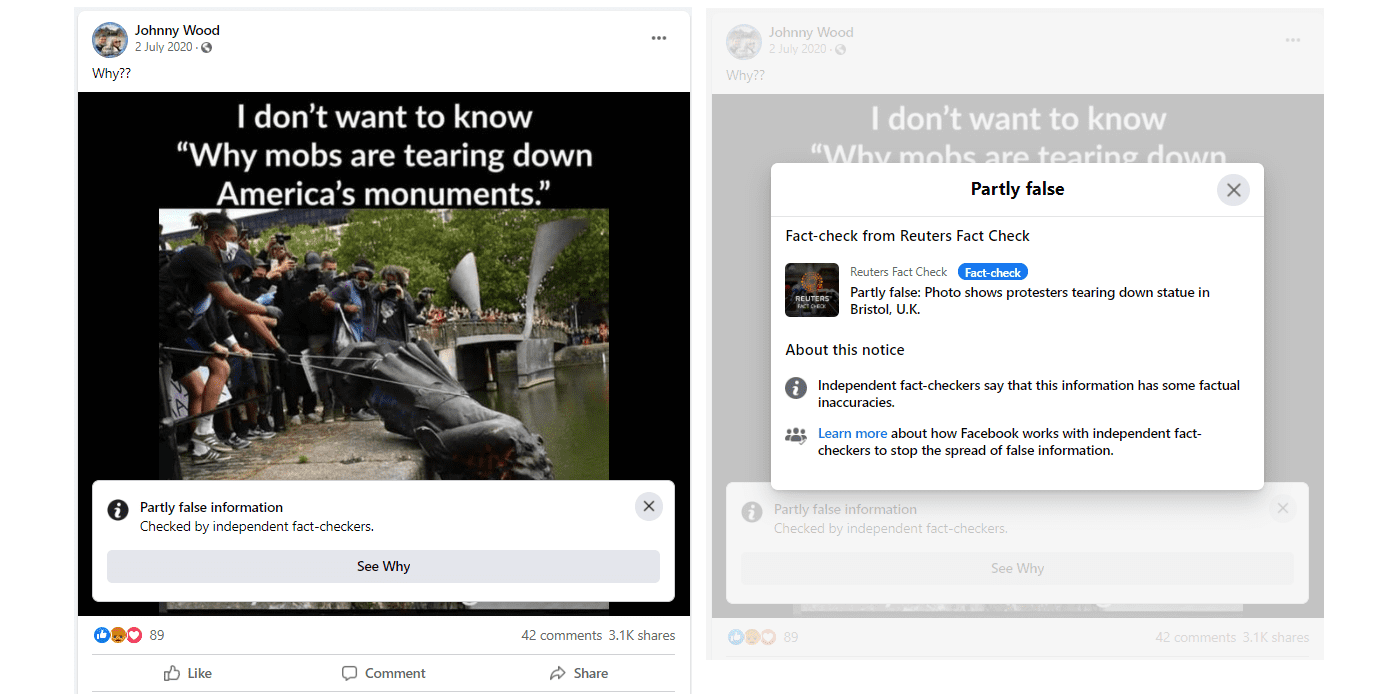

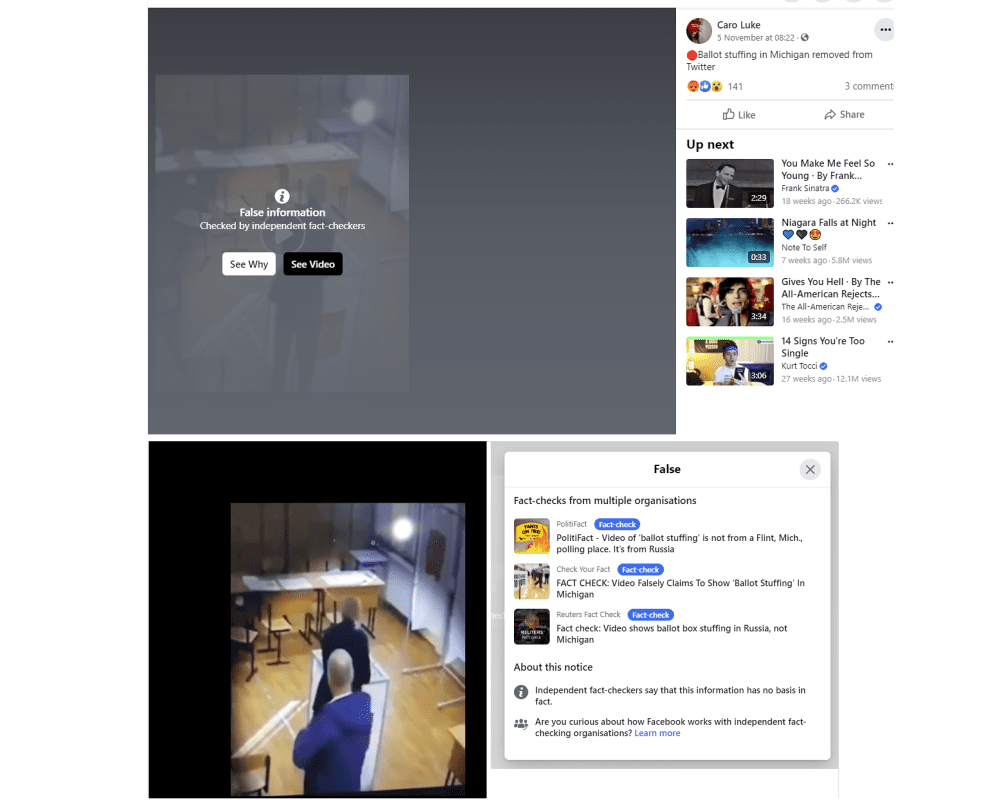

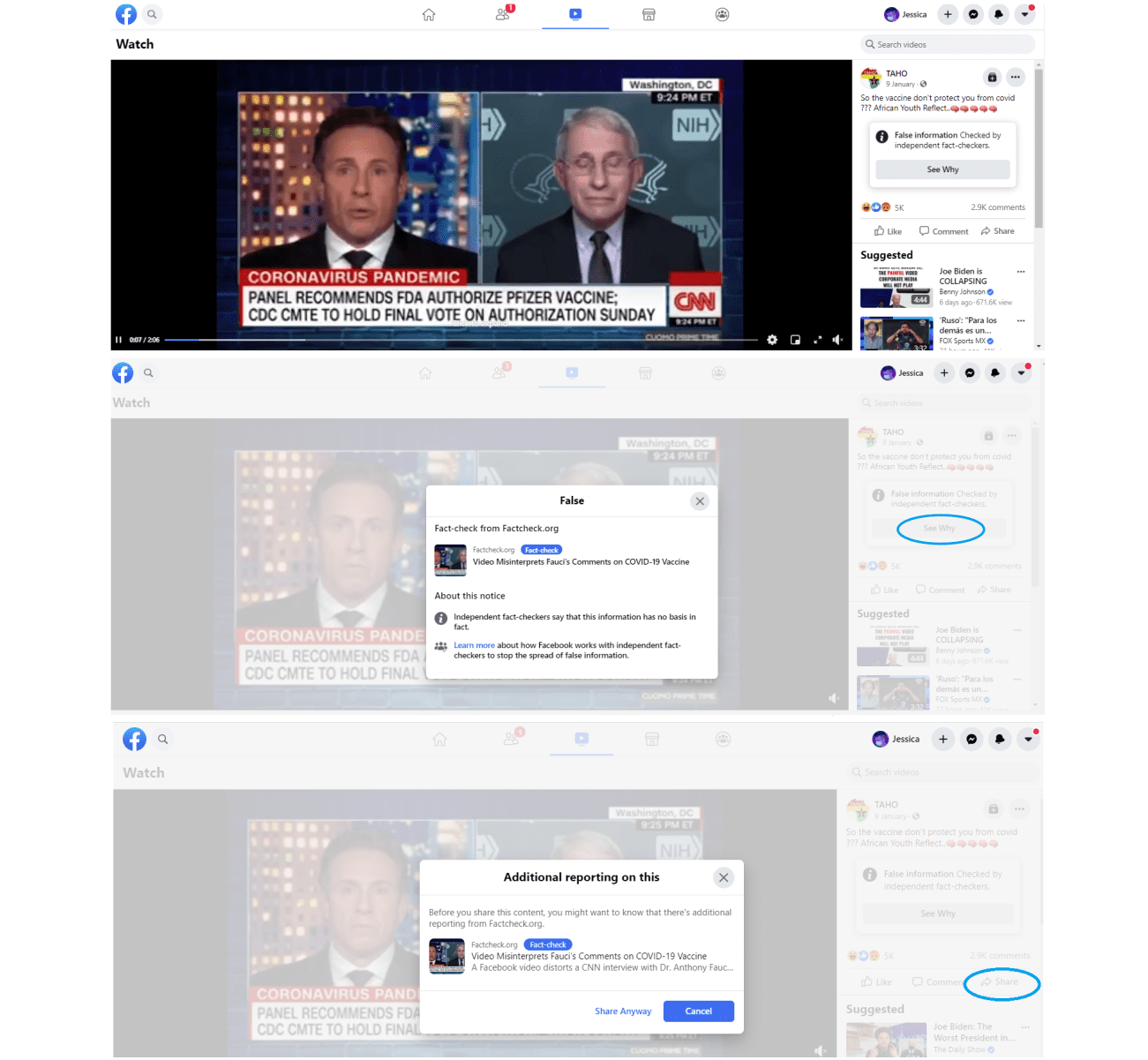

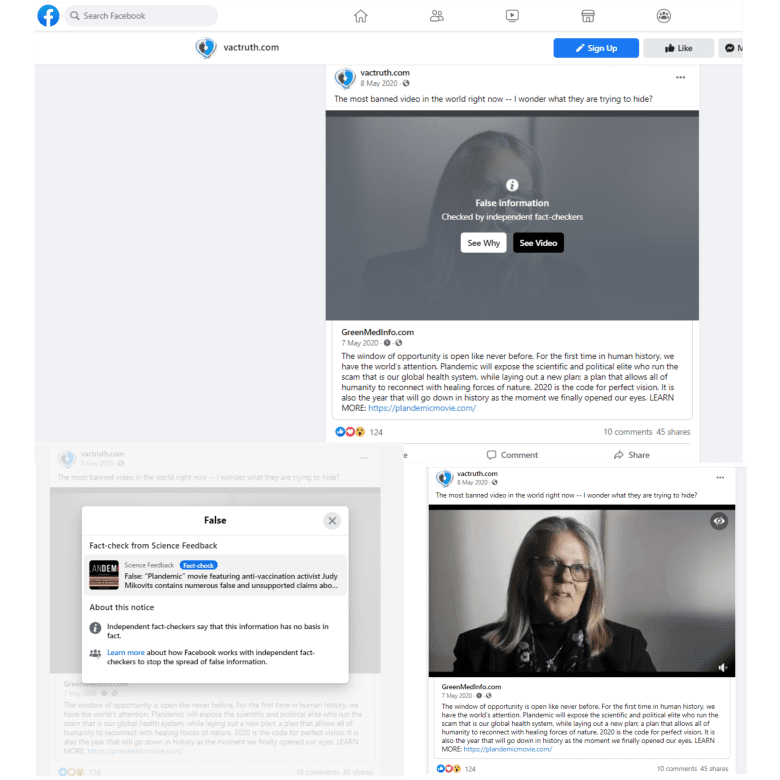

In October of 2019, the majority of labeling and content moderation updates went into effect, as Facebook Newsroom announced strategies for misinformation and harmful content regarding political topics, “Helping to Protect the 2020 US Elections.” Third-party fact-checks began having a range of ratings, most prominently “false information” and “partly false information.” In this updated version, misinformation perceived as harmful is hidden behind a blurred and grey interstitial, to prevent users from viewing the content before acknowledging the warning for misinformation. When users opt to click through and “see” the content, the interstitial and warning labels disappear entirely, only to be reinstated if the page is refreshed or the link is opened in a different tab.

An update from the 2016 implementation. A blurred interstitial appears over content that has been “Checked by independent fact-checkers,” and will also indicate an evaluation such as “False information” on top of the interstitial. Users may choose either to see the content beyond the interstitial, or to click “See Why” for information on the fact-check. This click-through opens to verified articles from the third-party fact-checkers. The click-through also includes “About this notice” which briefly explains the evaluation, and includes a link to the Facebook policies on fact-checks. Link: https://www.facebook.com/photo.php?fbid=10158399300208617&set=a.495905068616&type=3

An update from the 2016 implementation. A horizontal banner rests on the bottom portion of the labeled content, with plain text notifying “Partly False information.” Users may choose to click on “See Why” for information on the fact-check. This click-through opens to verified articles from the third-party fact-checkers. The click-through also includes “About this notice” which briefly explains the evaluation, and includes a link to the Facebook policies on fact-checks.

Link: https://www.facebook.com/johnny.wood.169/posts/10157696275499195

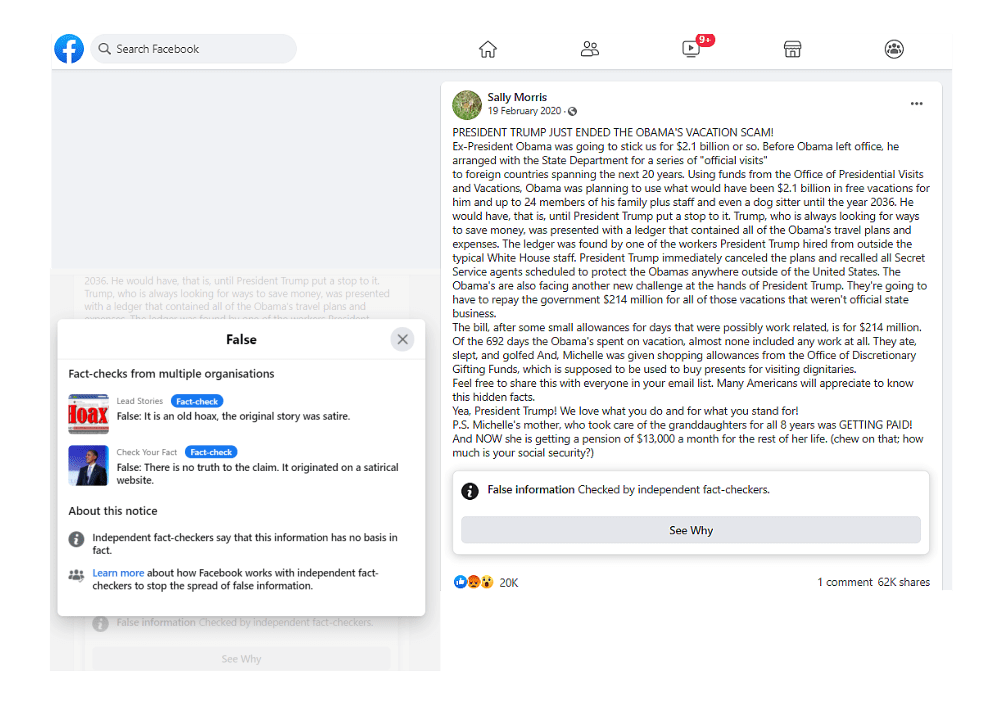

Throughout 2020, fact-check content labels on Facebook began to appear on text-only user posts. Previously, content warning labels only appeared as interstitials over images, video, and visual elements for third-party links. Since the text-only labels appear as banners at the bottom of posts, they may provide less friction than interstitials do. This is an important turning point in Facebook’s moderation approach.

Text-only user content that receives a label appears with a banner below the post, with a click-through option “See Why” to view the verified fact-check information.

Link: https://www.facebook.com/sally.morris.313/posts/2719497178146273

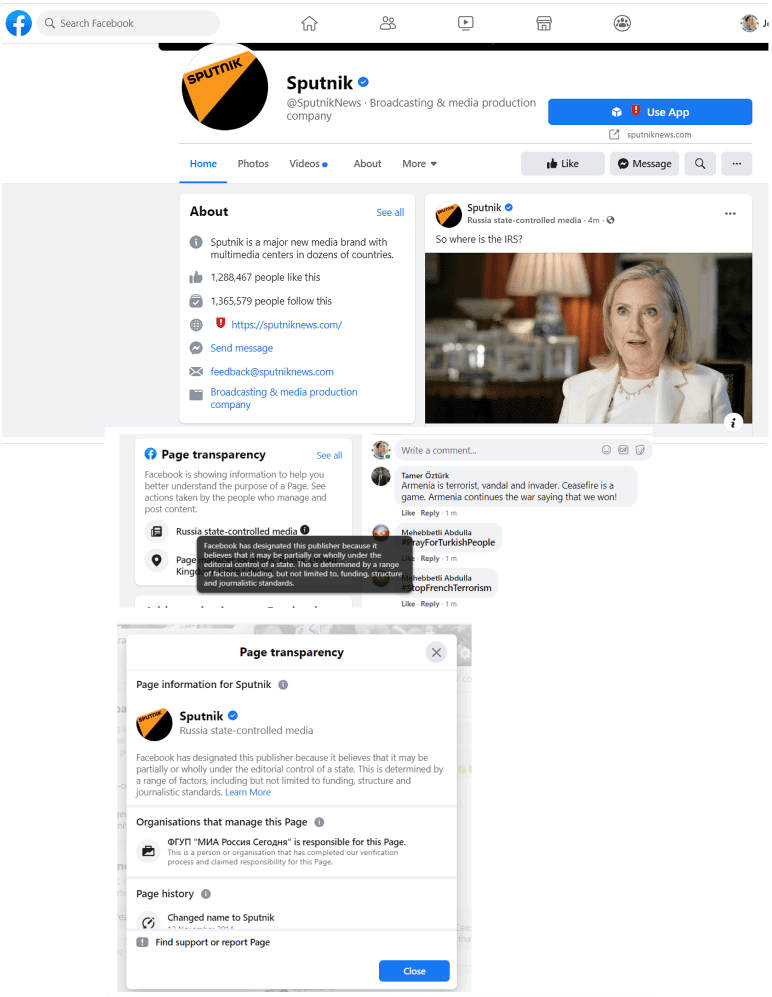

The increase in content transparency outlined in the announcement “Helping to Protect the 2020 US Elections” also includes the labeling of “state-controlled media.” This label is visible in grey subtext under the publisher title for individual content, and on their Facebook pages when scrolling down to view the “page transparency” section. The label for state-controlled media is more apparent on individual content, as opposed to relying on users to scroll down to page transparency in the page view.

The label displaying “state-controlled media,” as well as the country or government sponsoring that media, will display on relevant posts beneath the page name that published the content. Clicking through on this notice provides “About Page” information, which is also available in the Page Transparency section, where the page is likewise identified as “state-controlled media.”

Link: https://www.facebook.com/SputnikNews/?ref=page_internal

Content that is “manipulated” is differentiated in Facebook standards from misinformation. The platform made an announcement in January 2020 that the platform would remove manipulated media rather than label it. When following a Facebook link to removed content, a full-page message appears with a link to Facebook content policies. Beyond manipulated content, removal strategies were applied more heavily as viral topics in the United States, such as COVID-19 and the U.S. 2020 elections, grew in impact, in order to prevent the spread of misinformation perceived as harmful.

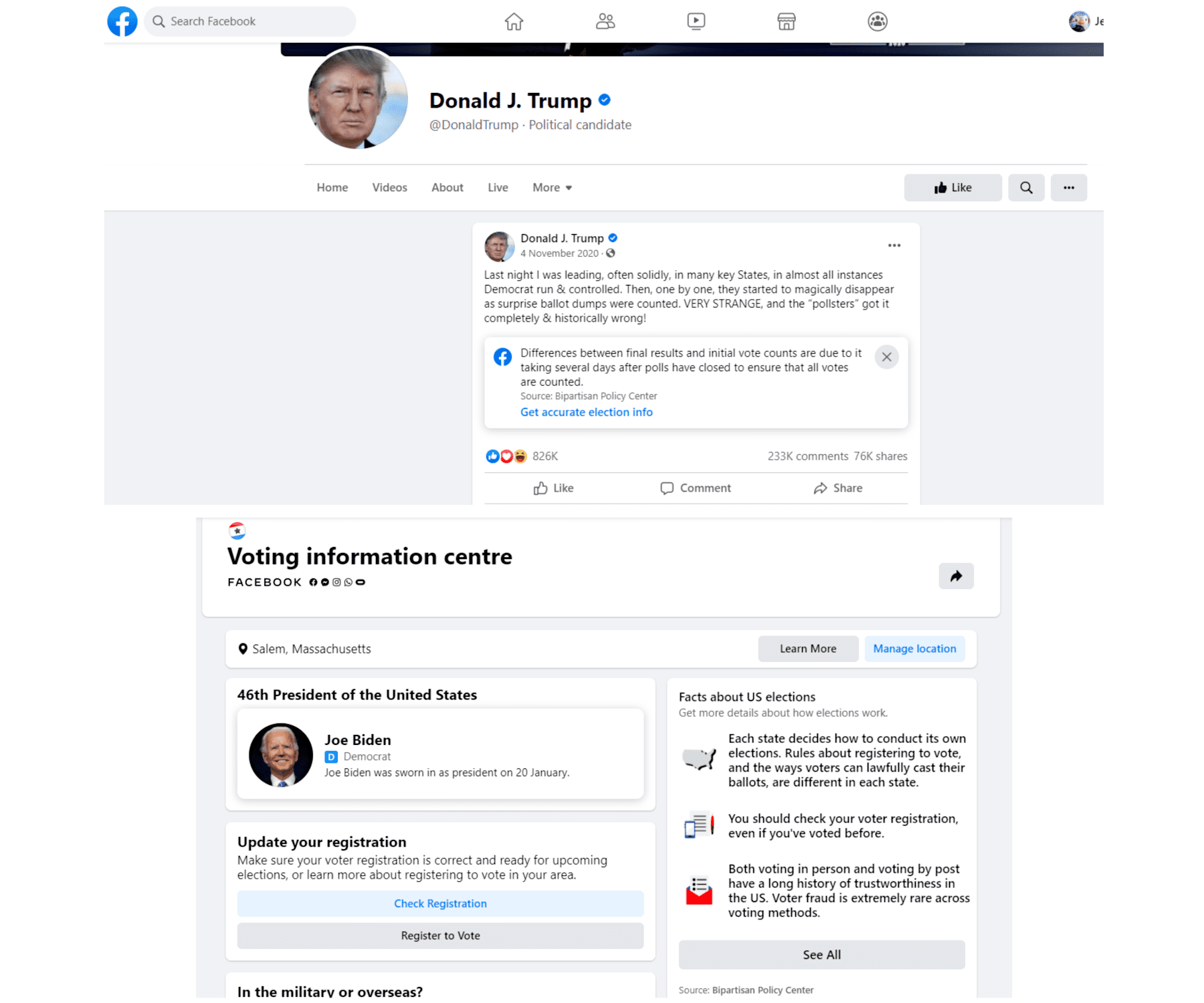

As the 2020 presidential elections drew nearer, Facebook expanded its content moderation policies regarding political content and misinformation. Particular criteria for political misinformation were further clarified regarding third-party fact-check ratings, such as misinformation on voting participation and fraudulent claims about the election process. In the Facebook Newsroom announcement “New Steps to Protect the US Elections,” in September of 2020, two new types of political content labels were implemented. First, the company began labeling content that may inhibit civic engagement, such as “fraud” or “delegitimizing voting methods,” with an interstitial content warning. Second, Facebook rolled out a label for “falsely declared victories,” displayed as a banner below posts with click-through information on election data, as opposed to an interstitial.

Facebook made a point to specifically fact-check content relevant to the 2020 Elections. Content warnings, with interstitials and click-through verified information, are placed over “content that seeks to delegitimize the outcome of the election or discuss the legitimacy of voting methods, for example, by claiming that lawful methods of voting will lead to fraud.” Link: https://www.facebook.com/caro.luke.1/videos/408870990138041/

When “any candidate or campaign tries to declare victory before the final results are in,” Facebook will provide a content warning banner, below the post, with a link to authoritative election results. Link: https://www.facebook.com/DonaldTrump/posts/10165757656305725

Facebook Platform COVID-19 Response

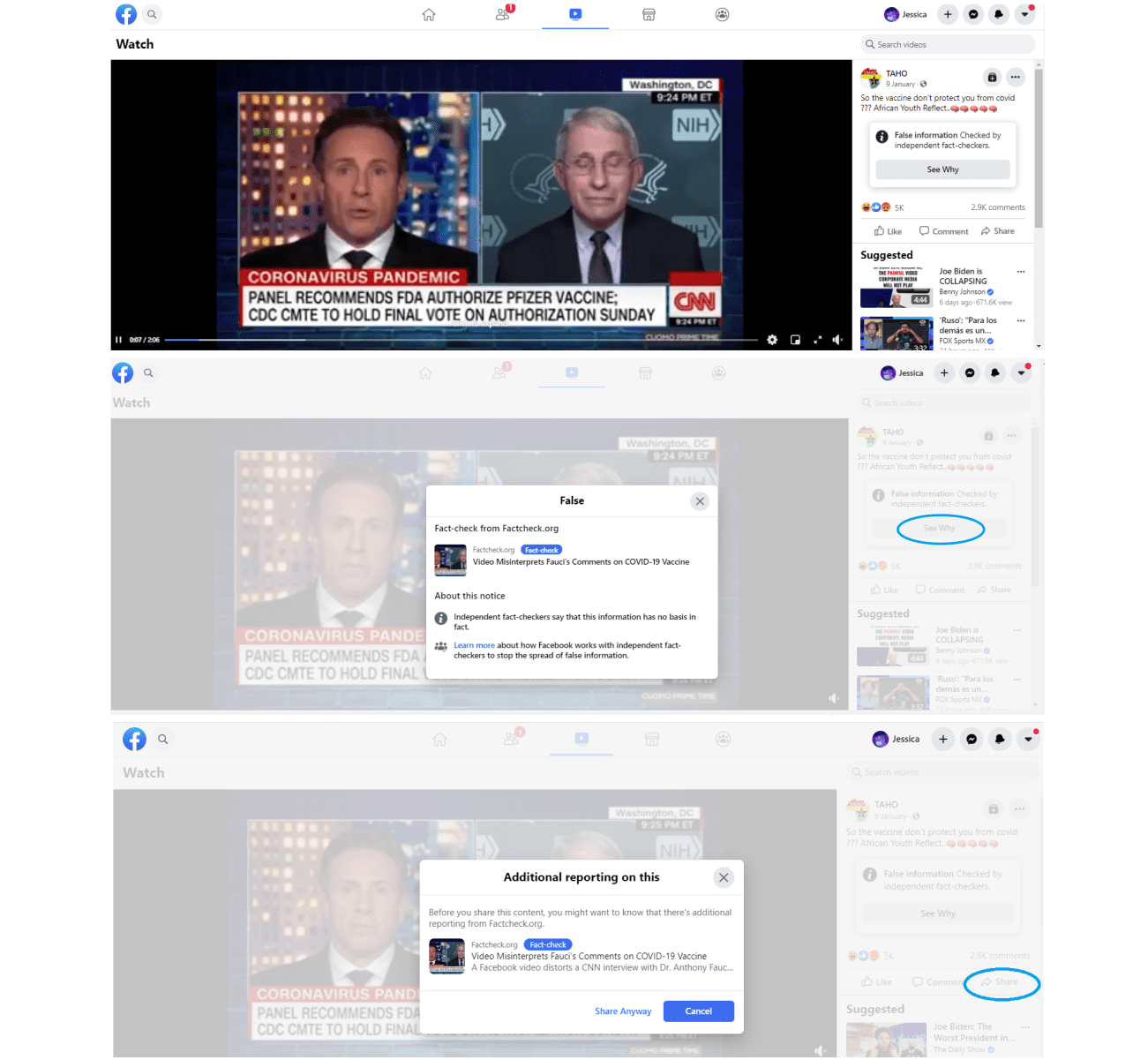

On March 25, 2020, Facebook announced its content moderation approach in response to COVID-19, including the ongoing application of third-party fact-check labels. The visual presentation of content warning labels did not change based on COVID-19 content. Harmful COVID-19 misinformation is placed behind interstitials, with the fact check rating on top, and click-through options for verified information. Applying established Facebook policy regarding misinformation to COVID-19 content was an attempt to provide consistency and transparency to content labeling, including the fact-check ratings, for users encountering labels on the Facebook feed.

When a user shares content on Facebook that has been rated by third-party fact-checkers, a notice pops up providing “Additional reporting on this.” This pop-up provides the verified fact-check article for correct information, and highlights the option to “Cancel” in a bold blue button, while de-emphasizing the option to “Share Anyway” in lighter text. Link: https://www.facebook.com/ezenwegbu.desmondo/posts/2803611089859451

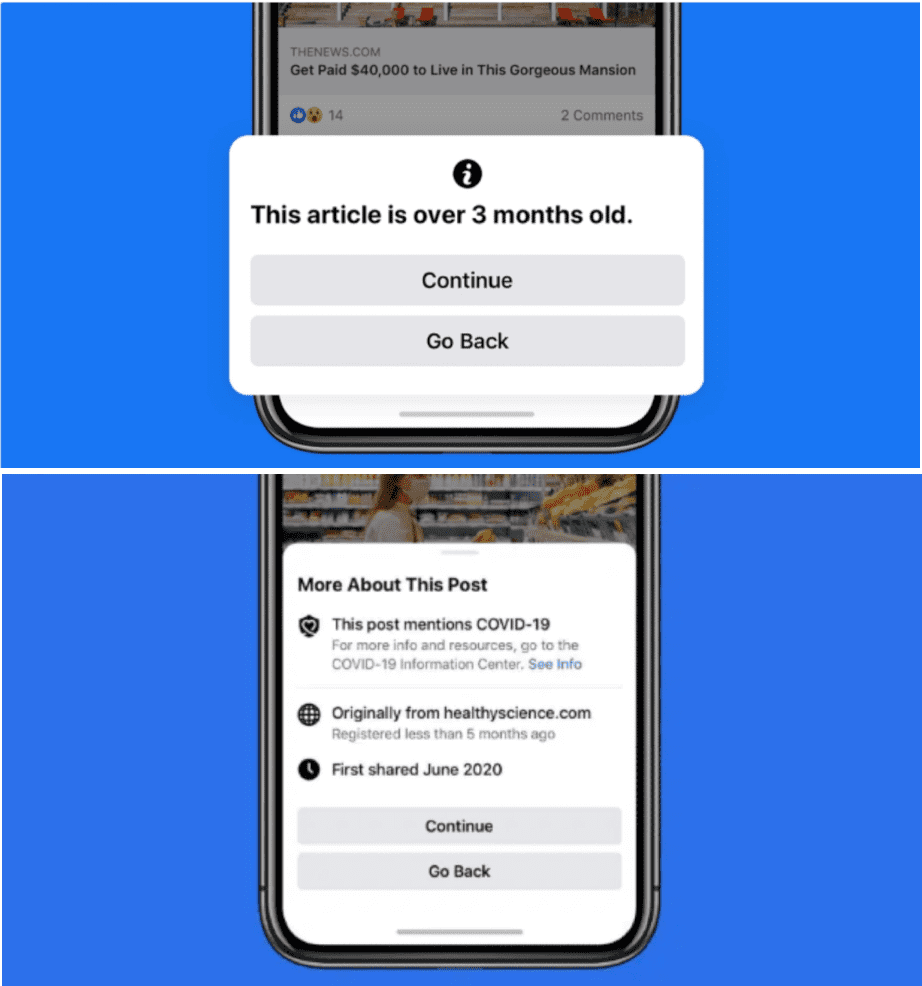

Facebook attempted to increase friction against misinformation in response to COVID-19 across a variety of areas of its business. For instance, the Facebook chat system “Messenger” adds labels for forwarded content, and also places a forwarding limit in the application. In April 2020, Facebook also included a pop-up notification for anyone who shared a piece of information that had been labeled with a content warning. Facebook encouraged users to “Cancel” their interaction with a bright blue button on this pop-up notice, but users could still continue to “Share Anyway” with the button option in muted grey. The April 2020 Newsroom announcement “An Update on Our Work to Keep People Informed and Limit Misinformation About COVID-19” also explains how these pop-up notices became more broadly used to provide contextual information on article links, including a notice that warns users that the article they wish to share is outdated. Users also retain access to the publisher context information, implemented earlier on.

For links on Facebook related to COVID-19 information, users will receive a prompt when sharing these links. This notice tells the user how “old” the article is, which may contextualize outdated information. For the Publisher Context button (i) on these links, Facebook provides information on when and by whom the article was published. Captured from Facebook Newsroom. Link: https://about.fb.com/news/2020/06/more-context-for-news-articles-and-other-content/

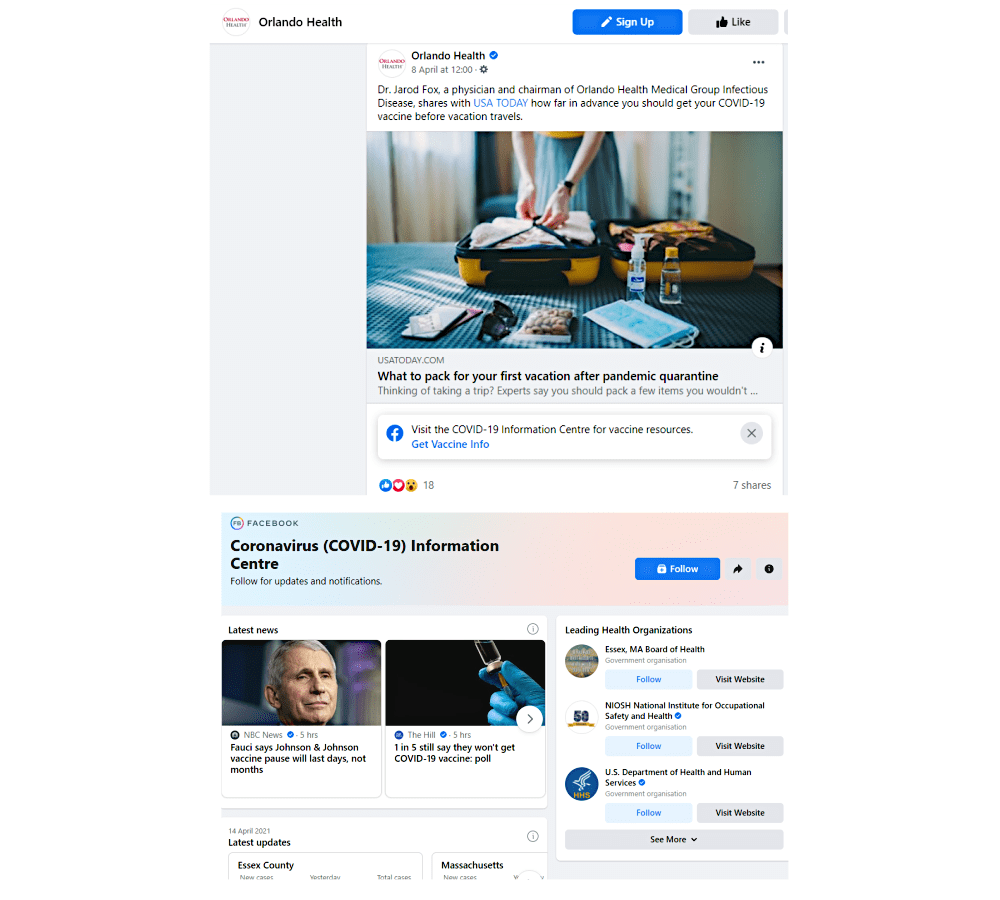

In March 2021, Facebook shared new platform strategies to encourage users to get the COVID-19 vaccine by labeling posts that reference vaccination. Content that mentions COVID-19 vaccination procedure, vaccination site information, or even users celebrating their vaccination, will appear with a banner label below the content, which provides click-through access to authoritative information on vaccines from the World Health Organization. Any harmful vaccination content remains subject to third party fact-check and removal guidelines for COVID-19 misinformation.

For content that mentions the COVID-19 vaccine, a banner appears under the content in plain text, with a click-through “Learn More” option highlighted in blue which directs users to a World Health Organization page. Link: https://www.facebook.com/orlandohealth/posts/10158411842304737

Other Expanding Labels on the Facebook Platform

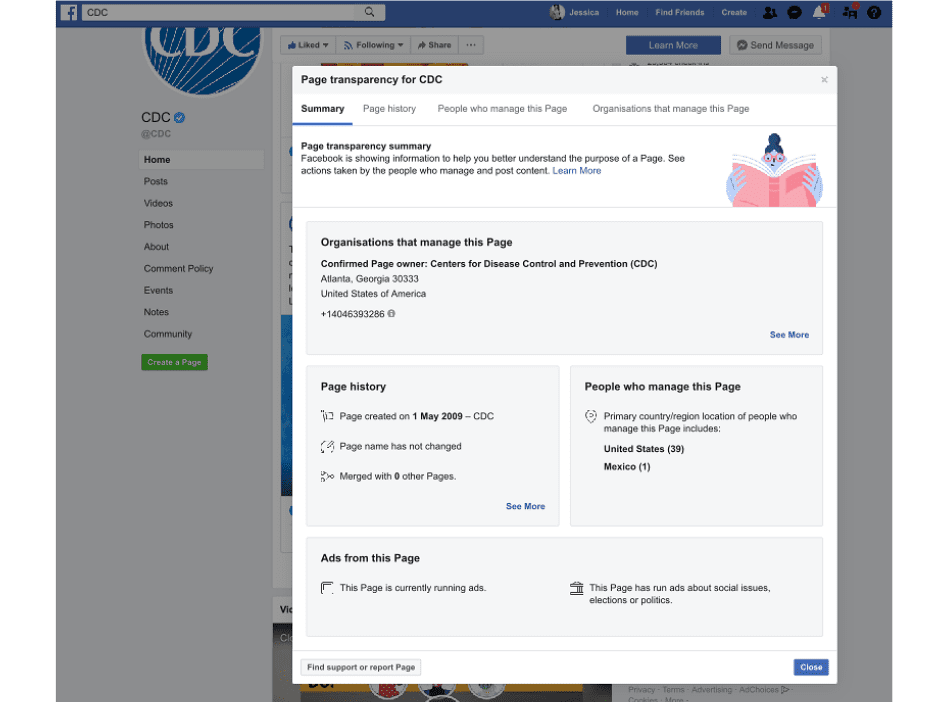

While new labels are added, and some functions are retained, Facebook frequently updates its user interface, changing the visual impact of labels and authoritative information. For instance, in the 2020 interface update – known as FB5, or the “New Facebook” – the “Page Transparency” page became more compact and brighter, with bolder text. There are also more statistics and information readily available in a page’s “About” sidebar section, such as membership and related links.

“Page Owner” transparency updates include a broader overview of page statistics, as well as more interactive links for information gathering.

Link: https://www.facebook.com/CDC/

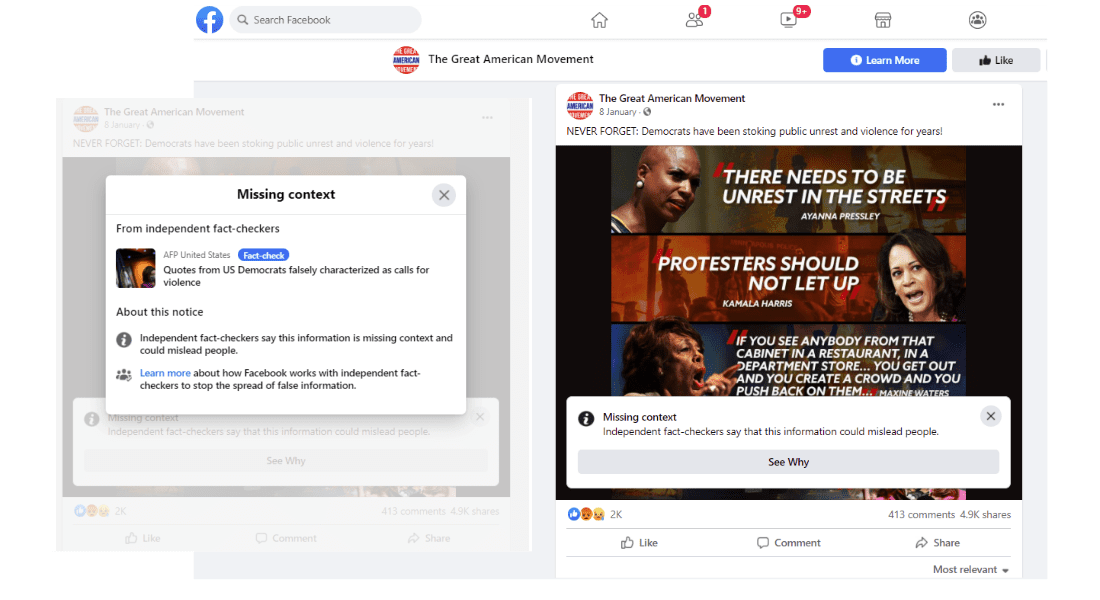

While manipulated media was previously removed from the platform altogether, in August of 2020 Facebook announced new fact-check ratings for altered media and content that is missing context. “Altered” photos and videos are displayed behind an interstitial, with the rating placed on top. However, “Missing context” labels are displayed as a banner below the post, providing potentially less friction.

Content rated with a banner for “Missing Context” can potentially misinform users without additional context. Users can click on “See Why” for verified fact-check information and a link to content policies on Facebook. Link: https://www.facebook.com/GreatAmericanMovement/posts/2759538157639798

For content that has been altered in a way that can “mislead people,” an interstitial appears over content that is an “Altered Photo” or “Altered Video.” Users can click through to view the content, or “See Why” for verified fact-check information and a link to content policies on Facebook. Link: https://www.facebook.com/ezenwegbu.desmondo/posts/2803611089859451

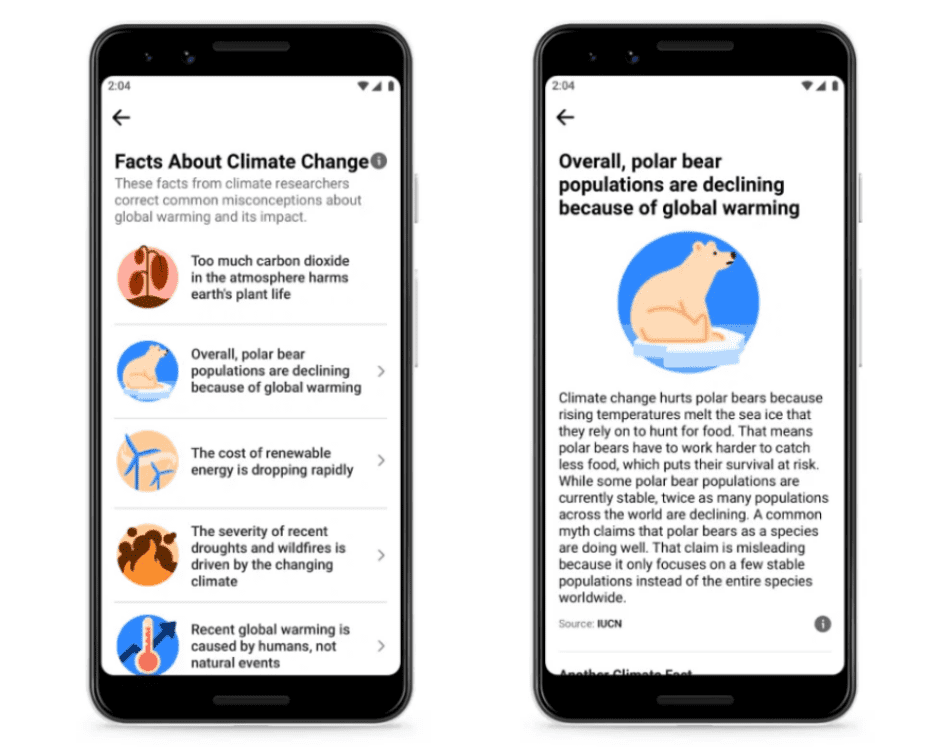

At the start of 2021, new labels on climate change-related information began to be applied, which direct users to Facebook’s Climate Science Information Center. Facebook’s goal is not only to moderate misinformation, but also to connect users with credible climate change information, as stated in the Newsroom announcement.

A context banner will appear below posts that discuss climate change and environmental topics, with a plain text warning and a click-through “Explore” option in blue text to redirect users to Facebook’s Climate Science Information Center. Captured from Facebook Newsroom. Link: https://about.fb.com/news/2021/02/connecting-people-with-credible-climate-change-information/

Groups and Pages on the Facebook Platform

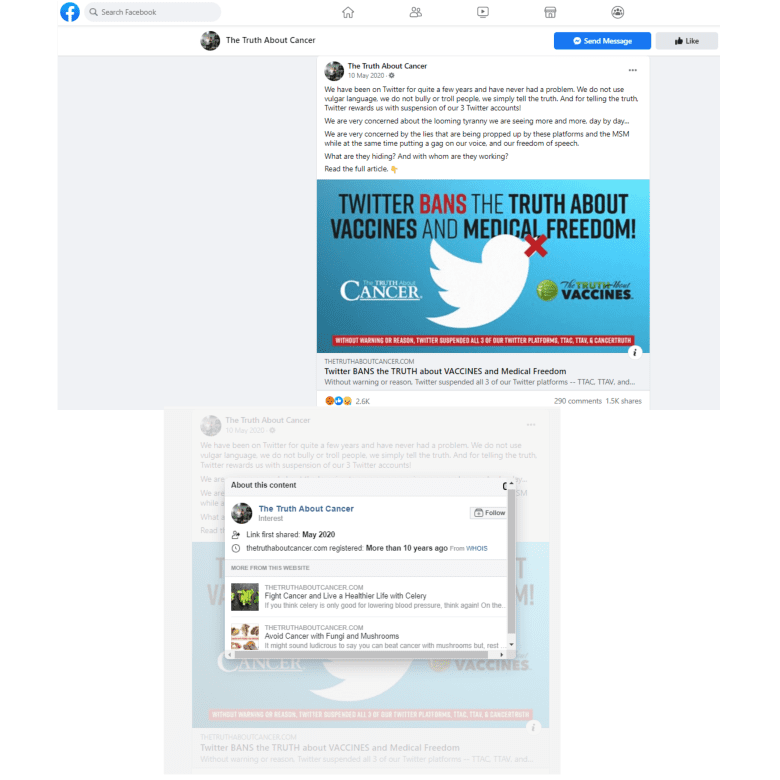

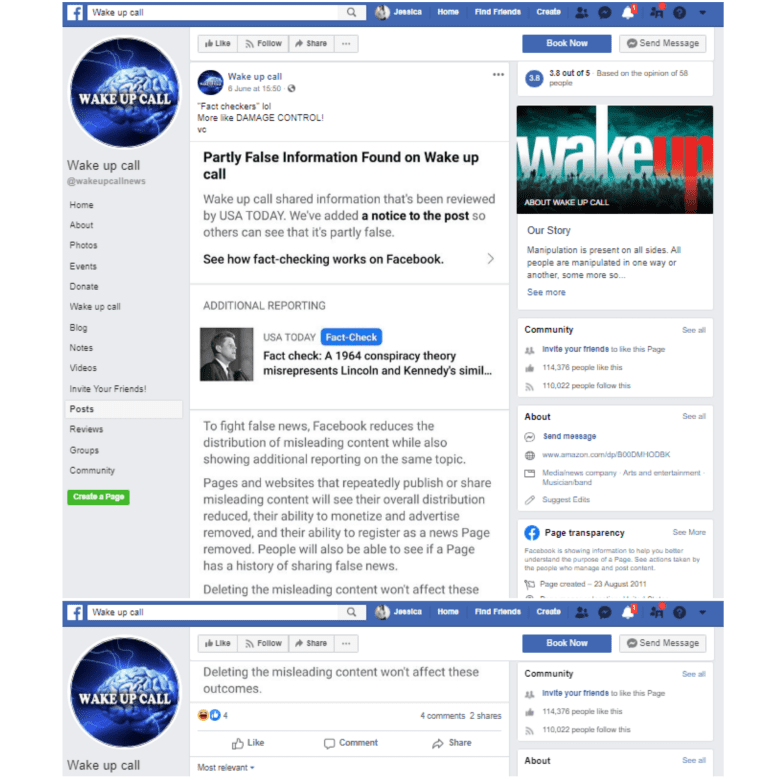

With the increased volume of misinformation and hoaxes amid the presidential elections and global pandemic, content moderation policies in Facebook Groups evolved. Traditionally, Facebook regarded Groups as “private” spaces, with lessened content review. But in September 2020, Facebook announced new group moderation strategies, in “Our Latest Steps to Keep Facebook Groups Safe.” Page and Group content would now be subject to fact-check content warning labels, and transparency would increase through “publisher context” on Groups, providing information such as date of formation and number of members.

“Publisher Context” within Groups appears just as it does for content on the main feed, when a user shares a third-party link.

Link: https://www.facebook.com/thetruthaboutcancer/posts/3002554243171198

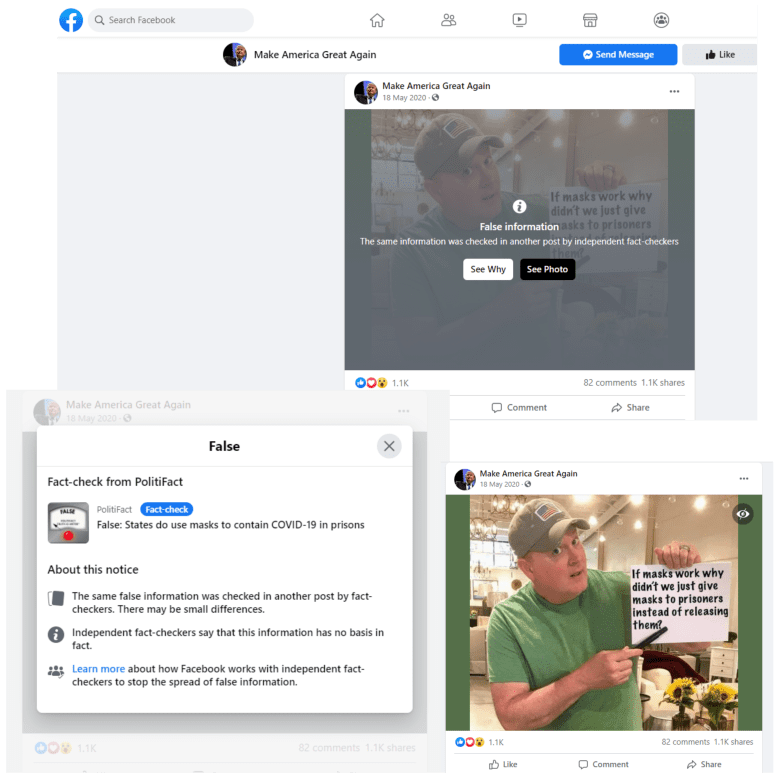

The increased Group moderation was in response to the growing political tensions in the context of the 2020 U.S. Election and related social issues. The Community Standards Enforcement Report that Facebook published in August 2020 included an abundance of policy changes regarding hate speech, and moderation against hate and terrorist groups. NewsGuard, a journalistic fact-checking organization, had observed a number of particularly dangerous groups on Facebook spreading COVID-19 misinformation. Content labels in groups appear in the same format as content in an open feed, although the implementation appears to be less consistent.

Facebook applies fact-check labels more regularly to content on the main feed than to content that appears in Groups or private Pages. As with all content warnings, fact-checked content will appear behind an interstitial with an evaluation.

Link: https://www.facebook.com/DJTMAGA/posts/2930576500324941 …

…Users may choose either to see the content beyond the interstitial, or to click “See Why” for verified information on the fact-check and “About this notice” for internal links. Link: https://www.facebook.com/vaccinetruth/posts/10157486219567989

Groups on Facebook are regarded as viral hubs of misinformation, in addition to hate speech, harassment, and organizing in harmful ways. While some content is moderated, there is also still an abundance of images and links with harmful misinformation that get viewed by thousands of Group members.

Misinformation policy enforcement in Groups and private Pages is not equitable or consistent. What may appear behind a content warning in one Group may be re-posted elsewhere without a warning. Link: https://www.facebook.com/wakeupcallnews/posts/3869092963106392

Instagram Overview

Developers Kevin Systrom and Mike Krieger created Instagram in 2010 as a photo and video sharing platform, and Facebook Inc. acquired it in 2012. Users can interact with posts on the feed by liking them, commenting on them, or bookmarking them to an archive, as well as exploring the featured “hashtag” system. Instagram also became one of the first platforms to heavily feature ephemeral 24-hour stories on user accounts. Facebook Inc. has treated Instagram as a social media platform compatible with Facebook, and the two platforms share many policies and moderation implementations. Users can link and share content between these two platforms relatively easily, with settings to link their accounts.

Instagram Content Labeling

Instagram examples are captured with mobile screenshots, since most of the warnings and click-through interactivity for content labeling are only available on the mobile application.

Since Facebook Inc. acquired Instagram, the two platforms share many content strategies and guidelines, including Instagram’s policies on misinformation and third-party fact-checkers. The Instagram Community Guidelines are presented more broadly than Facebook’s.

- Removal is applied to content for violations such as (a) “nudity,” (b) “self-harm,” (c) “support or praise terrorism, organized crime, or hate groups,” (d) offering “sexual services,” “firearms”, or otherwise exchanging illicit or controlled material illegally, and (e) “content that contains credible threats or hate speech.”

- Reducing content entails “filtering it from Explore and Hashtags, and reducing its visibility in Feed and Stories.”

- Informing users is reinforced on Instagram, as with Facebook, using the content warnings.

Instagram’s initial outlook on content moderation focused on enabling transparency for user profiles. This focus is outlined in the company update from August 2018, which provides users with tools to authenticate Instagram pages. “About This Account,” which can be accessed by clicking the midline ellipsis when viewing a profile page, informs users regarding when a page was created, advertising that has been published through the page, and other information on the page’s public online details.

Instagram and the U.S. 2020 Elections

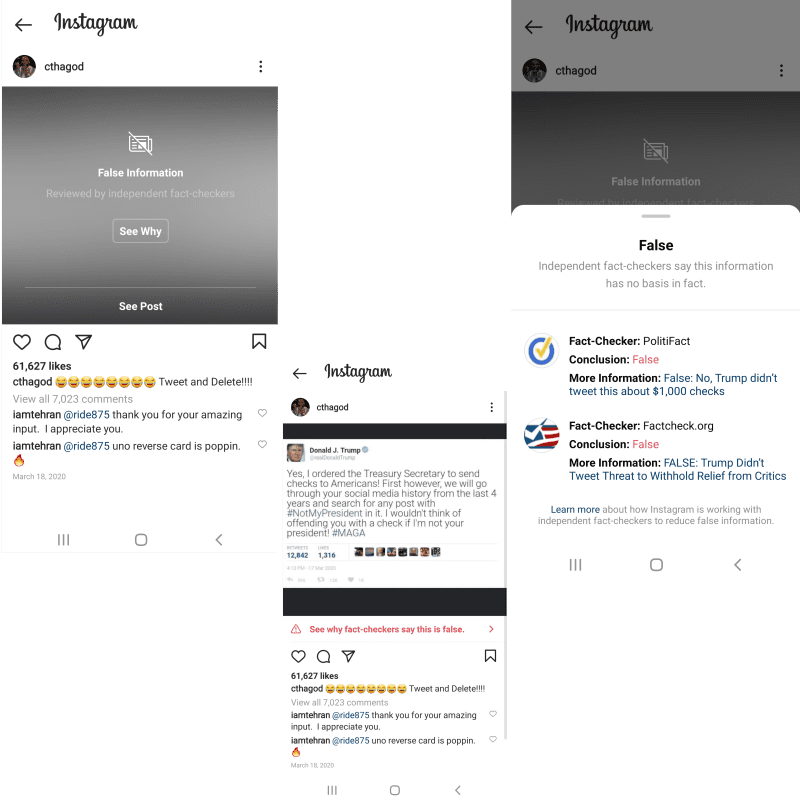

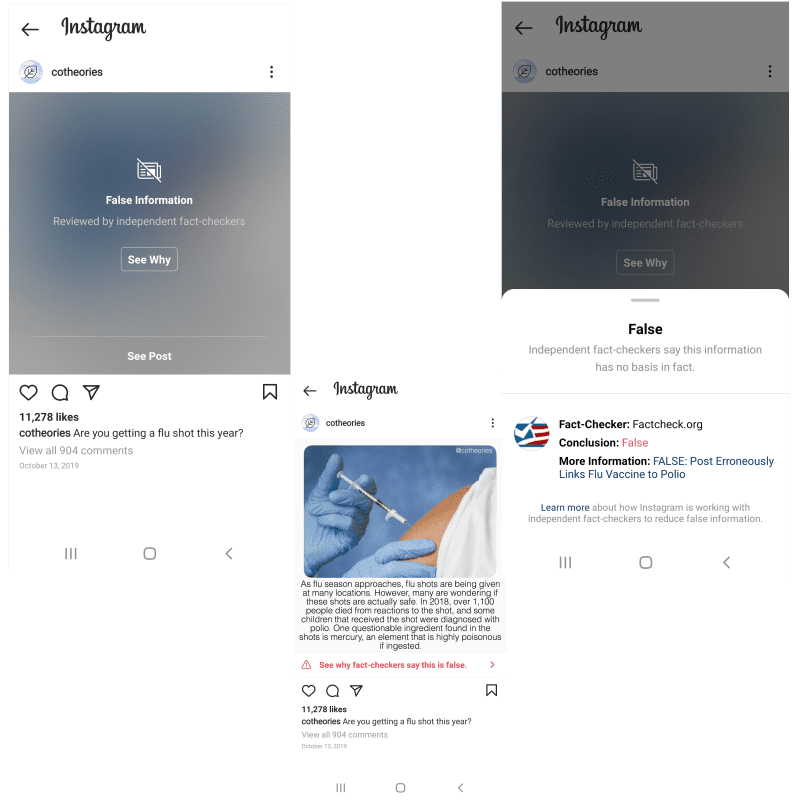

Instagram began implementing content warning labels and fact-check ratings in October 2019. These updates were a direct effort to uphold content integrity for the 2020 U.S. Election on both Facebook and Instagram. Until this point, Facebook Inc., which began content labeling and fact-check review three years prior on the Facebook platform, had not applied its content moderation policies or procedures to the Instagram platform.

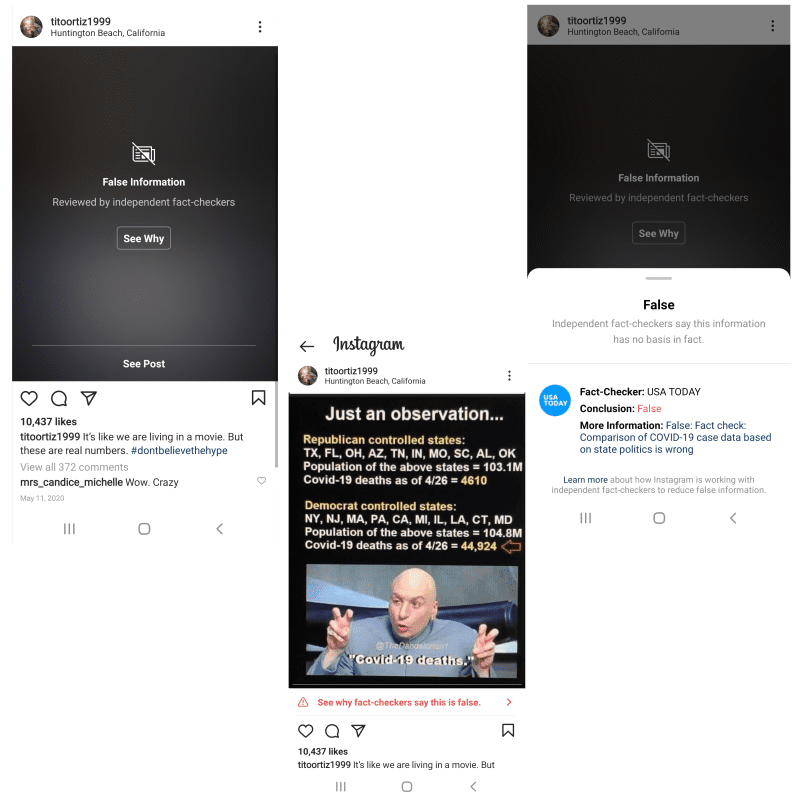

A blurred interstitial appears over content that has been “Checked by independent fact-checkers,” and will also indicate that this content contains “False information.” Users may choose either to see the content beyond the interstitial, or to click “See Why” for information on the fact-check. The “See why” button will provide users with the fact-check articles, as well as the information on why the content is rated as “False.” These evaluations are the same ones implemented on the Facebook platform. Link: https://www.instagram.com/p/B94WxG3lkoA/

Fact-check ratings on Instagram follow the same evaluation criteria as on Facebook, and any Instagram posts with a content warning label have the rating in front of a blurred grey interstitial over the post. Users can click through to “See Why” for rating information and verified articles. When the user opts to view the content, the content warning remains in view as a banner below the content in bright red text. This is different from viewing labeled content on the Facebook platform, which does not retain a warning when users opt in to view content. The additional warning in this step provides more friction for Instagram content.

For misinformation content on Instagram, a blurred interstitial appears over content that has been “Checked by independent fact-checkers,” and will also indicate an evaluation such as “False information” or “Partly False information.” Users may choose either to see the content beyond the interstitial, or to click “See Why” for information on the fact-check. The “See why” button will provide users with the fact-check articles, as well as the information on the given evaluation, such as “False Information” or “Partly False Information.” These evaluations are the same ones implemented on the Facebook platform. Link: https://www.instagram.com/p/B3kcFNyAyHb/?fbclid=IwAR1YQoavaVu

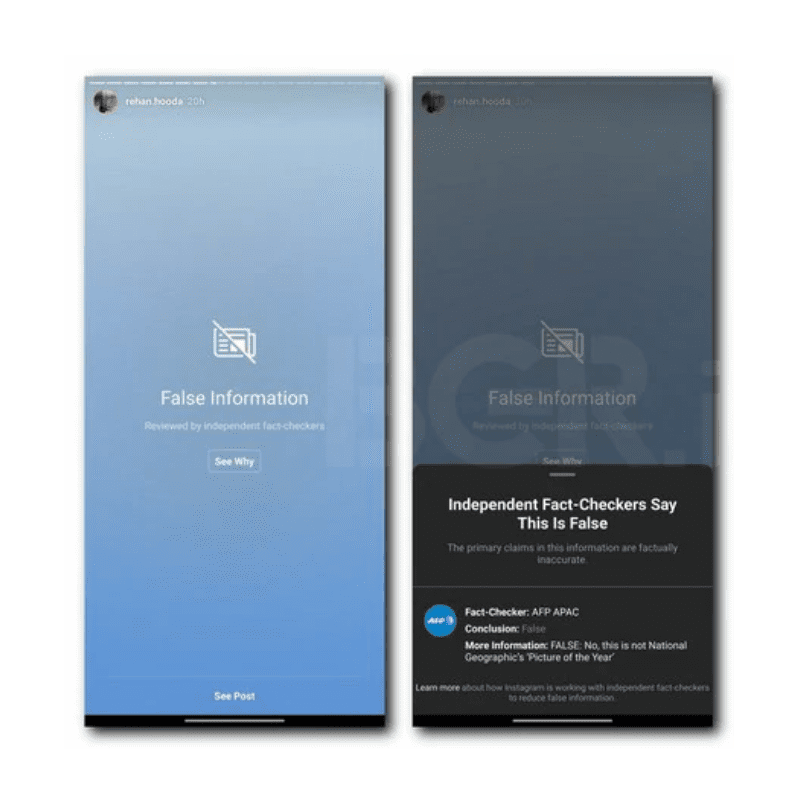

In addition to content posted on profiles and onto the feed, Instagram also reviews content on users’ ephemeral stories. As with feed content, the platform can implement content warning labels on stories, where the full frame of the story is hidden behind a blurred interstitial, and the rating is placed in front with “See Why” and “See Post” click-through options.

Just as with feed content, ephemeral stories will be labeled with an interstitial displaying an evaluation that has been “Reviewed by Independent fact-checkers.” The “See why” button will provide users with the fact-check articles and a brief evaluation summary. Captured from Facebook Newsroom. Link: https://about.fb.com/news/2019/10/update-on-election-integrity-efforts/

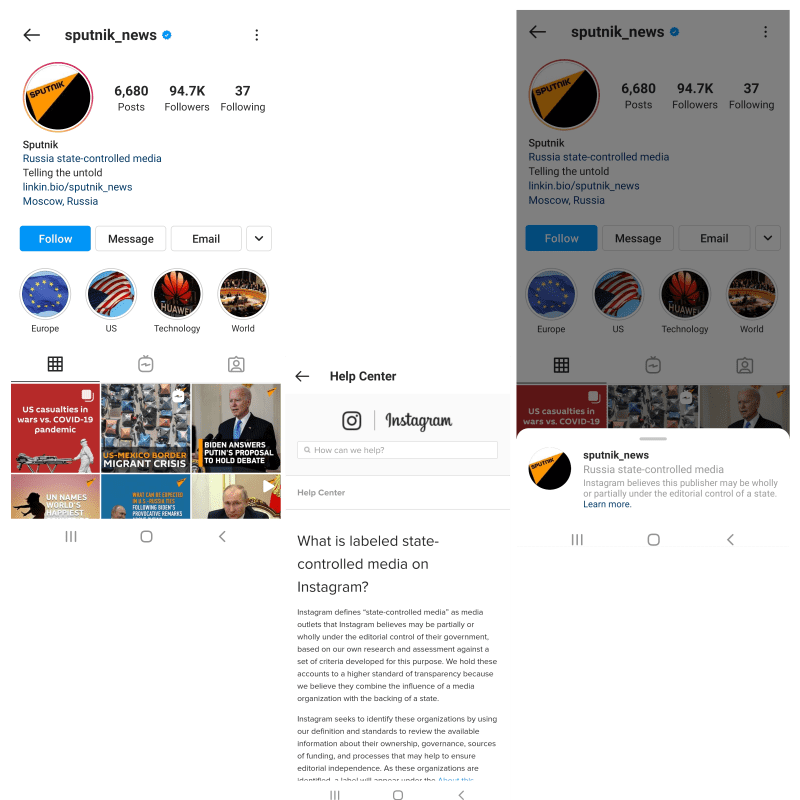

Along with these updates in response to election misinformation, Instagram expanded transparency alongside Facebook by adding a “state controlled media” label to relevant media profile pages, presented as blue click-through text underneath the profile page name.

Instagram labels “media outlets that are wholly or partially under the editorial control of their government as state-controlled media.” This label appears beneath the profile name, as an active link. This link prompts a pop-up with brief labeling information, with another link to Instagram policies regarding state-controlled media. Link: https://instagram.com/sputnik_news?igshid=16b4e7b8wmtke

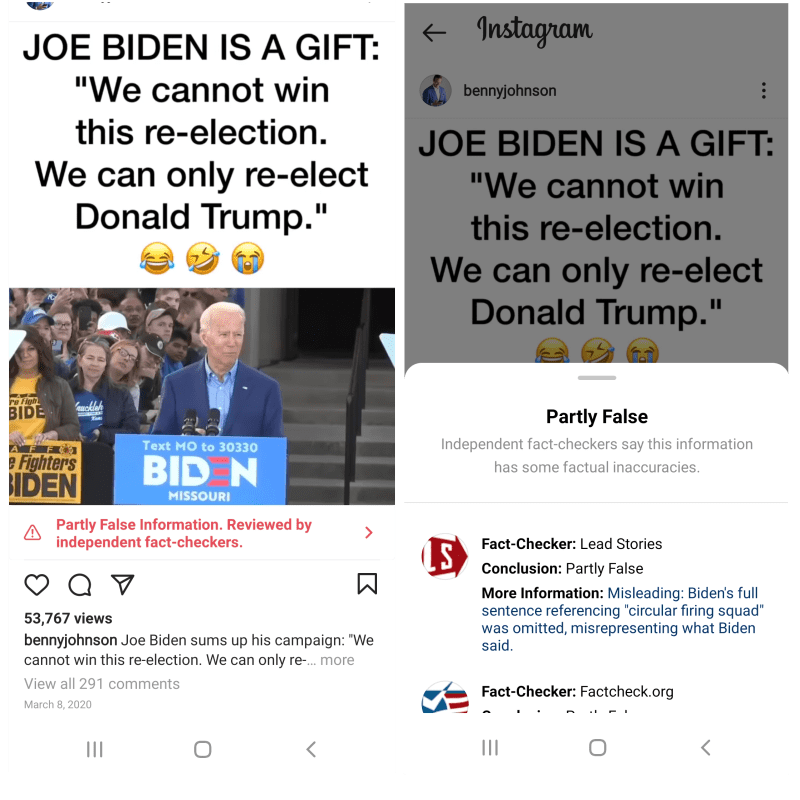

In December of 2019, Instagram updated misinformation moderation with fact checking and labeling content, and clarified policies on content beyond the elections and political topics. Given that Instagram is functionally a subsidiary, all facets of Instagram fact-checking refer to Facebook Inc., such as the ratings for “False information” and “Partly False information.”

A banner appears under the post, to indicate that this content contains “Partly False information.” Users may click on the banner to “See Why” for information on the fact-check. This will provide users with the verified articles from fact-checkers, as well as the information on why the content is rated as “Partly False.” These evaluations are the same ones implemented on the Facebook platform.

Link: https://www.instagram.com/p/B9ev9CHlaM_/?utm_source=ig_embed

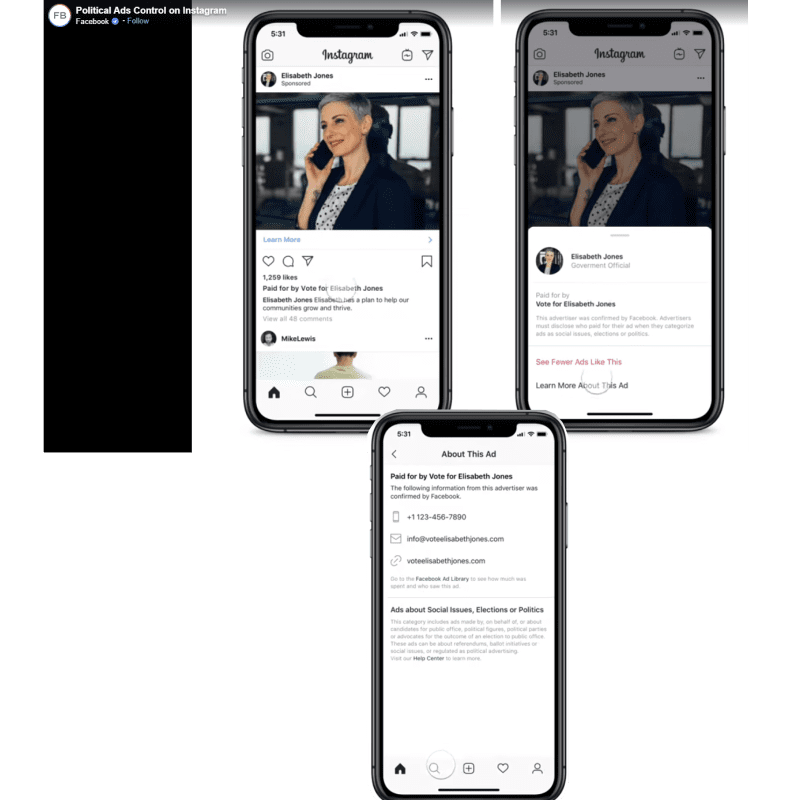

Drawing closer to the U.S. presidential elections, in June 2020 Instagram updated its advertising policies, in a Facebook Inc. Newsroom announcement, “Launching The Largest Voting Information Effort in US History.” This includes a “Paid for By” disclaimer for sponsored content, and an improved interface for data comparison in the Facebook Inc. Ad Library.

Advertisements are labeled as “sponsored” under the publisher’s name. Below the Like-count for the post is a “Paid for By” notice, which then displays a pop-up with the verified sponsor and a link to the sponsor’s page information. Also displayed with sponsor information are Instagram’s policies for “Ads about Social Issues, Elections, or Politics” and a link to the platform’s Help Center. Captured from Facebook Newsroom. Link: https://about.fb.com/news/2020/06/voting-information-center/

Instagram and COVID-19

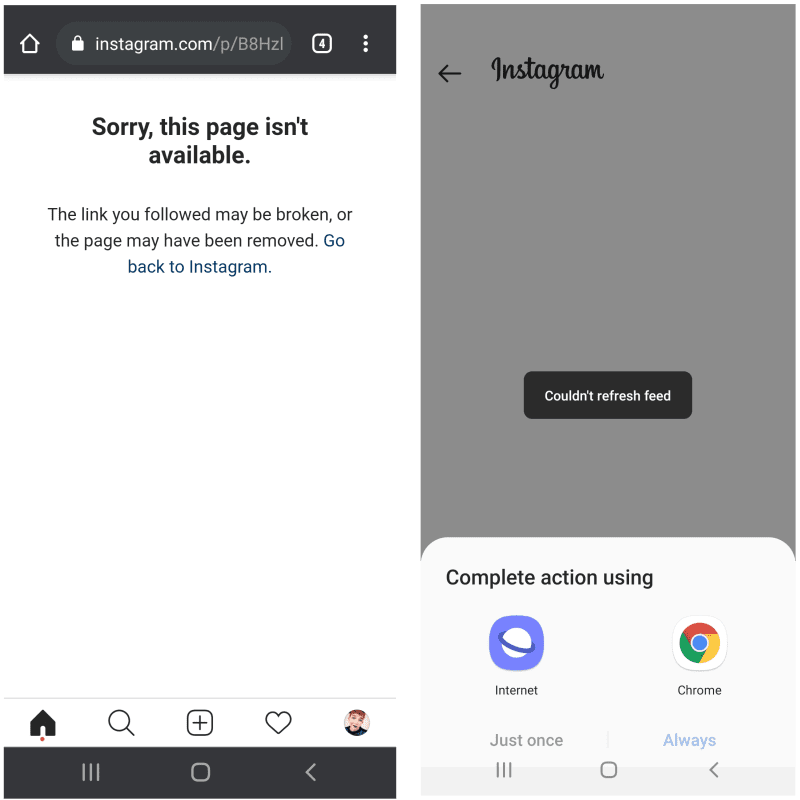

On the 24th of March, 2020, Instagram released its announcement regarding COVID-19 content and safety. Under the metric of harm, content is removed with a full-page message displaying, “Sorry, this page isn’t available,” and a link to return to the Instagram home page.

When a piece of content is removed from Instagram, the page link appears with a notice that “Sorry, this page isn’t available.” Smaller text informs users that “The link you followed may be broken, or the page may have been removed,” with a link to return to their main feed. There is no link to Instagram’s removal policies. Link: https://www.instagram.com/p/B8HzlkfhyiO/?utm_source=ig_embed

Unlike Facebook removal pages, there is no direct link provided for further information on platform policies. COVID-19 content is subject to the same implementation as other misinformation and fact-check review, with rated content hidden behind a blurred interstitial. All COVID-19 content policy on Instagram refers to Facebook. However, Instagram is more intent on downranking and removing content from Explore and hashtag networks, as compared to Facebook content reduction policies. Responsibility for guidelines and fact-checking on conspiracies and misinformation also lies primarily with the World Health Organization.

A blurred interstitial appears over COVID-19 content that has been “Checked by independent fact-checkers,” and will also indicate that this content contains “False information.” Link: https://www.instagram.com/p/CACRfgonnen/?utm_source=ig_embed

Other Expanding Labels on Instagram

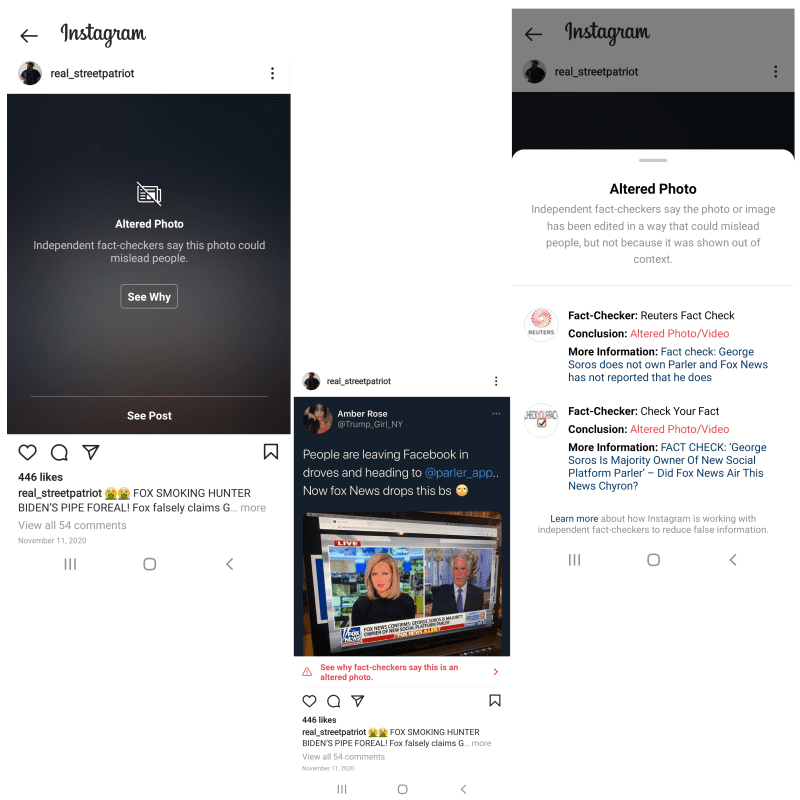

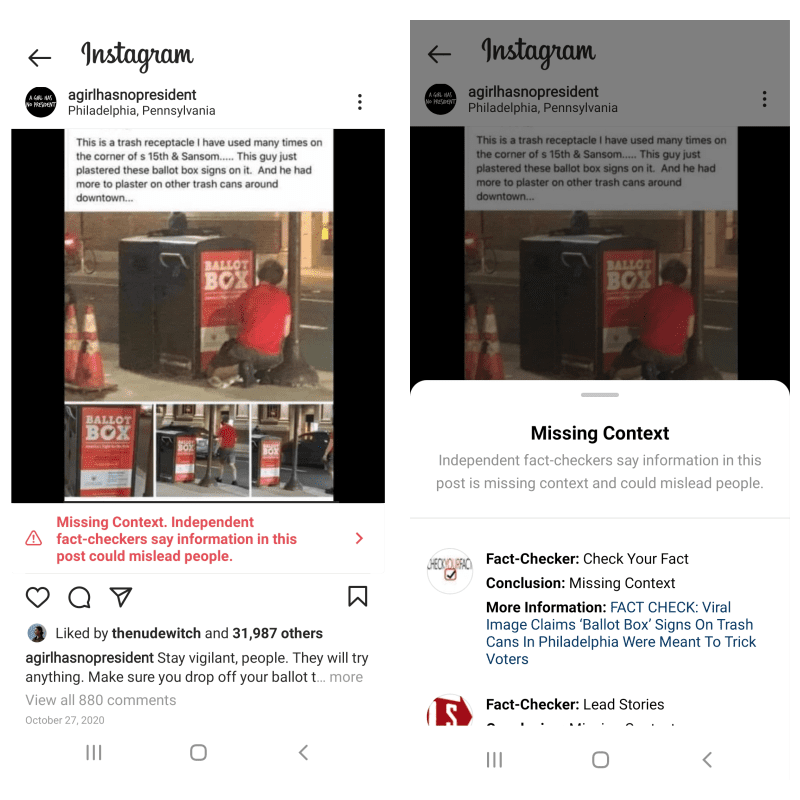

Instagram also adopted the new “Altered” and “Missing Context” labels in August 2020. “Altered” content is hidden behind a blurred interstitial with a rating on top, and click-through options to “See Why” and “See Post.” On Instagram, the “Missing Context” label appears as a banner below the content, with bright red text and click-through options for verified contextual information.

For the “Altered” label, interactive click-through content is only available to users on the Instagram mobile app. Content that has been altered in a way that can “mislead people” will have a blurred interstitial with the warning that this is an “Altered Photo” or “Altered Video.” Users can click through to view the content, or “See Why” for the verified fact-check information and a link to Facebook Inc. policies with Instagram. Link: https://www.instagram.com/p/CHds18PAkuv/?utm_source=ig_embed

For the “Missing Context” label, interactive click-through content is only available to users on the Instagram mobile app. Content rated with a banner for “Missing Context” can potentially misinform users without additional context. Users can click on “See Why” for verified fact-check information and a link to Facebook Inc. policies with Instagram. Link: https://www.instagram.com/p/CG2aethncKr/

Facebook and Instagram Research Observations

The visual designs of Facebook and Instagram have an internal consistency that is clear and recognizable by users across platforms, which is especially important as the two continue to merge together, to some degree, with regard to user interface and functionality. This includes the font type and blurred interstitials when it comes to warning labels, as well as keeping the label taxonomy analogous, such as “altered” content, and the “false” and “partly false” fact-check ratings.

These platforms approach moderation, based on the metric of “harm” to their users, by increasing friction embedded into the content itself. Whether through an interstitial evaluation or an arrow icon, Facebook Inc. presents visual indicators of misinformation and harmful content to its users. The click-through requirement on Facebook and Instagram in particular, while it may deter some users from seeking out the authoritative information, adds at least one more action that stands between users and harmful content. That, at least, appears to be the theory.

It is especially important to note the real-world events that prompted change in policy and application of content labels across Facebook Inc. platforms. Instagram picked up more moderation policies in the 2020 timeline, in synchronization with metrics of harm and viral misinformation on the Facebook platform. Overall, Facebook and Instagram both have similar functional features as social media platforms — stories, content feed, profiles, live casting — but there are key differences in utility and information sharing that should call for more defined areas of content review and evaluation.

A few examples of these differences: Facebook content can be shared within private Groups; Instagram is more heavily reliant on hashtag use; and, while both platforms can post video and image content, Facebook also has text-only content to review. The most apparent labeling design choice on Instagram is that, even when a user clicks through to view fact-check-rated content, the content warning label remains visible in the red text banner below the post. This reinforces the validity of the rating, and increases friction against a user sharing the content.

What is lacking in Facebook Inc. policy considerations is the distinction between the Instagram and Facebook platforms regarding content type and user behavior. As Facebook Inc. applies the same content labeling methods to both platforms, the appearance of their content labels also indicates a lack of transparency in how Facebook Inc. conducts evaluations and fact-checks content with appropriate contextual considerations. Because both platforms use the same visual designs, users are not provided with enough information to understand how the spread of misinformation and interaction with harmful content may be different on each platform.

The visual designs are a clear and concise implementation of labeling, fact-checking, and content moderation across Facebook Inc. They incorporate multiple actions within content before users interact with it, making it more likely that users view corrected information. However, Facebook Inc. needs to present more transparency on how it approaches the contextual implications of the platforms themselves, beyond the isolated piece of content being moderated.