Twitter Inc.

The Evolution of Social Media Content Labeling: An Online Archive

As part of the Ethics of Content Labeling Project, we present a comprehensive overview of social media platform labeling strategies for moderating user-generated content. We examine how visual, textual, and user-interface elements have evolved as technology companies have attempted to inform users about misinformation, harmful content, and more.

Authors: Jessica Montgomery Polny, Graduate Researcher; Prof. John P. Wihbey, Ethics Institute/College of Arts, Media and Design

Last Updated: June 3, 2021

Twitter Inc. Overview

The microblogging service Twitter was founded in 2006 as a platform for people to share short text posts on a public forum. Co-founder and CEO Jack Dorsey published his first Tweet on March 21, 2006: “just setting up my twttr.” In addition to 280 characters of text (formerly 140), Tweet content includes photos, videos, GIFs, links, trending hashtags and account handle tagging. Users can reply with a comment, or “retweet,” to add to the content thread. Many high-profile accounts on Twitter belong to political officials, celebrities, and journalists, who take part in a nearly instantaneous network for information, social interaction, and news.

Regarding the evaluation of content on Twitter, the platform has provided information on content labeling and removal through its page “Twitter Rules and Policies.” Whereas other platforms have provided links to fact-check websites and external information sources, Twitter has curated in-platform information pages when it chooses to moderate misinformation. An evolving variety of notices and labels curated by the platform moderators attempts to introduce friction between users and content perceived as harmful misinformation.

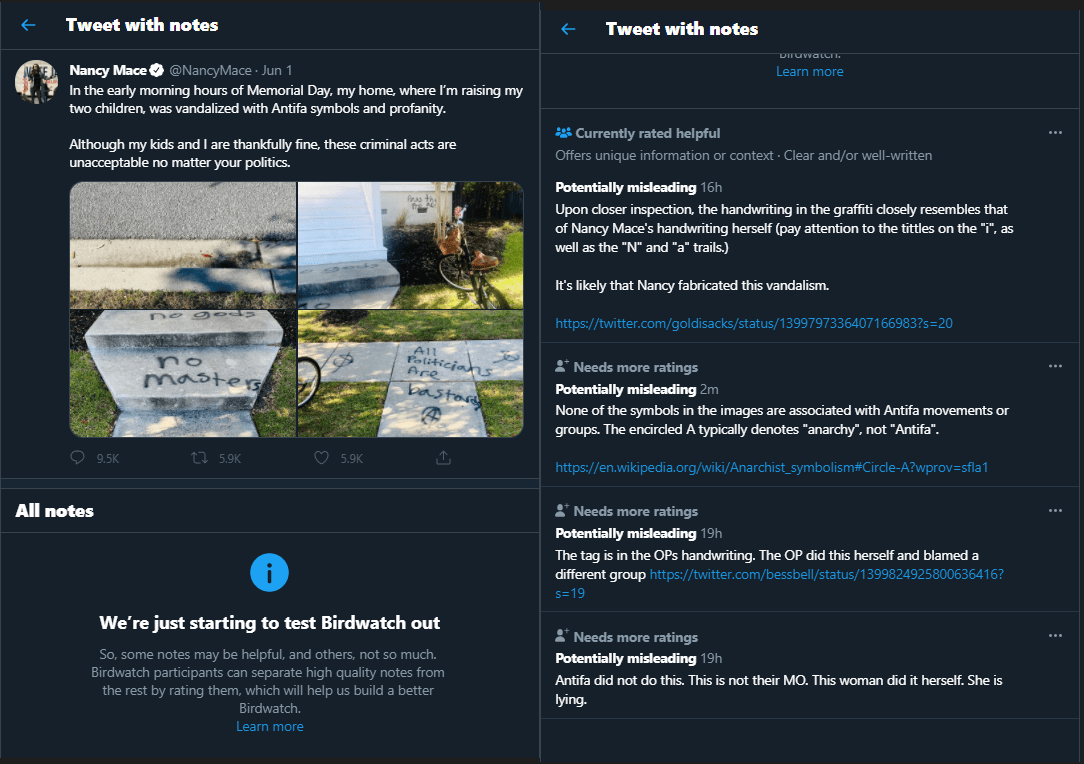

In January of 2021, Twitter also began experimenting with a citizen-led fact-check moderation initiative, known on the platform as Birdwatch. Volunteer moderators on Twitter attempt to provide informational context to misleading content, and aim to respond faster to content that is flagged within their own communities.

The following analysis is based on open source information about the platform. The analysis relates only to the nature and form of the moderation, and we do not here render judgments on several key issues, such as: speed of labeling response (i.e., how many people see the content before it is labeled); the relative success or failure of automated detection and application (false negatives and false positives by learning algorithms); and actual efficacy with regard to users (change of user behavior and/or belief).

Twitter Content Labeling

As early as 2015, Twitter’s curation style guide provided insight into how the company approaches content that contains hate speech, graphic content, and different identities and vulnerable social groups. Twitter also provided resources in “General Policies and Guidelines” on how the platform moderates synthetic and manipulated media, meaning media designed to spread misinformation and false news. Entirely synthetic media has more often been subject to removal, whereas Twitter stated in its guidelines that “subtler forms of manipulated media, such as isolative editing, omission of context, or presentation with false context, may be labeled or removed on a case-by-case basis.”

The general platform guidelines have also provided an outline of notices on Twitter, a comprehensive glossary of the labels that can provide contextual information, content warnings, or transparency. Rather than linking to outside sources or identifying fact-checkers in context and warning labels, Twitter has most often provided contextual information through an internal page, curated by Twitter, that highlights external sources. More label-centric content moderation began in 2017, when Twitter updated its policies due to growing concern about “fake news” and misinformation. The Twitter Rules address content that is synthetic, manipulated, sensitive, or spam; the action taken against such content depends mostly on whether the content is likely to cause “harm.”

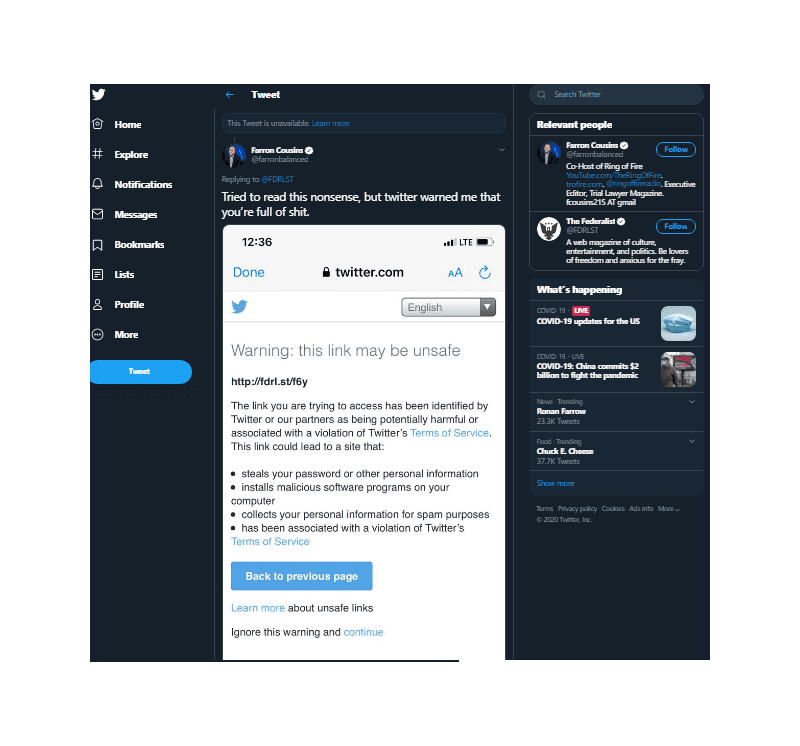

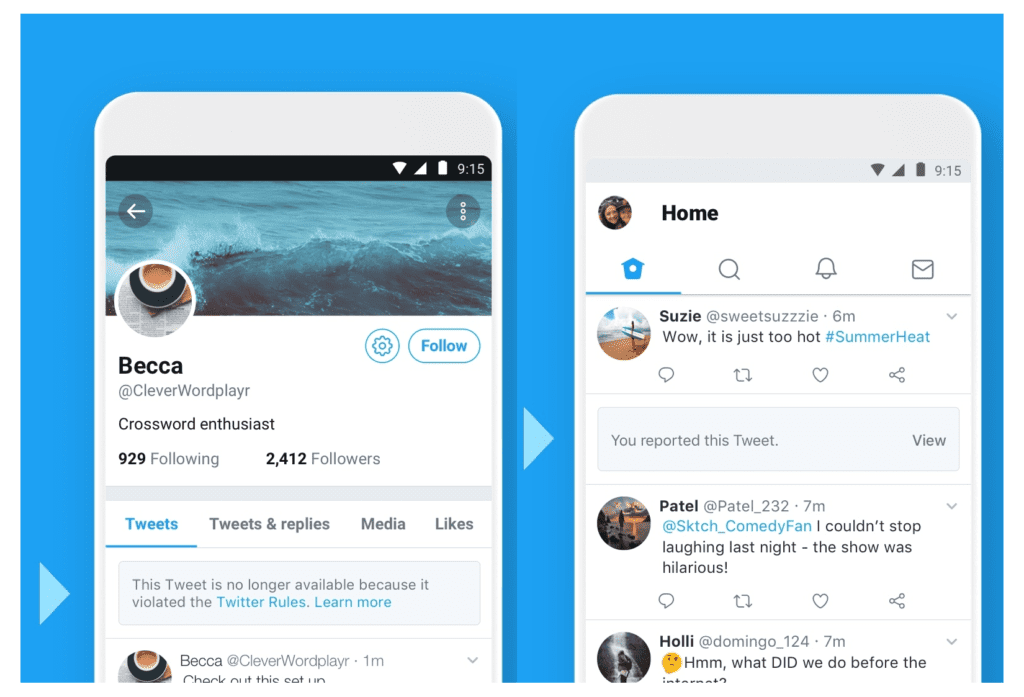

More specifically, in October of 2018 Twitter introduced its users to the particular labels applied to content that violates the Twitter Rules. When a piece of content was removed for this reason, a label replaced the Tweet with a notification that “it violated the Twitter Rules” and provided a link to these platform policies.

To uphold Twitter Inc.’s principle of integrity and protection of public conversation, the platform introduced a new notice in the summer of 2019 that indicated content of public interest. This label appeared as a warning on Tweets that violated the Twitter Rules but were posted by accounts holding an important public position, such as a government official or political leader. The aim was to inform users about misinformation and hold public speakers accountable, while allowing discourse around these prominent public figures.

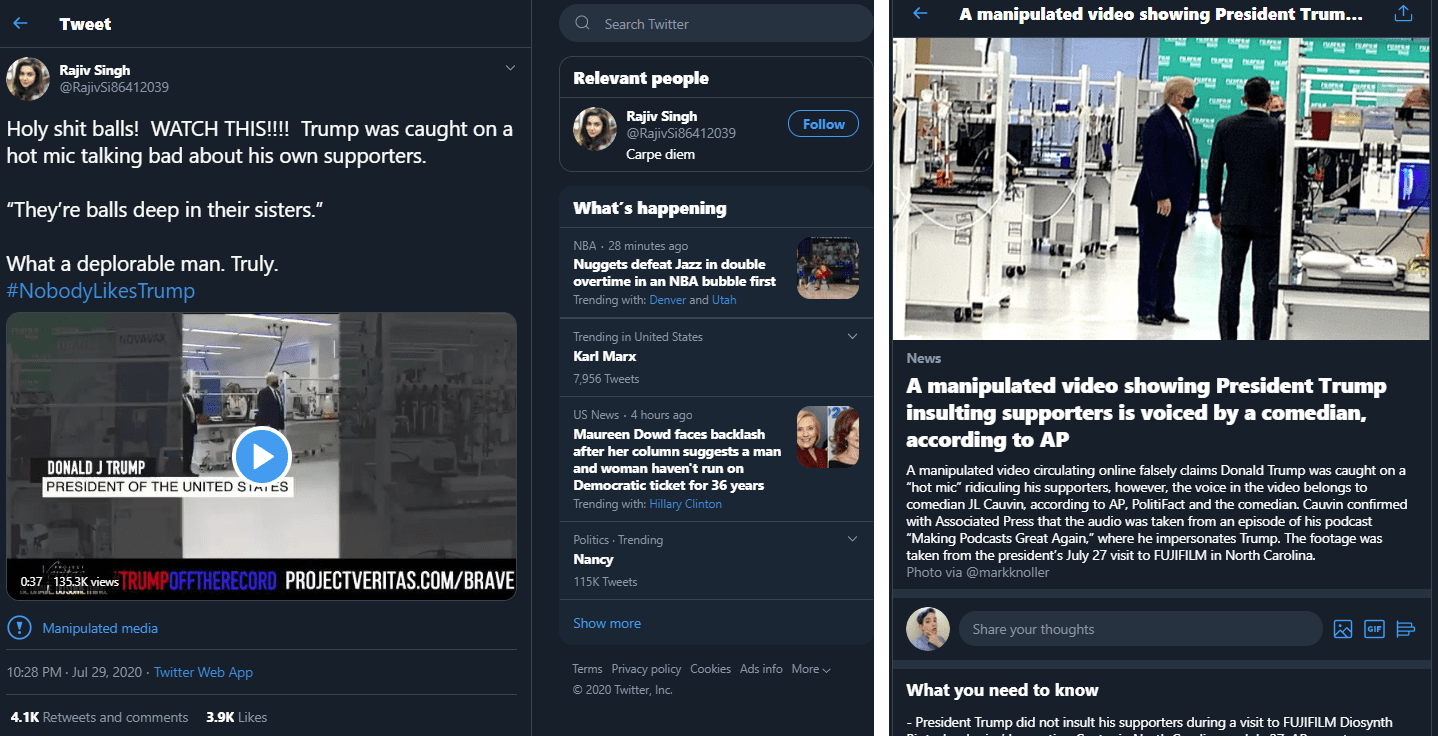

Misinformation and disinformation had also appeared on social media platforms in the form of synthetic and manipulated media, which Twitter redefined for the platform in November 2019 as “any photo, audio, or video that has been significantly altered or fabricated in a way that intends to mislead people or changes its original meaning.” Twitter began to apply “Manipulated media” labels to such content; the labels also included a link to news sources and internal Tweets that provide information on how or why the content was manipulated.

Twitter set the criteria for identifying synthetic and manipulated media and updated its content labeling approach in early 2020. The platform evaluated such media by its potential for harm, and could label or remove content by taking the actions stated in its synthetic and manipulated media policy:

- Applying a label to the Tweet

- Showing a warning to users before they Retweet or like the Tweet

- Reducing the visibility and decreasing the recommendation of the Tweet

- Providing additional explanations or clarifications for more context

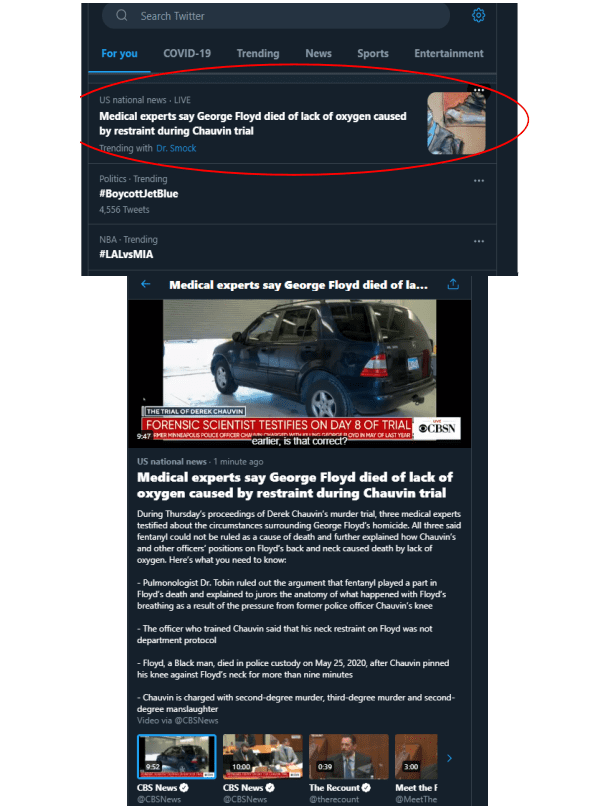

Twitter applied labels not only to Tweets and user content, but also to platform-curated content, in order to provide contextual information. “Trends” are the content that is most popular on the platform, calculated from interactions on relevant Tweets and from how often users create content on the topic, as indicated by keywords and hashtags. In September 2020, trending topics began to be labeled with additional context. Twitter pinned a “representative Tweet,” often the source of the trend or the person of interest in the trending topic, to trending items so that users would have more context on why certain topics and content were trending.

As content labeling policies evolved on Twitter, so did strategies for moderation capacity. In January of 2021, Twitter announced a pilot moderation approach, the Birdwatch initiative, which enlists community members to correct misleading information. The Birdwatch community of volunteer citizen moderators operated on a separate website, a live archive of threads in which Birdwatch members comment on and retweet misinformation corrections. The idea was that these volunteers were watching the trends and communities on Twitter more closely, and so their response to misinformation and harmful content would be faster than that of the platform moderators who oversee Twitter in its entirety.

Twitter and the 2020 U.S. Elections

Twitter prohibited sponsored political content on the platform. The rules and policies distinguished between politically sponsored content, which was prohibited, and political content discussed by sponsored news organizations, which was allowed. Email notifications about disinformation, rather than in-platform labels, were the initial method of moderation. To address disinformation around the U.S. 2020 elections, Twitter built on an approach that began in 2016 with email notifications to users who had interacted with propaganda content curated by Russian government-affiliated profiles. Then in 2018, Twitter conducted another investigation, which again resulted in email notifications to users.

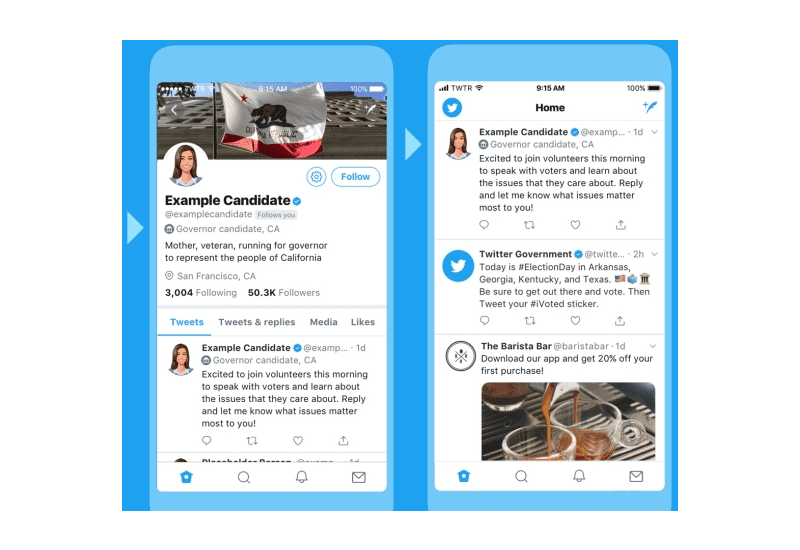

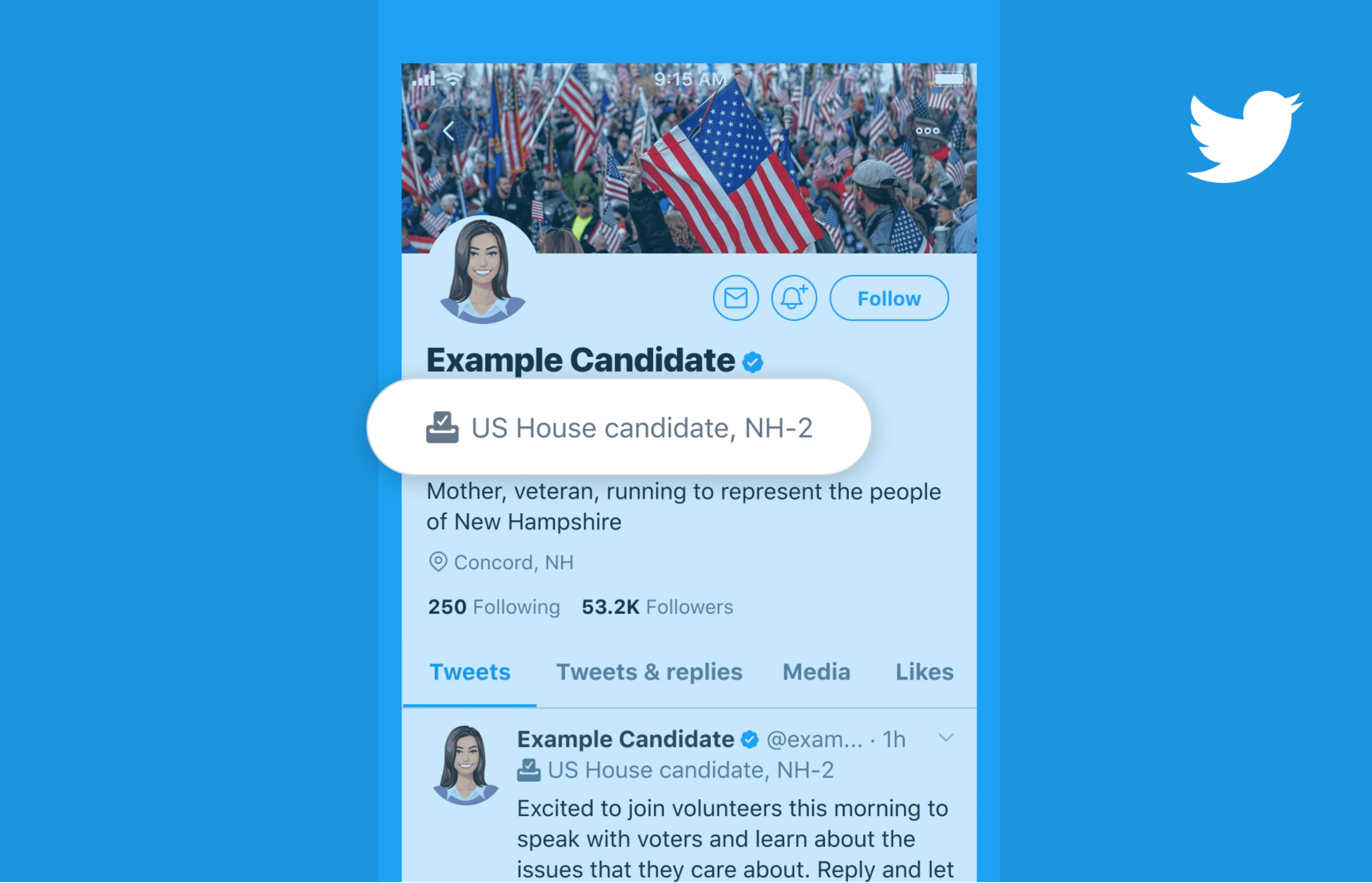

The implementation of election-specific labels began with candidate labels, introduced in May of 2018. The labels applied to “candidates running for state Governor, or for the US Senate or US House of Representatives, during the 2018 US midterm general election.”

These election candidate labels continued in 2019 ahead of the 2020 Presidential election. With increases in media coverage and virality for presidential candidate topics, Twitter partnered with a third party, Ballotpedia, to evaluate and verify candidate eligibility and office information.

Twitter defined state-affiliated media as “outlets where the state exercises control over editorial content through financial resources, direct or indirect political pressures, and/or control over production and distribution.” Twitter discontinued platform recommendations for such accounts and, starting in August of 2020, applied notices to Tweets and profiles indicating state-affiliated media. The hope was that users would be able to discern when a piece of content was published or curated by a state entity; Twitter implemented this transparency to provide voters and civic actors with more contextual information.

As the election drew nearer, Twitter released more content labeling updates in September 2020. At the time, Twitter was already removing content that fell under one of the following categories:

- False or misleading information about how to participate in civic processes

- Content that could intimidate or suppress participation

- False affiliation

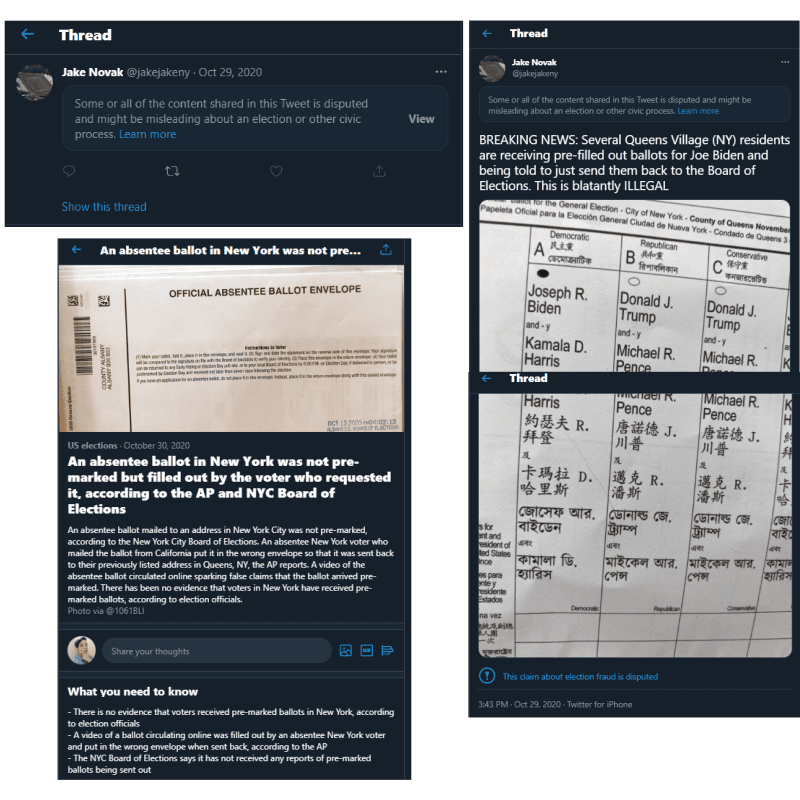

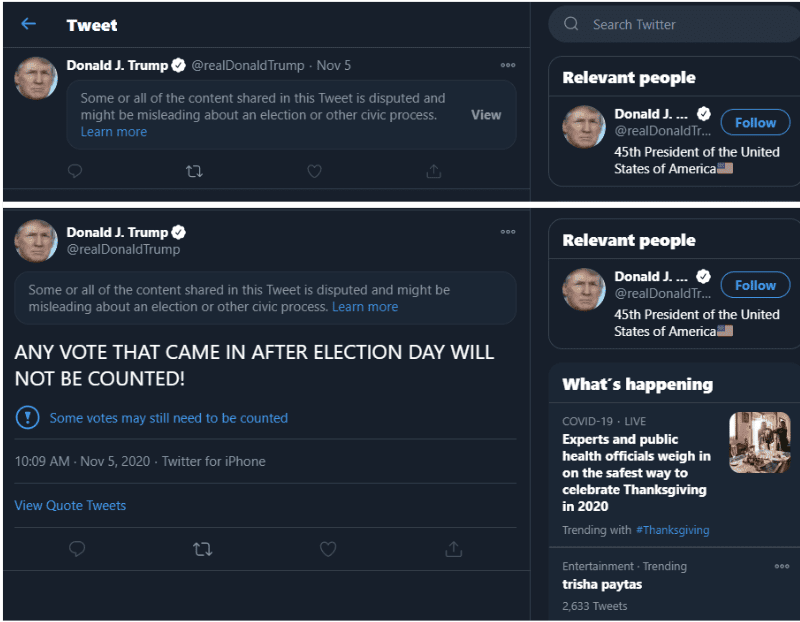

In the update, Twitter announced it would begin to apply labels to misinformation on civic engagement and the election, for content that was considered less harmful but was still “non-specific, disputed information that could cause confusion.”

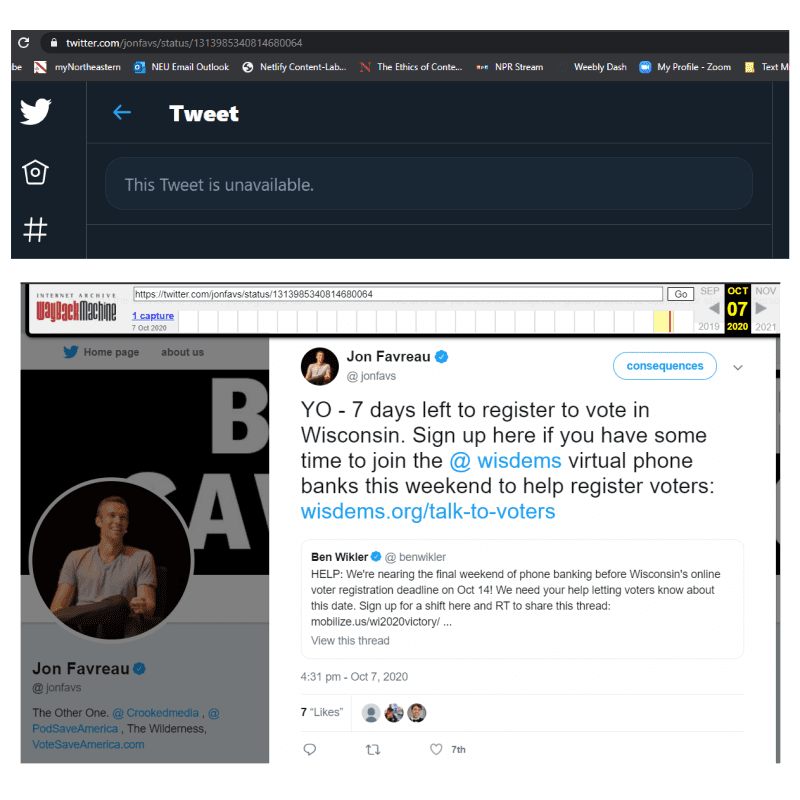

Link: https://twitter.com/jakejakeny/status/1321900543451799559

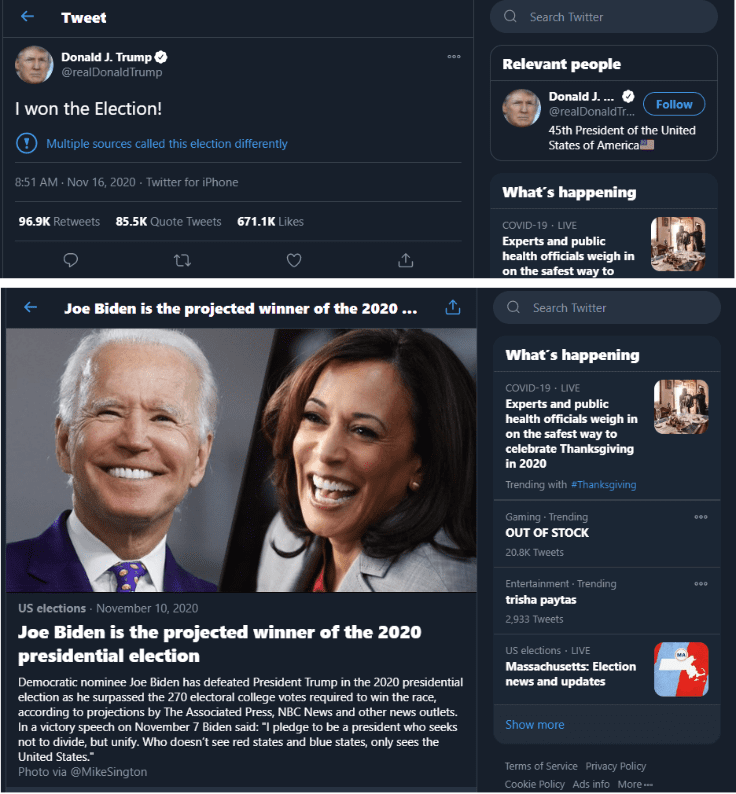

Approaching the 2020 elections, Twitter introduced additional steps on civic policies and content moderation. According to the guidelines in these updates, Twitter attempted to remove any content that may “manipulate or interfere in elections or other civic processes.” If any government official, candidate, or other person of public interest made a premature claim about election results, Twitter would apply a label linking to authoritative sources on in-platform pages.

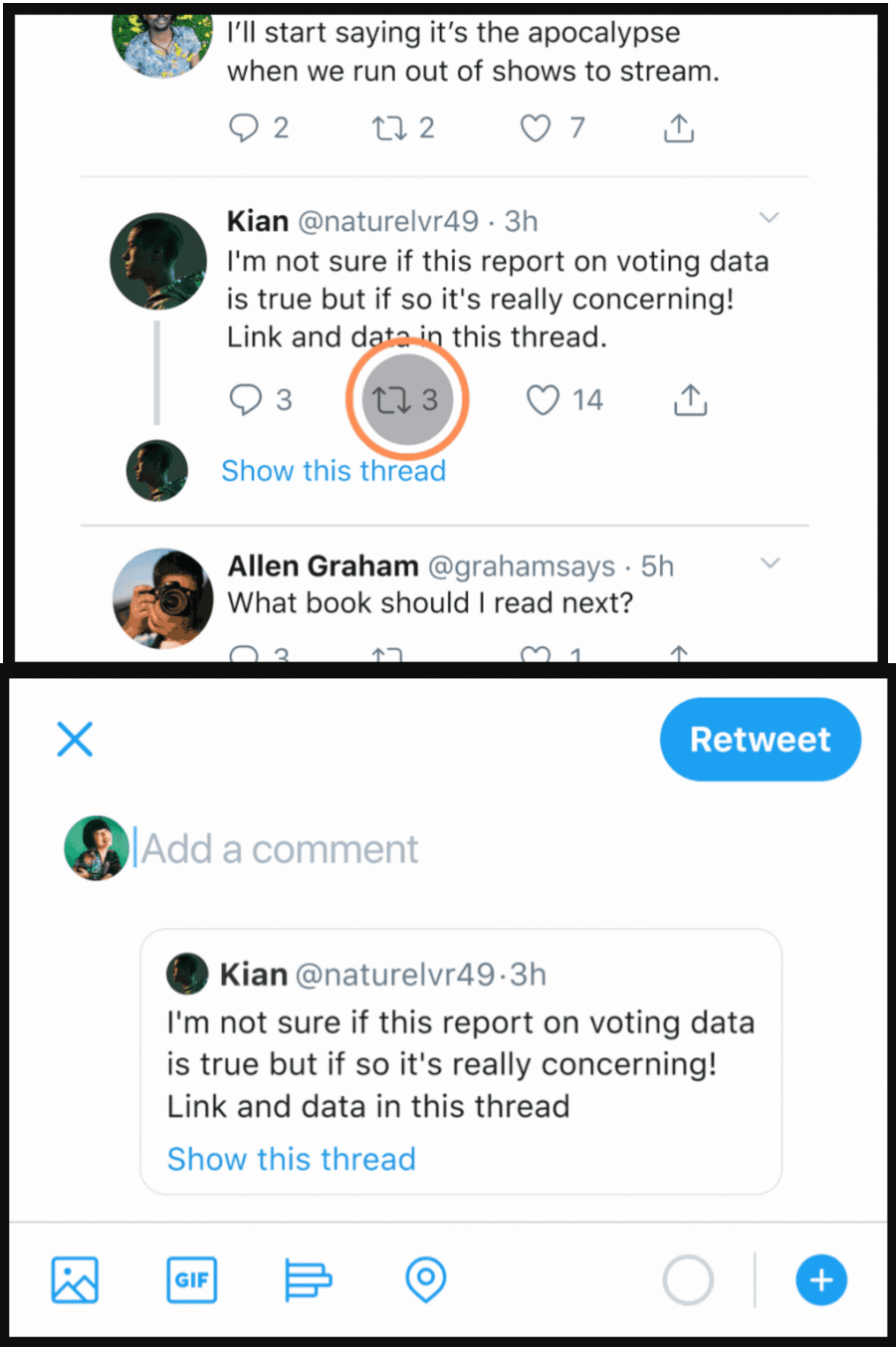

Twitter also expanded the new prompt for sharing Tweets, which encouraged users to “Quote Tweet” with their own insight and commentary rather than sharing a Tweet or article without context, in an attempt to slow down viral misinformation. This was in addition to other automated measures that prevented likes, shares, and recommendations from circulating from accounts outside of a user’s network.

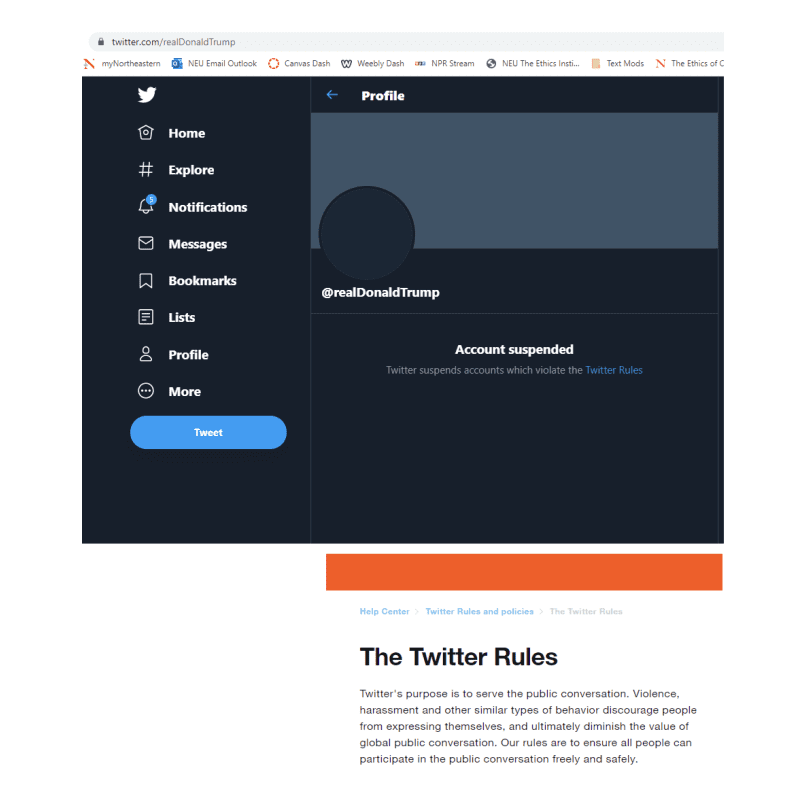

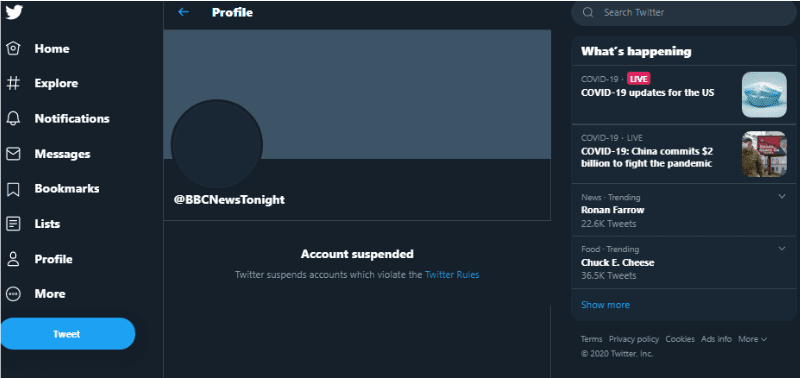

Throughout the 2020 U.S. elections, the social media activity of then-President Donald J. Trump generated viral discussion regarding First Amendment rights, the rights that platforms have over their content, and his particular content strategy. Labels had been applied to a number of his Tweets for violating the Twitter Rules, with the Tweets treated in the context of his being a person of public interest. Following the riot at the U.S. Capitol on January 6, 2021, Twitter made a formal announcement on January 8 regarding the permanent suspension of @realDonaldTrump “due to the risk of further incitement of violence” after numerous Tweets that had been interpreted as violating the policy prohibiting the glorification of violence. While Twitter had suspended numerous accounts in connection with U.S. politics (such as in its coordinated efforts against Russian bots and QAnon), this was the first instance of suspending a U.S. government official’s account, and it was especially notable because of the status of the office in question.

Twitter and COVID-19

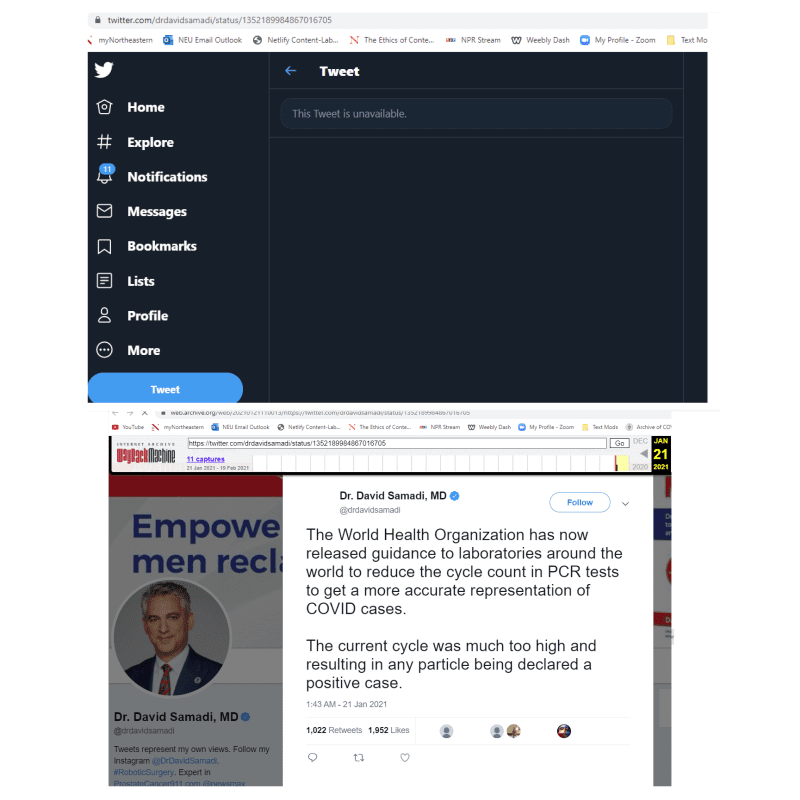

Twitter responded to COVID-19 with policy updates outlined in its blog post, “Coronavirus: Staying safe and informed on Twitter,” published in March of 2020. The company stated that these changes would include “broadening our definition of harm to address content that goes directly against guidance from authoritative sources of global and local public health information.” For re-evaluation of content under this change, Twitter provided a list of prohibited behaviors and content that fell within its definition of harm, such as “specific and unverified claims that incite people to action and cause widespread panic, social unrest or large-scale disorder” and “description of alleged cures for COVID-19, which are not immediately harmful but are known to be ineffective, are not applicable to the COVID-19 context, or are being shared with the intent to mislead others, even if made in jest.” Twitter also updated its misinformation evaluation as it pertains to misleading information, disputed claims, and unverified claims. Twitter continued to moderate content internally, and to provide information through Twitter-curated pages that host relevant articles and recommend Twitter accounts such as those of the WHO and CDC.

The initial content moderation enacted around these policy updates was content removal, as Twitter’s COVID-19 strategy update at the start of April 2020 explained. Twitter aimed to “prioritize removing content when it has a clear call to action that could directly pose a risk to people’s health or well-being,” and would depend on user reporting in addition to its own content review.

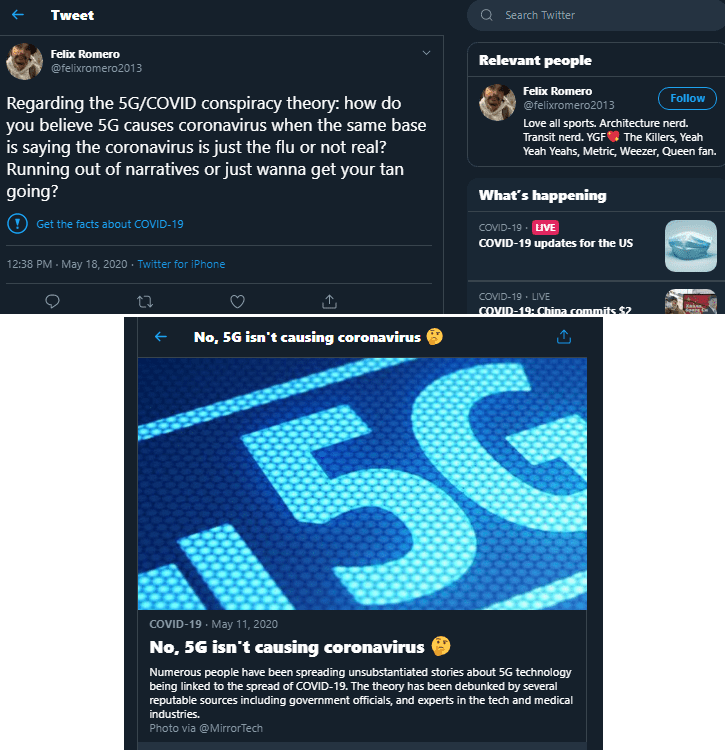

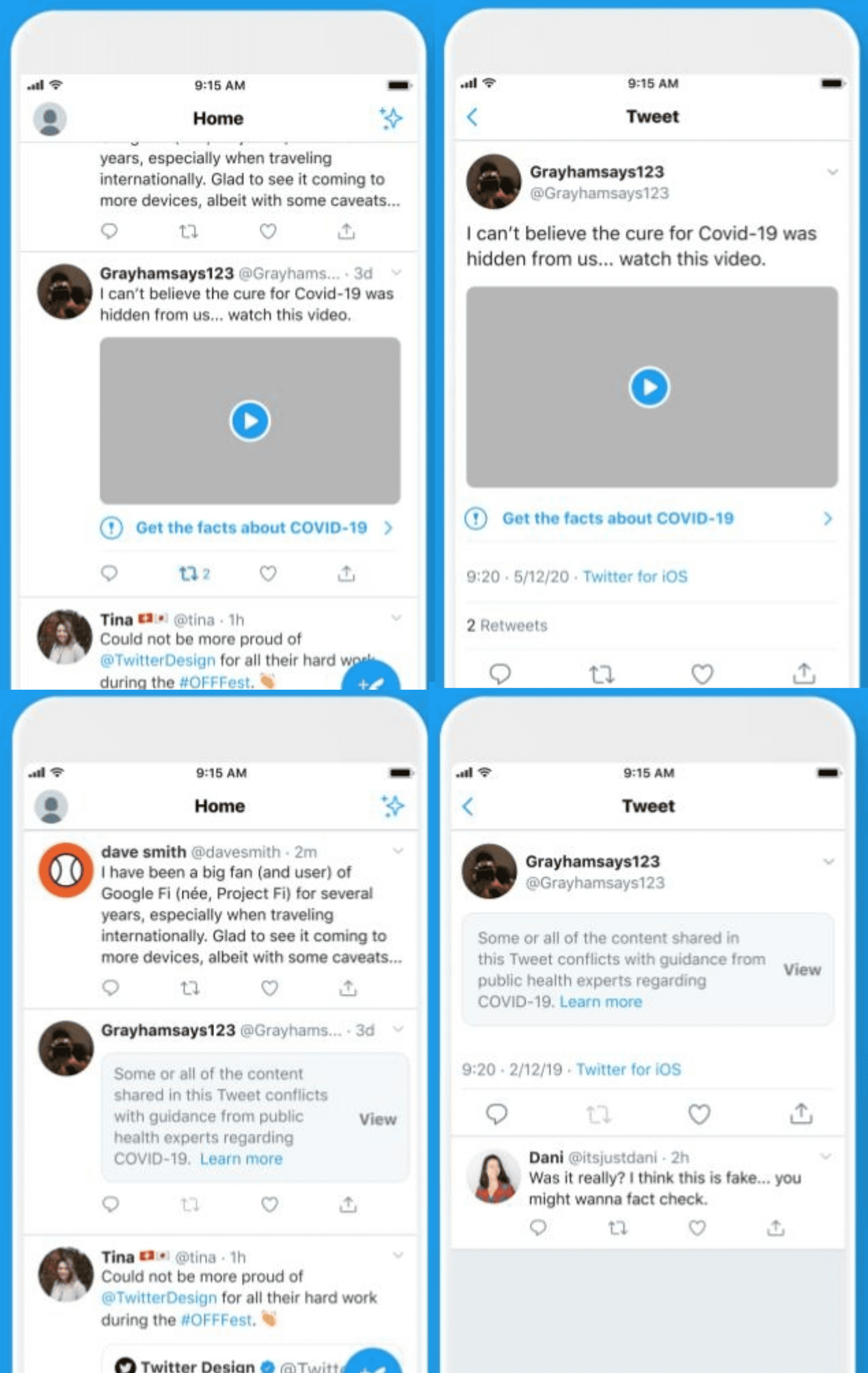

In May of 2020, Twitter announced another COVID-19 update on the platform’s blog regarding misleading information. This is when content labeling specifically for COVID-19 was introduced on Twitter. Content warnings appeared over misleading information, with a panel displaying “Get the Facts about COVID-19.” Users could follow the link to a Twitter-curated page with accurate information on the Twitter conversation and further outside links to reliable sources. For content reviewed as more likely to cause harm, however, labels appeared over the content to warn users of misinformation.

In this announcement, Twitter clarified the criteria of harm for all of its platform content, not just COVID-19. The company’s approach to misleading information is guided by three narrowed categories of potentially harmful content, as stated in the announcement:

- Misleading information — statements or assertions that have been confirmed to be false or misleading by subject-matter experts, such as public health authorities.

- Disputed claims — statements or assertions in which the accuracy, truthfulness, or credibility of the claim is contested or unknown.

- Unverified claims — information (which could be true or false) that is unconfirmed at the time it is shared.

Twitter Research Observations

Twitter has provided relatively high transparency in terms of the policies behind content labeling and removal, even if implementation is often disputed and controversial. However, these policies were ambiguous as to Twitter’s relationship with third-party fact-checkers, both in conducting fact checks and in possibly influencing the evaluation criteria for harmful content. Although Twitter did “rely on trusted partners to identify content that is likely to result in offline harm,” as stated in its misleading information update, the platform policies on misinformation do not publicly identify these partners or the internal administrators responsible for labeling and fact-checking.

Information about content criteria on Twitter has been presented in public guidelines and policies, which differentiate, for example, the various forms of manipulation and spam. The criteria for identifying synthetic and manipulated media were followed by a comprehensive list of actions taken on Twitter to either label or remove content. As mentioned, with regard to regulating U.S. 2020 election content, Twitter had a strict prohibition against sponsored political content. This reduced the moderation capacity required to oversee sponsored content of a political nature.

The platform’s response to COVID-19 content in particular was notable. For re-evaluation of content under the additional policy modifications, Twitter provided a list of prohibited behaviors and content that falls within its definition of harm. It also further refined misinformation evaluation, as it pertains to misleading information, disputed claims, and unverified claims.

The primary concern with Twitter may be the increasing complexity of its content moderation strategy. It is not hard to imagine a casual or new user struggling to navigate and understand the Twitter-notice lexicon as a whole. Having labels that are specific to certain types of content may increase the friction against harmful misinformation, because the actionable options and authoritative sources are more detailed and updated within Twitter. However, it would be difficult to understand the context of why different labels are applied, unless a user decides to read through all of the notice policies and platform updates regarding content moderation and labeling. This may deter the user from gathering the full context necessary to understand the label application.