YouTube (Google LLC)

The Evolution of Social Media Content Labeling: An Online Archive

As part of the Ethics of Content Labeling Project, we present a comprehensive overview of social media platform labeling strategies for moderating user-generated content. We examine how visual, textual, and user-interface elements have evolved as technology companies have attempted to inform users about misinformation, harmful content, and more.

Authors: Jessica Montgomery Polny, Graduate Researcher; Prof. John P. Wihbey, Ethics Institute/College of Arts, Media and Design

Last Updated: June 3, 2021

YouTube Overview

In 2005, three developers (Steve Chen, Chad Hurley, and Jawed Karim) launched the video-sharing site YouTube. As they stated in the first company blog post, YouTube set out to be “the digital video repository for the Internet.” The website hosts user-uploaded videos that viewers can like and comment on, and users can subscribe to a publisher’s channel to be notified of new content. The year after the platform’s official launch, it was acquired by Google.

The platform offers a variety of functions and features for hosting and promoting video media. Artists and music companies stream music and release music videos; news channels and government organizations post and livestream important broadcasts; a myriad of video bloggers, or “vloggers,” produce content on special interests and entertainment; and many other types of media intersect on the platform, such as video podcasts and compilations of clips from platforms such as Vine and TikTok. Advertisers can also run ads on videos, which generates revenue for both content creators and YouTube.

Removal has been YouTube’s longstanding solution to misinformation and policy violations, along with punitive measures such as the platform’s “strike” system, which limits the violator’s presence on the platform. Some labels, referred to as “panels,” provide additional context for certain topics. However, barriers that limit user interaction with labeled content, such as interstitials and click-throughs, are not typically implemented alongside the panels.

The following analysis is based on open source information about the platform. It addresses only the nature and form of the moderation; we do not render judgments here on several key issues, such as the speed of labeling response (i.e., how many people see the content before it is labeled); the relative success or failure of automated detection and application (false negatives and false positives by learning algorithms); and actual efficacy with regard to users (change of user behavior and/or belief).

YouTube Content Labeling

The YouTube Policies and Safety Guidelines addressed the moderation of content subject to removal, such as violence, nudity, hateful content, and content unsafe for minors. The guidelines also provided for the removal of misleading and harmful content in the form of “spam, misleading metadata, and scams” and “impersonation.” A policy violation resulted in a “strike” under a punitive system applied in three stages within a 90-day period, which could lead to content or channel removal.

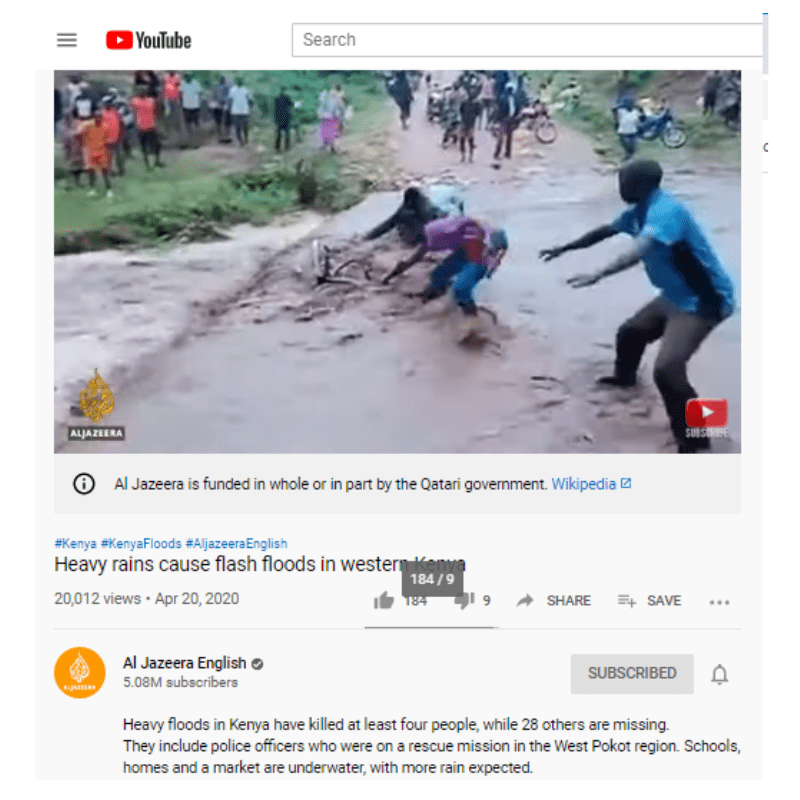

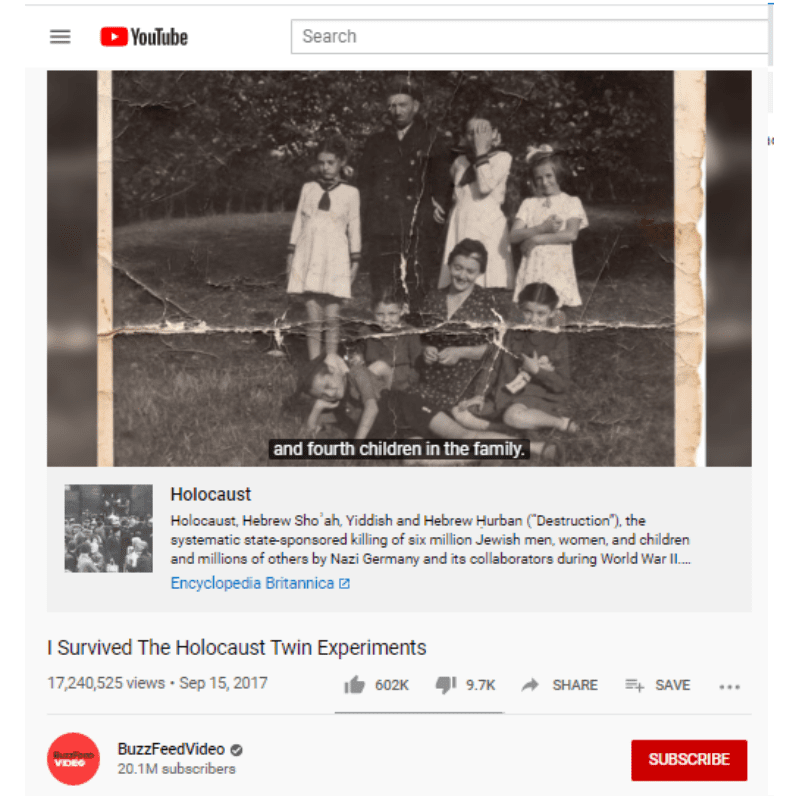

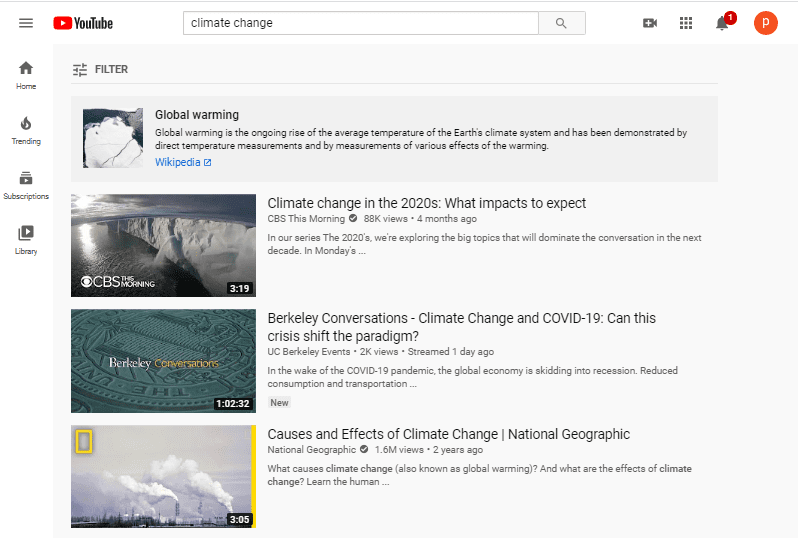

As mentioned, YouTube attempts to correct misinformation with contextual information through topical panels. Misinformation is evaluated broadly with regard to the nature and virality of topics, rather than by fact-checking individual posts and content. Context panels were applied to content on topics that the platform deemed vulnerable to misinformation. Publisher context, by contrast, applied to the channels that produce content on the platform rather than to topics: a video received a publisher context label if its channel as a whole was identified as belonging to a certain category of media publisher, such as state-sponsored media.

Widespread content moderation on YouTube expanded in 2012 with a user-moderator program. The “Trusted Flagger Program” used volunteer moderators to flag videos, rather than relying solely on general user reporting. YouTube content moderators then reviewed these flagged videos against YouTube’s Community Guidelines.

In September 2016, YouTube attempted to provide more transparency about this moderation program in a blog post, “Growing our Trusted Flagger program into YouTube Heroes.” The post explained that volunteer moderators in the new “YouTube Heroes” program would not only review violating content but also provide authoritative information by answering questions in the help forums and producing informative videos on YouTube tools and metrics.

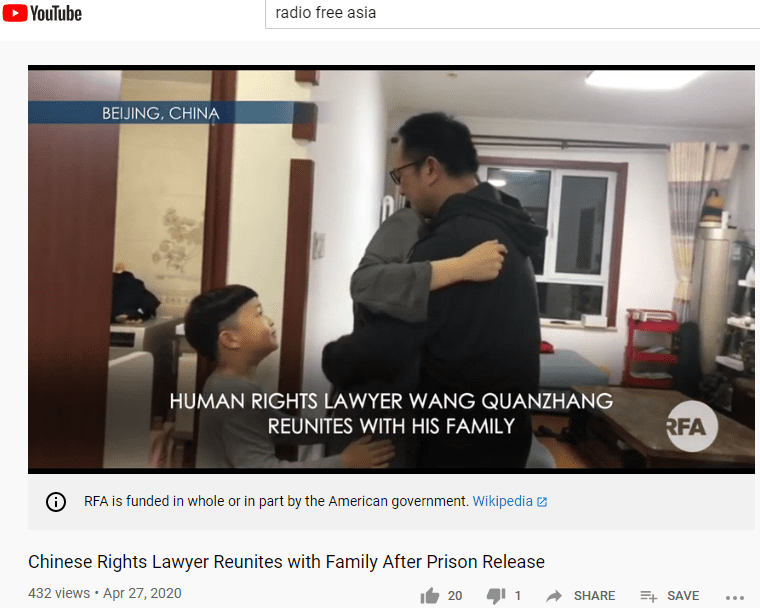

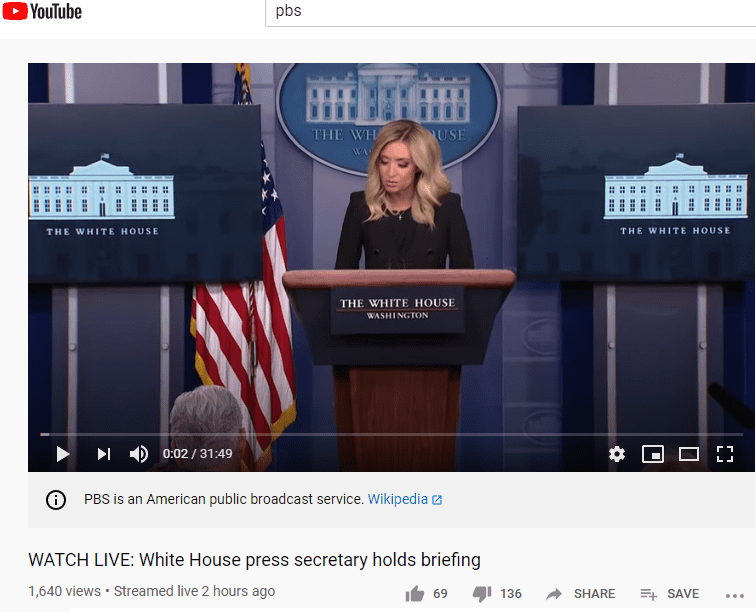

The platform’s updates in 2018 focused on transparency around content publishers. In a February 2018 note, YouTube announced new context label notices to indicate “government or public funding” behind certain news publications and broadcast channels posting videos on the platform. The YouTube Help page, “Information panel providing publisher context,” explained how the notice gives users information on these channels and stations drawn from online resources such as Wikipedia.

In September 2019, YouTube began a campaign called “the Four Rs of Responsibility” regarding content moderation on the video-sharing platform. Part 1 of the blog series focused on the removal of “harmful content,” and the company touted efforts to speed up the removal of reported content and videos that violate community guidelines.

In December 2019, YouTube published another post in its content moderation series, “The Four Rs of Responsibility, Part 2,” which articulated the goal of “providing more context to users” through topical context panels. These panels targeted content that touched on misinformation-prone topics and included third-party links to information resources that gave further context on the topic. The YouTube Support section on topical context panels goes into further detail, describing the targeting of “well-established historical and scientific topics that are often subject to misinformation” for context labels. However, YouTube provided no criteria explaining how it determined which topics were vulnerable. YouTube’s primary criterion for authentic and truthful information was the validity of the authority providing it, but YouTube policy offered no criteria for the “signals to determine authoritativeness.”

Link: https://www.youtube.com/watch?v=gdgPAetNY5U

On April 28, 2020, YouTube announced new fact-checking features in a blog post, “Expanding fact checks on YouTube to the United States.” The announcement explained that fact checks would appear in search results on the platform for queries on topics with a “relevant fact check article available from an eligible publisher” that had debunked the information being searched. The YouTube Help page on fact checks in search results explains that the “independent fact check” label will include information on:

- The name of the publisher doing the fact check

- The claim being fact checked

- A snippet of the publisher’s fact check finding

- A link to the publisher’s article to learn more

- Info about the publication date of the fact check article

In December 2020, YouTube published an article explaining the platform’s new focus on removing hate speech and “harmful” content from video comment sections. However, nothing indicated that video comments would be subject to fact checks or content labels for misinformation.

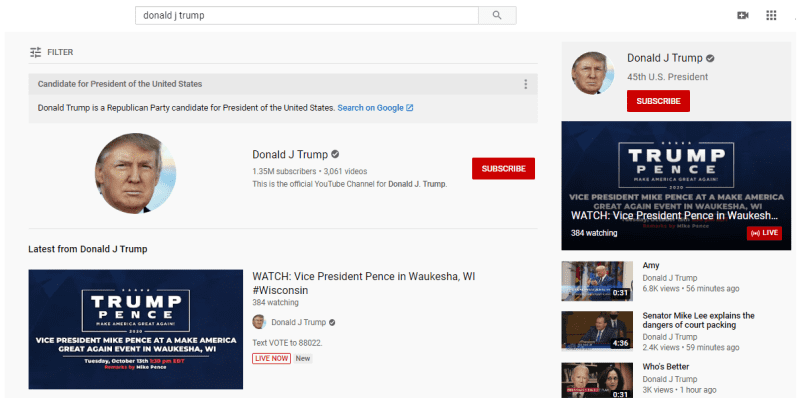

YouTube and the 2020 U.S. Elections

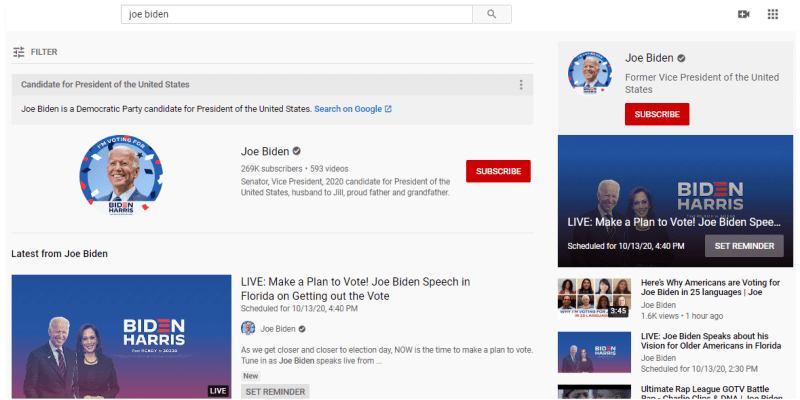

YouTube directly addressed content moderation around civic processes in a February 2020 blog post, “How YouTube supports elections.” First, the company said it aimed to remove content that misinforms users about civic processes. YouTube also announced that it would expand its information panels to increase transparency and context for platform users around the U.S. elections. Publisher context on state-affiliated media channels would be applied to videos in more countries, and information panels would appear for election candidates. These panels, as the full YouTube Security & Election Policies commitment explained, aimed to “provide additional context related to the elections – including information on candidates, voting, and elections results.”

In August 2020, YouTube published a blog post about its ongoing content moderation policies for the election and other civic topics. The company would continue to remove content that violates platform policies, particularly content that encourages people to interfere with democratic processes. YouTube would also expand its fact-check panels for election-specific information and add new “about the candidate” information panels. According to the post, search results for election candidates provided information on “party affiliation, office and, when available, the official YouTube channel of the candidate.”

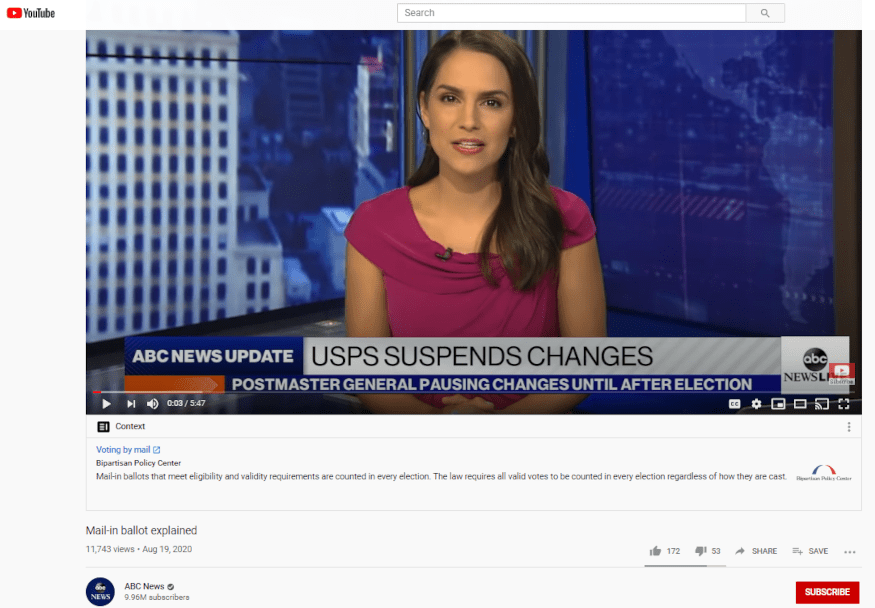

In addition to providing users with context and information on candidates, YouTube announced further content labeling measures for civic processes in a September 2020 blog post, “Authoritative voting information on YouTube.” Context information panels on videos expanded to cover civic engagement topics subject to misinformation, particularly voting by mail. Alongside the candidate search panels, YouTube also introduced panels with information on voter registration and participation. When users searched for voting information on the platform, the panel’s click-through link led to a Google page with state-by-state information on registration and deadlines.

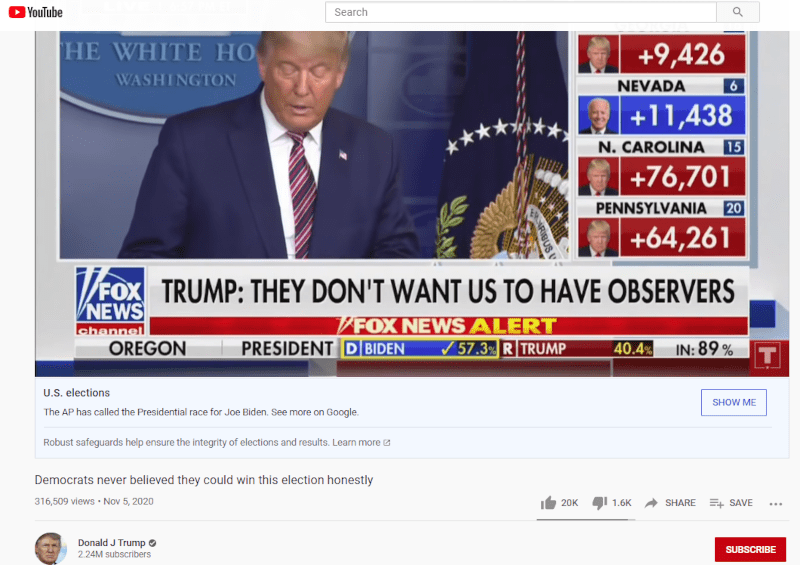

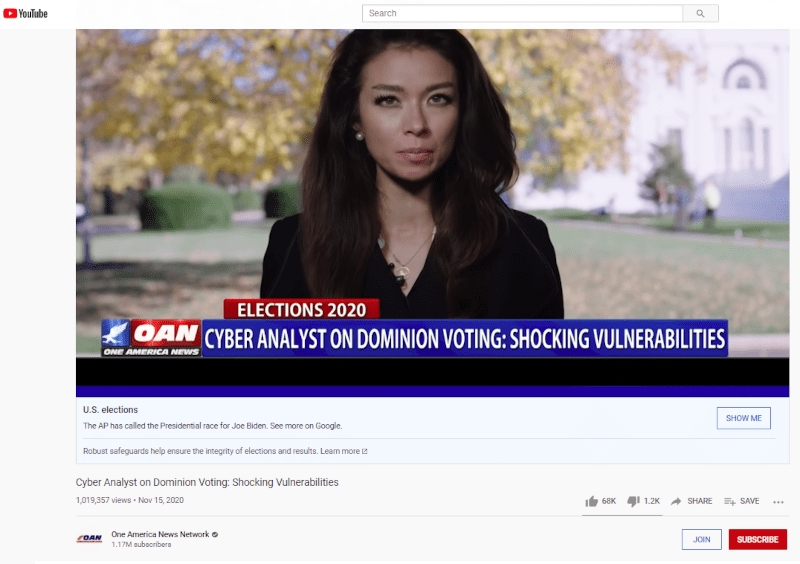

As Election Day drew nearer, YouTube added a new content label for misinformation about election results. In an October 2020 blog post, “Our approach to Election Day on YouTube,” YouTube announced that the platform would display search panels on election results and add context panels to videos that mislead or misinform users about election outcomes.

At the end of 2020, YouTube rolled back many of the labeling policies it had introduced in response to real-world events. In December 2020, the YouTube blog post “Supporting the 2020 US election” shifted the focus of content moderation from political topics to the removal of content that violates community guidelines. To update its authoritative information, YouTube changed all information panels on election topics to show “2020 Electoral College Results” with a link to the Office of the Federal Register, instead of a Google-curated page. Soon after President Joe Biden was sworn into office, information panels and notices on political topics became less prominent on YouTube.

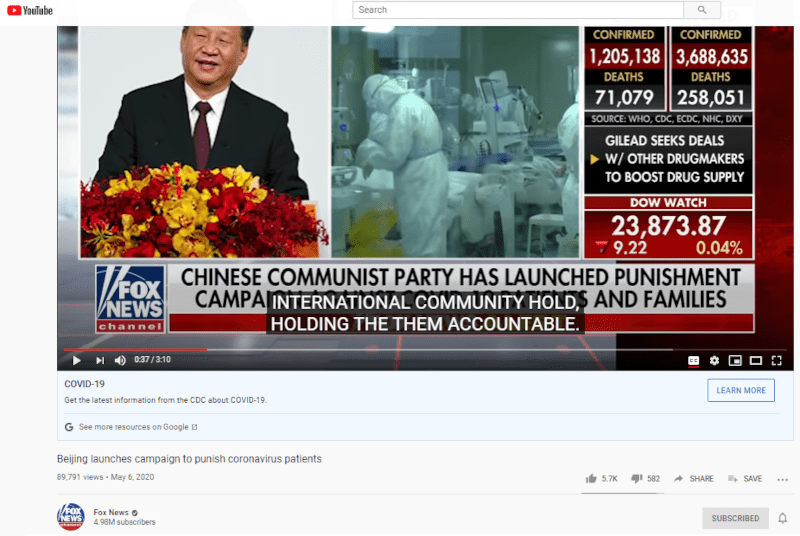

YouTube and COVID-19

When COVID-19 became a prevalent concern in the United States in March 2020, YouTube published a blog post about COVID-19 support and resources for content creators. The COVID-19 Medical Misinformation Policy, available on YouTube Help, outlined COVID-19 content moderation in four areas of health information: treatment, prevention, diagnosis, and transmission. The policy provided a definition and examples of each type of health content subject to removal. The blog post also announced new labels: health information panels applied to content related to COVID-19. These panels provided information from government agencies, health organizations, and health experts.

During the COVID-19 pandemic, YouTube adjusted its information panels to track the most relevant topics regarding the virus. In March 2021, YouTube announced new information panels for the COVID-19 vaccine. These panels would focus on providing “information about the development, safety and efficacy of the vaccines,” rather than on personal hygiene and diagnostic facts. Overall, COVID-19 misinformation appeared more likely to be removed from YouTube than to receive a topical information panel. The blog post also stated that the platform had “removed over 850K videos for violating these policies, including more than 30K since the last quarter of 2020 that specifically violated our policy on COVID-19 vaccine misinformation.”

YouTube Research Observations

Two factors relating to YouTube’s label implementation appear to bear on how users interact with platform content. First, the lack of transparency and information about how the platform evaluated certain types of misinformation compares unfavorably with the other platforms studied in this research project. Second, while the information panels provided authoritative sources, they created little friction between YouTube users and misinformation content.

YouTube implemented several types of content moderation tactics: publisher context panels, topical context panels, and the “strikes” system or removal under guidelines for harmful content or spam. But many of the criteria for identifying content subject to a fact check or label are unclear. YouTube’s policies for topical context panels did not explain what triggers a fact check or how “topics that are often subject to misinformation” are identified.

The platform’s policies often used vague language to define regulated content. For example, the description of harassment and bullying on the YouTube Policies and Safety page stated that posts that were “mildly annoying or petty … should be ignored.” While the full policy does provide examples of harassment subject to removal, the threshold at which content moves beyond “mild” and becomes subject to removal appears to rest on moderators’ discretion rather than on a clear set of criteria.

Labeling on YouTube focused primarily on providing truthful information, as determined by the validity of the authority that provided it. But the platform made no criteria available for the “signals to determine authoritativeness,” as stated in its brief evaluation methodology for fact checks in search results. Although criteria for content subject to removal were more readily available, along with examples, the policies addressing content labeled or removed as misinformation were vague. This raises the concern that YouTube applied labels arbitrarily or even, especially in the eyes of those already inclined to distrust social media platforms, discriminatorily.

Real-world events evidently prompted more thorough policy transparency on the platform. YouTube’s policies for moderating COVID-19 content set out more detailed criteria than its other policies, across their four areas of health information. These policies provided a definition for each type of health content, with a comprehensive list of examples. YouTube was responsible both for removing violating content and for applying COVID-19 context labels to all videos that discuss these health topics in any capacity. The pressure to respond to a real-world event at such a rapid pace appears to have demanded a higher standard of policy transparency from YouTube.

In spite of this area of successful development, all of the labels were visually and functionally minimal when it came to protecting users from harmful misinformation. Links to authoritative sources in the panels only went so far, because there were no interstitials or click-through components to add friction against interacting with the content in question. While providing context is an important aspect of content labeling, greater barriers that impede immediate and potentially viral interactions reduce harm more effectively.