By Carolina Mattsson

This post describes research supported by a NULab travel grant.

Back in February, I had the privilege of attending Social Science Foo Camp, a flexible-schedule conference hosted at Facebook HQ where questions of progress in the age of Big Data were a major topic of discussion. It turns out I had a lot to say! But it wasn’t until I was there, having those conversations, that I noticed the power and coherence of the ideas that underlie the NULab’s focus on computational social science and digital humanities. It’s easy to know what successful digital integration looks like for a discipline when you’re surrounded by superb examples like Viral Texts and #HashtagActivism… but it turns out that it is not so clear otherwise.

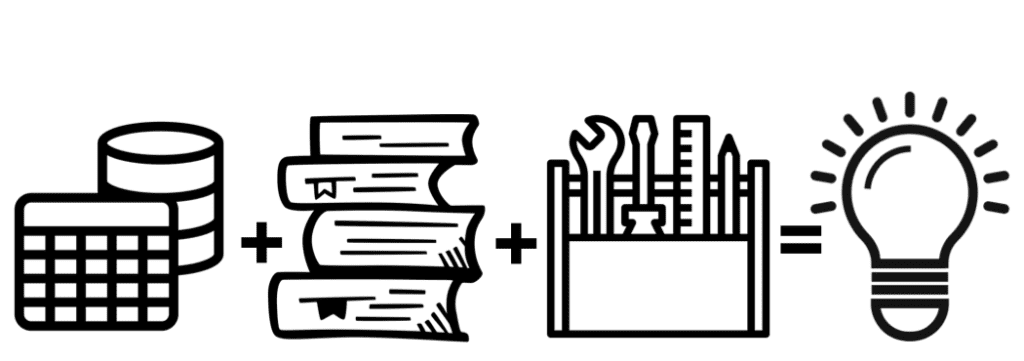

What I found compelling in these conversations is that using or advocating for Big Data is one thing, but making sense of it in the context of an established discipline to do science and scholarship is quite another. Integrating Big Data constructively takes far more than scaling up existing ways of conducting research. I have come to conceptualize “making sense of Big Data” as having three essential components: a grounded understanding of the data itself, a solid grasp of relevant disciplinary theory, and appropriate computational tools that bring theory and data together. Research that glosses over any of these elements will miss the mark, no matter how big the data or how fancy the tools or how beautiful the theory. On the other hand, bringing all these pieces to the table can be hard, very hard, a fact that has not always been recognized by either established disciplines or proponents of change.

First, knowing your data. Using any kind of social media data or administrative records or large digital corpora for scholarship requires an in-depth understanding of how that data got there in the first place and why. Now it may seem obvious that you won’t know what you’re looking at unless you know what you’re looking at, but that doesn’t make it any easier. Data collection is difficult enough in the best of cases that there are often strong disciplinary norms surrounding it: best practices in administering surveys, conducting interviews, annotating primary sources, observing chimpanzees, and even calibrating dual confocal microscopes. While a discipline’s best practices are rarely transferable to new sources of data, the norms underlying them exist for a reason. Throwing them away to use Big Data exposes a scholar to all the pitfalls that generations before them have worked to prevent. Knowing your data means adapting the norms of a discipline to the particulars of a large digital data set, and this requires real theoretical work. Unfortunately, this is often difficult to convey. Many of those working in a discipline are accustomed to following a field’s best practices or contributing to refinements of existing techniques, but extending disciplinary norms to new kinds of data collection necessarily starts at a more basic level. This means that expectations for this kind of contribution can be unattainably high without considerable finesse in framing.

Then, integrating relevant theory. Big Data lends itself to descriptive work and a-theoretical prediction, but this bumps up against the reality that stumbling upon something truly novel is quite rare in research. In most disciplines, scholars have been studying the relevant phenomena for decades, building up a deep descriptive lexicon and a nuanced understanding of causal processes. Assuming there would be something entirely missing from disciplinary consideration but easy to spot using Big Data betrays considerable hubris; disciplines are right to be skeptical of bold claims. Better for everyone if we acknowledge that Big Data is most likely to pick up on known phenomena, but also that observing these phenomena using new and different data still advances the discipline. The issue is that making a compelling case for this approach can be, again, quite difficult. It requires articulating how the particulars of the data give us new traction on existing theory within the discipline. Even when you’re lucky and the connections are straightforward—such as social network data to social network theory—it takes additional work to spell those connections out. Explaining such links also tends to demand more than a passing familiarity with the existing literature. When done well, the contribution ends up being almost more methodological, even when the substantive results would speak for themselves to an informed audience. Preferably, of course, even attempts to integrate relevant theory that fall short of this ideal would be recognized as contributions. In reality, for many fields, the bar can be high.

Finally, using appropriate computational tools. Knowing your data and integrating relevant theory can’t happen in practice without computational tools that can bring them together. To advance social network theory using social network data you need network analysis tools that help you measure relevant quantities. To study innovations in style using large corpora of literary works you need tools for distant reading that help you navigate relevant aspects. If you’re lucky then the appropriate tool has already been developed for a compatible purpose, but oftentimes the appropriate tool simply does not yet exist. The constraints of a new source of data under the guidance of relevant theory can immediately put a scholar outside of what’s possible with current off-the-shelf tools. Going forward then means either qualifying the scholarship accordingly or adapting the tools to suit. Both of these directions require a higher level of mastery than simply applying the tools ever would. Again, this makes interdisciplinary scholarship with new digital sources of data especially difficult both to do and to communicate.

Doing science and scholarship is never a simple endeavor to begin with, but making sense of Big Data in the context of an established discipline challenges a researcher on all three of these fronts at once. The budding fields of computational social science and digital humanities are taking this on, and that is tremendously exciting. But for those of us hoping to join in, it can also be downright daunting. Trainees need to learn the best practices, the theory, and the tools before they can master them well enough to produce publishable research. But it takes longer to learn, longer to master, and longer to publish when there is nothing you can take as given: not the data, not the theory, and not even the tools. Navigating this requires added stubbornness to push through the added challenges, and a measure of self-confidence, too, because there will always be those who have gotten further than you in any one direction. Altogether, unless you know where you are going, it can be very easy to get lost along the way.

This is why I have come to value so deeply the positive examples that the NULab brings together. Early on in my graduate education, NULab put a picture in my mind of what succeeding would look like—not just in my discipline but in any discipline! I saw how cool it can be when you successfully integrate Big Data with history and political science and sociology and literature and communications. Crucially, this was before I ran headlong into all the challenges I’ve described. Having several successful examples to refer back to gave me a strong sense of direction and lent me the subtle confidence that comes from trusting the process. The stubbornness, however, I probably brought to the table myself.

Carolina is a doctoral candidate in Network Science working with Prof. David Lazer. She is an NSF Graduate Research Fellow using her dissertation to develop network analysis tools for monetary transaction networks and modeling frameworks for cash-flow economics. She works extensively with collaborators in industry to apply her methods towards understanding real payment systems, such as by mapping mobile money in two developing countries. As a part of the Lazer Lab, Carolina is working to finalize several projects in computational social science that center around making digital trace and administrative data useful to researchers. Prior to joining the Network Science PhD Program, Carolina earned a B.S. in Physics and a B.A. in International Relations from Lehigh University. She has roots in Sweden and New Mexico.