TikTok (ByteDance Ltd.)

Social Media Content Labeling Archive

As part of the Ethics of Content Labeling Project, we present a comprehensive overview of social media platform labeling strategies for moderating user-generated content. We examine how visual, textual, and user-interface elements have evolved as technology companies have attempted to inform users about misinformation, harmful content, and more.

Authors: Jessica Montgomery Polny, Graduate Researcher; Prof. John P. Wihbey, Ethics Institute/College of Arts, Media and Design

Last Updated: June 3, 2021

TikTok Overview

TikTok began as an application exclusively available in China, owned by the technology company ByteDance. A TikTok blog post in August of 2018 announced that TikTok had merged with Musical.ly, a lip-synching music app popular in the United States, and the app became globally available for download. The TikTok About page characterizes the platform as a “destination for short-form mobile video,” whose mission is to “inspire creativity and joy.”

Users can share videos up to one minute in length, which are displayed on a public feed. These feeds are curated for each user, with recommended videos based on their interactions, such as searched hashtags and other videos they have liked and commented on. TikTok videos can be edited with filters, stickers, special cinematic effects, and “green screen” shared displays, and they can use a sound from the platform’s catalog. These sounds can be music or dialogue clips chosen by the platform, or clips saved and reused from another user’s video. Users who have at least 1,000 followers can also stream live videos, which viewers can tune in to and interact with through a chat feed.

TikTok has used labels to indicate which filters were in use, to identify an advertisement, or to provide a warning for sensitive content. The platform’s primary strategy for misinformation has been to provide alternative, authoritative information by implementing platform-curated pages and banners that provide context. Context labels became more prevalent in response to real-world events, especially the U.S. 2020 elections and COVID-19. Most of these labels do not prevent users from interacting with harmful content.

The following analysis is based on publicly available, open-source information about the platform. The analysis only relates to the nature and form of the moderation, and we do not here render judgments on several key issues, such as: speed of labeling response (i.e., how many people see the content before it is labeled); the relative success or failure of automated detection and application (false negatives and false positives by learning algorithms); and actual efficacy with regard to users (change of user behavior and/or belief).

TikTok Content Labeling

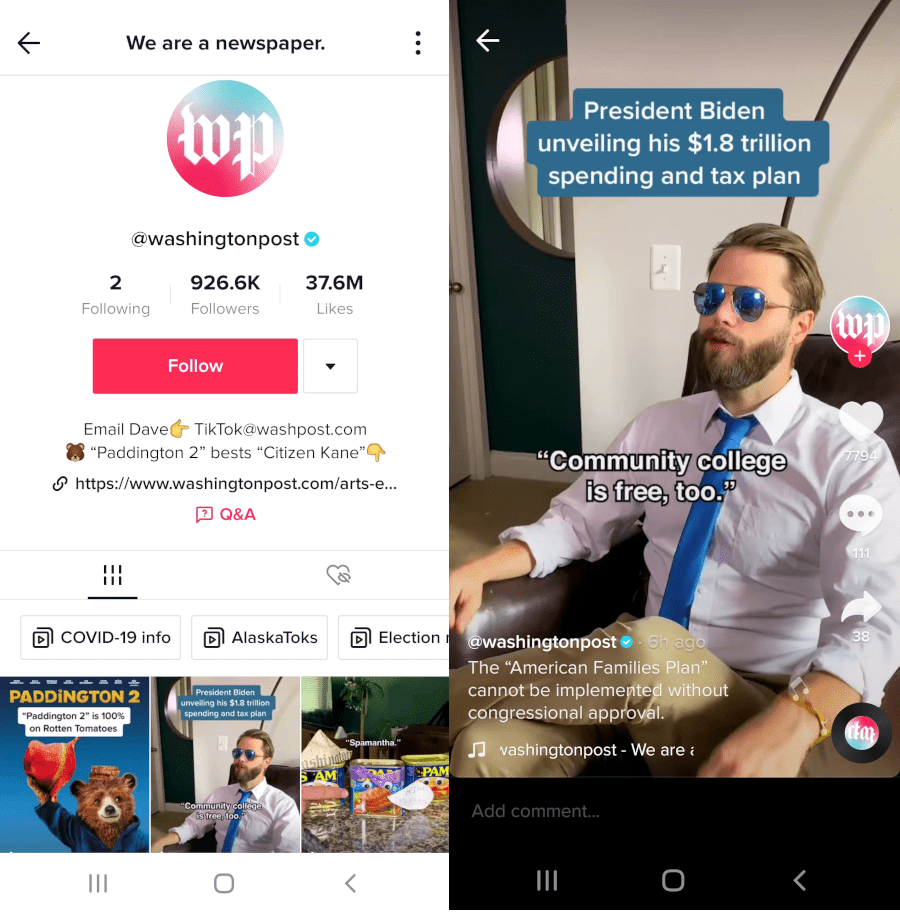

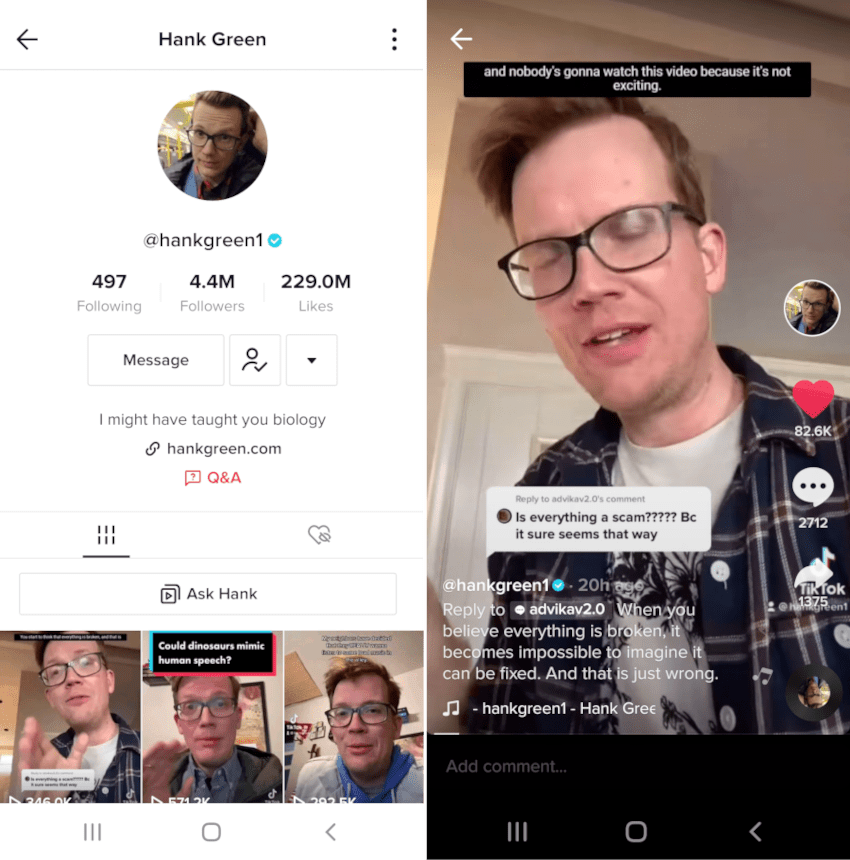

One year after its global release, TikTok published a newsroom article to help users identify authentic accounts. Verification required accounts to meet certain standards, such as having a high number of followers and posting curated content regularly. A verified account is indicated by a blue badge with a checkmark next to the username, providing “an easy way for notable figures to let users know they’re seeing authentic content,” according to the newsroom post. According to the platform guidelines on integrity and authenticity, accounts that imitate a notable person or public figure to mislead users or promote spam will not be verified and may be subject to removal.

TikTok has made some efforts to provide users with authoritative information on the app, such as “Be Informed,” a video series on media literacy hosted in the summer of 2020. The series aimed to help users become more media literate; TikTok defined media literacy as “having the ability to access, analyze, evaluate, create, and act using all forms of communication.”

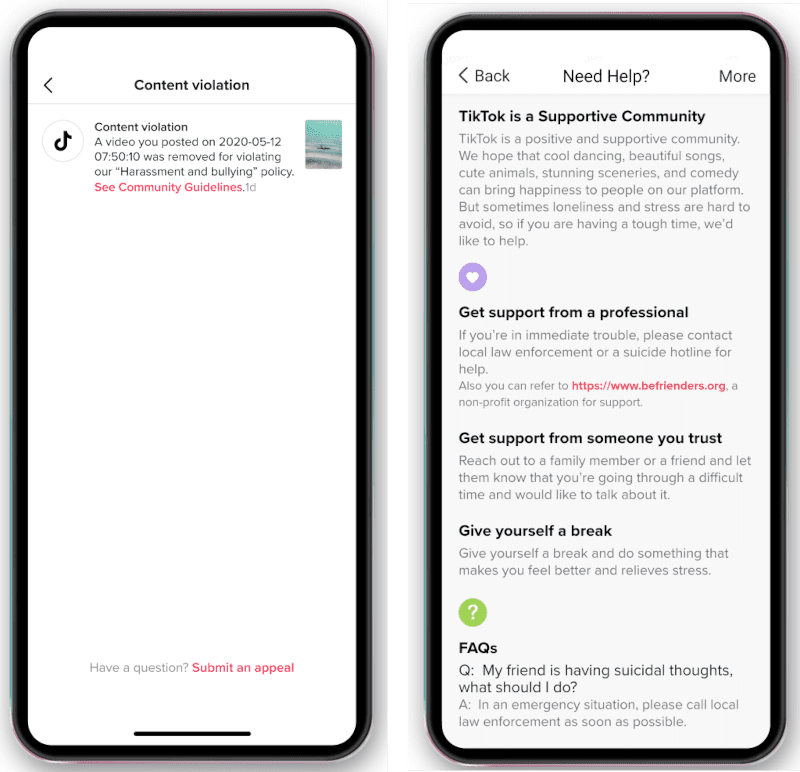

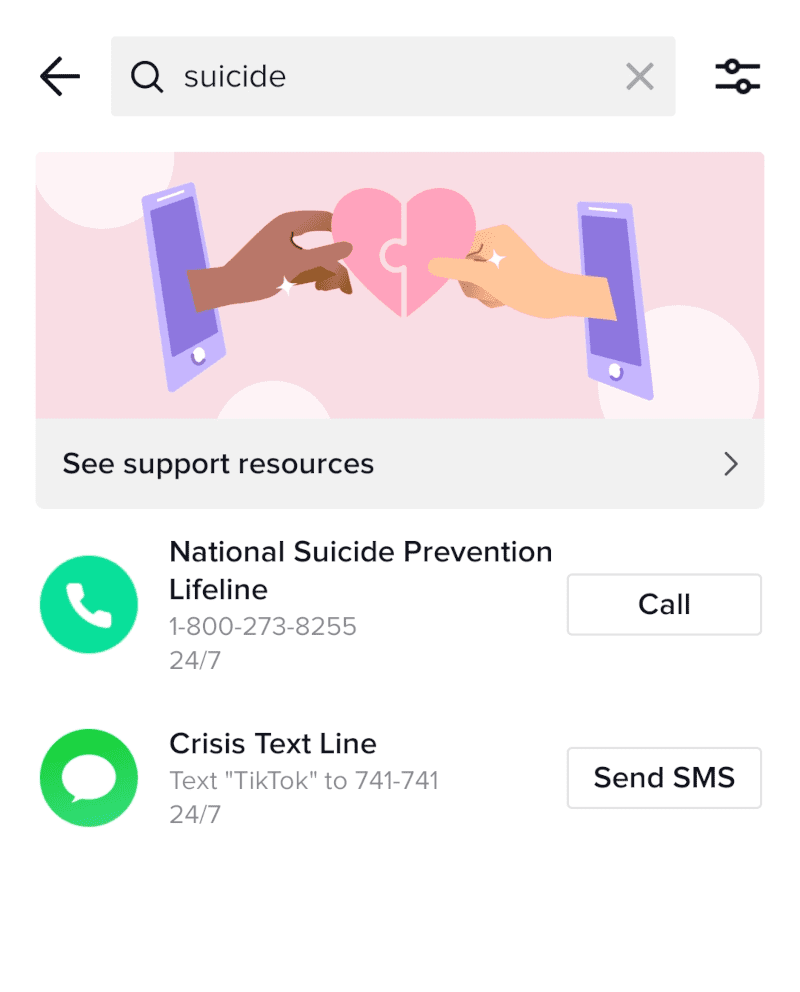

Most of TikTok’s content moderation efforts focused on removal, and a newsroom post in October 2020 announced new features for content removal and reporting. Users viewing an account page would see a notification that a video had been removed. The creator of the removed content would receive a direct notification explaining which policy had been violated, along with a link to submit an appeal. Further, when a removed video indicated self-harm or suicide, the notification to the content creator would also provide links to mental health resources.

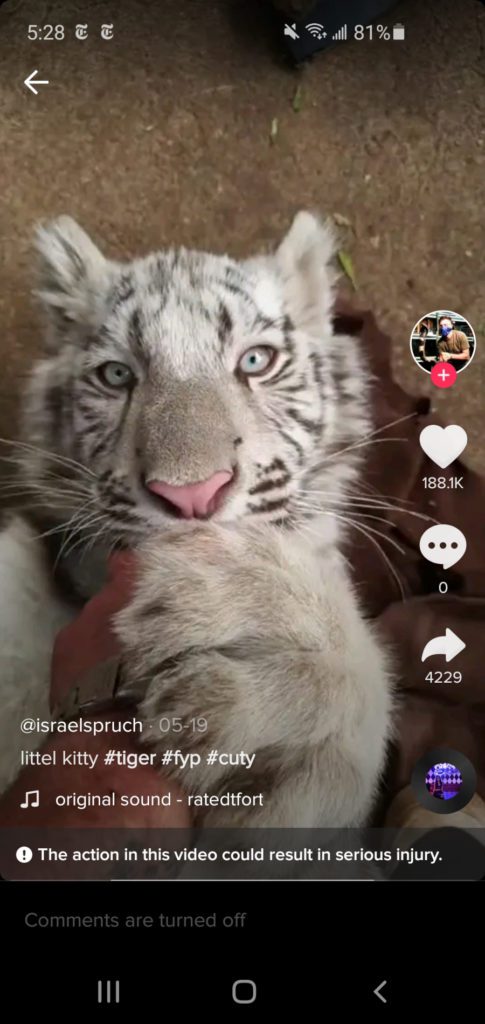

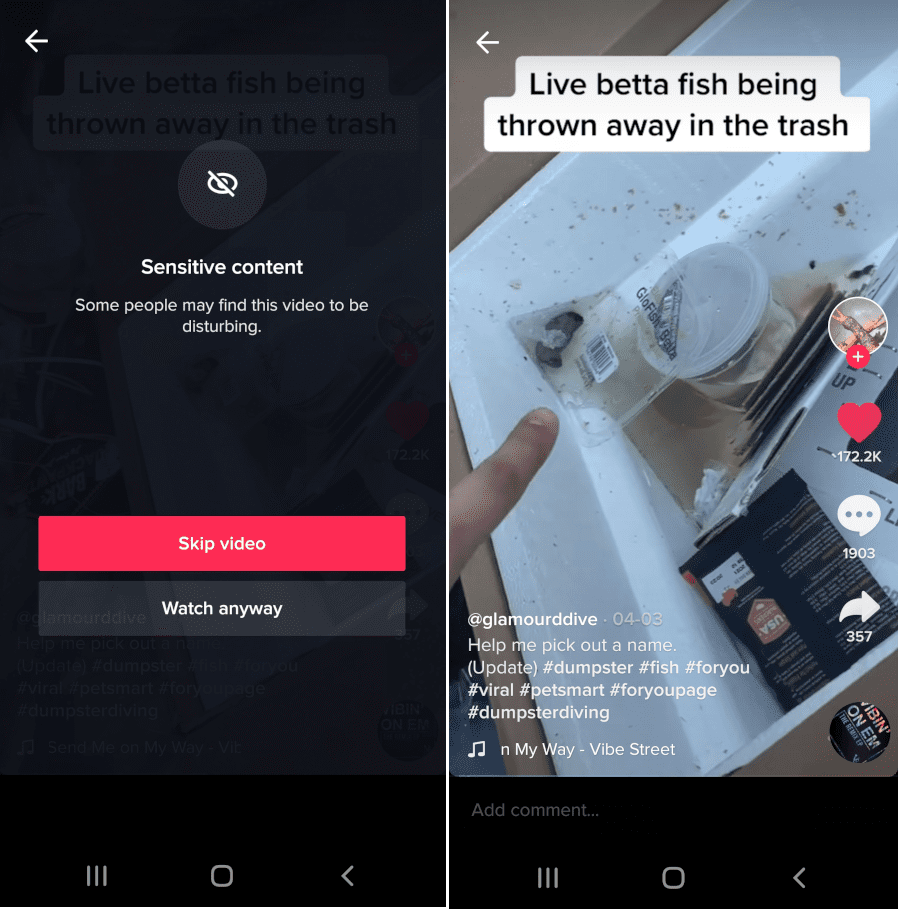

TikTok announced in a December 2020 newsroom post that it would update the platform’s community guidelines. New labels appeared on videos showing what TikTok described as “dangerous acts or challenges,” and interstitials were added for content that was “graphic or distressing” and might be sensitive for some viewers. According to the community guidelines, these labels were applied at the platform’s discretion.

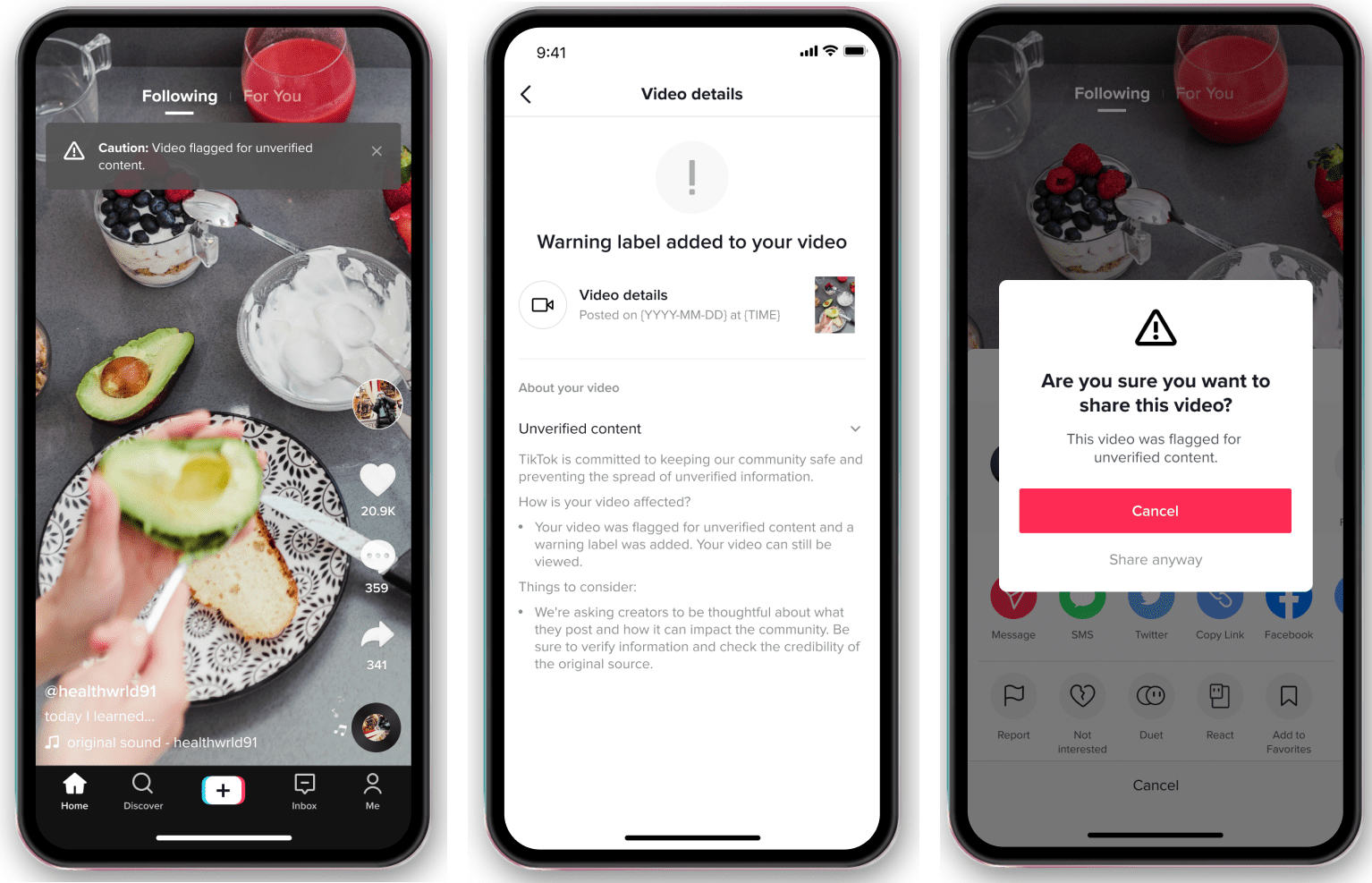

TikTok relied on user reports to flag harmful content, and it applied labels with contextual information to certain topics. However, content that is still under review can cause harm if it is shared in the meantime. To address this, in February 2021 TikTok introduced sharing prompts that warned users who attempted to share unverified content.

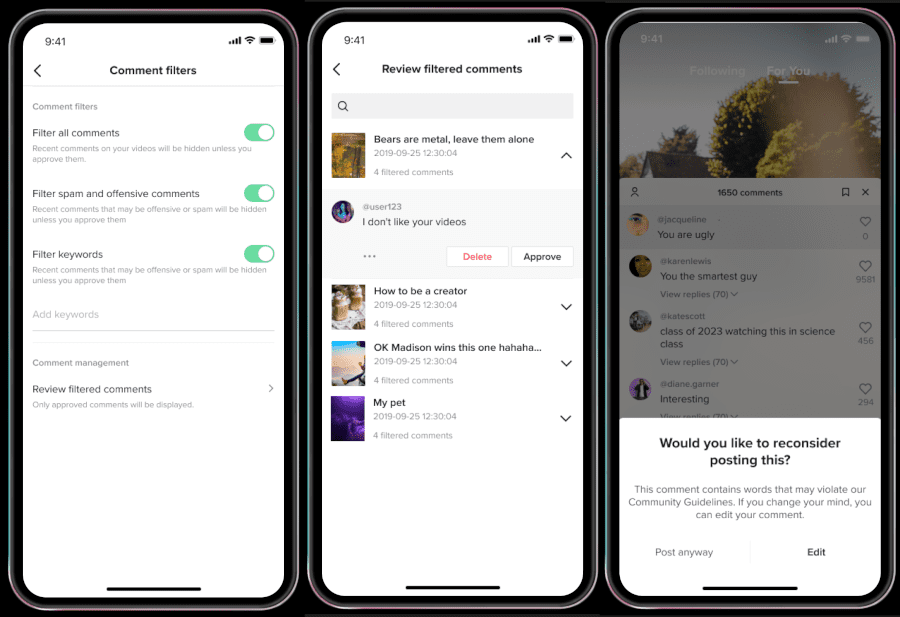

In an attempt to moderate hate speech and offensive content in the comments section of videos, TikTok introduced a new commenting prompt that asks users to “consider before you comment.”

TikTok and the U.S. 2020 Elections

In preparation for the upcoming presidential election, TikTok updated its policies on political ads in October of 2019. Political ads were prohibited across the platform, including “paid ads that promote or oppose a candidate, current leader, political party or group, or issue at the federal, state, or local level – including election-related ads, advocacy ads, or issue ads.” TikTok reasoned that advertisements on political and social issues disrupt its mission of entertainment and creativity. However, no rules prohibited political content posted by ordinary users, unless it violated the community guidelines. Advertisements in permitted categories were required to display a transparency icon indicating sponsored content.

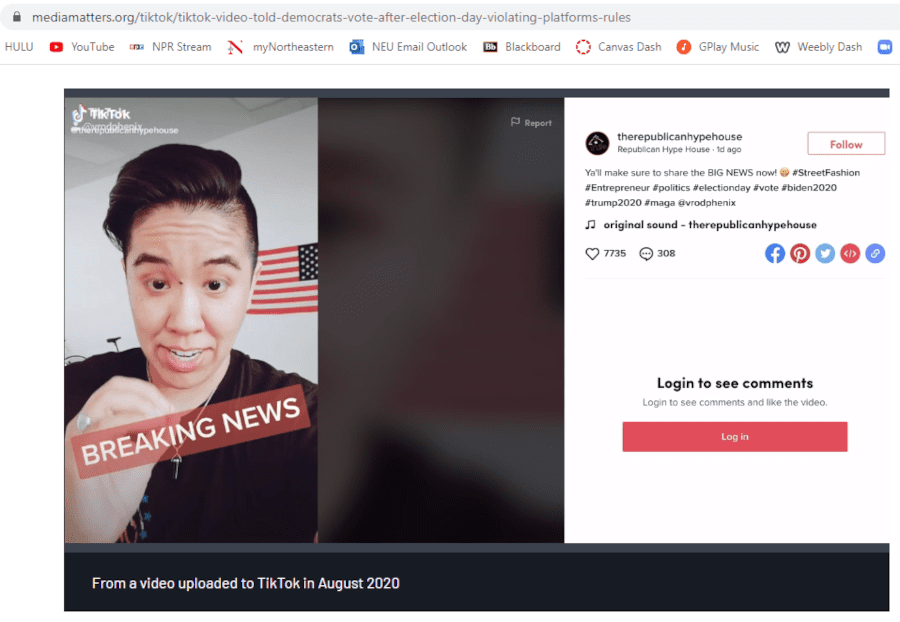

In August of 2020, the TikTok Newsroom published an article on how the platform would respond to the upcoming presidential election. This article, “Combating misinformation and election interference on TikTok”, outlined three measures (quoted directly here):

- We’re updating our policies on misleading content to provide better clarity on what is and isn’t allowed on TikTok.

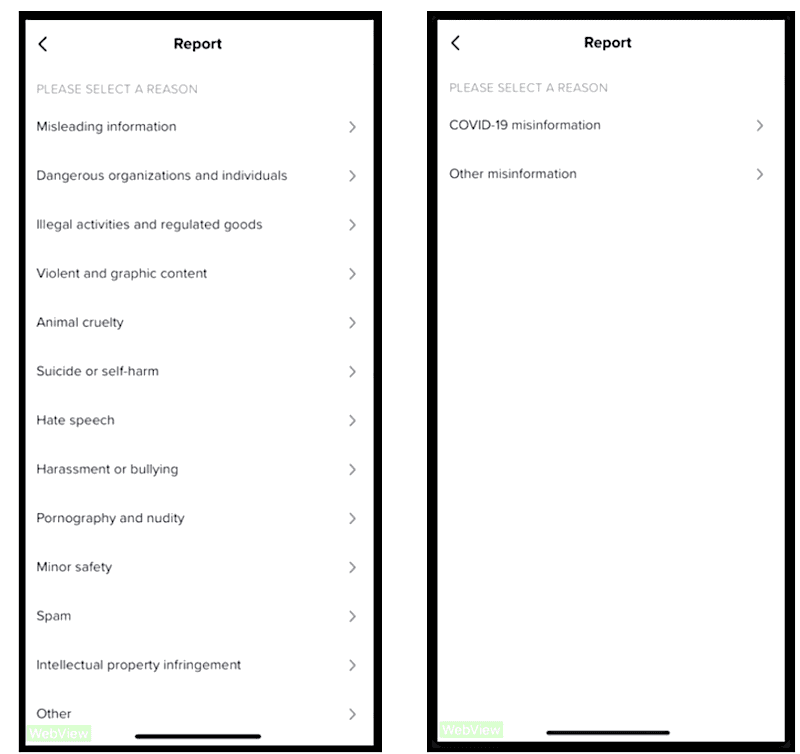

- We’re broadening our fact-checking partnerships to help verify election-related misinformation, and adding an in-app reporting option for election misinformation.

- We’re working with experts including the U.S. Department of Homeland Security to protect against foreign influence on our platform.”

In January 2020, the TikTok newsroom announced its efforts against political misinformation on the platform in response to the U.S. 2020 elections. First, to increase integrity, the platform removed content “meant to incite fear, hate, or prejudice, and that which may harm an individual’s health or wider public safety.” Second, with new reporting options, users could report “misleading information,” a category added alongside the existing categories for offensive content. Third, TikTok supported “healthy community interactions” by attaching in-app notices with election and civic information to related hashtags. These notices linked users to a platform-curated page of local election information and reiterated the community guidelines on political misinformation.

In February of 2020, the TikTok Newsroom also detailed new misinformation efforts in “An update on TikTok’s efforts in the US”. Hashtags were central to content labeling on TikTok: videos carrying hashtags on certain topics received contextual information banners. Notices were applied to videos on specified topics, including the U.S. 2020 elections, and provided information from the community guidelines on “misinformation that could cause harm to our community.” For the elections, the notices promoted the accounts of political officials and redirected users to state election websites and civic engagement directories.

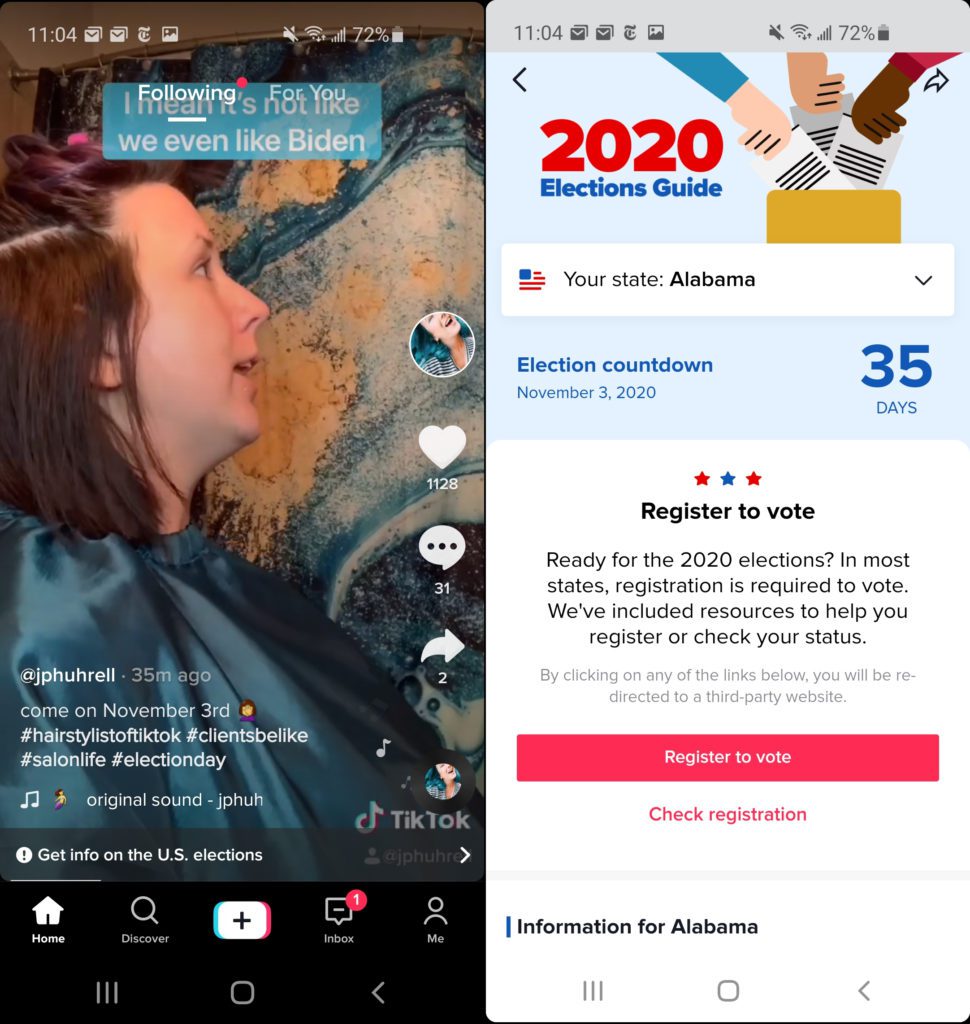

When the election information banners first launched in January, their link sent users to promoted TikTok content about the election. Then, to provide more authoritative information on elections and civic engagement, TikTok launched an in-app election guide in September of 2020. In this election guide, users could view their voter registration status, register to vote, and browse promoted content with hashtags related to political topics. To encourage users to visit this page, TikTok placed a banner at the bottom of videos “relating to the elections and on videos from verified political accounts.”

TikTok and COVID-19

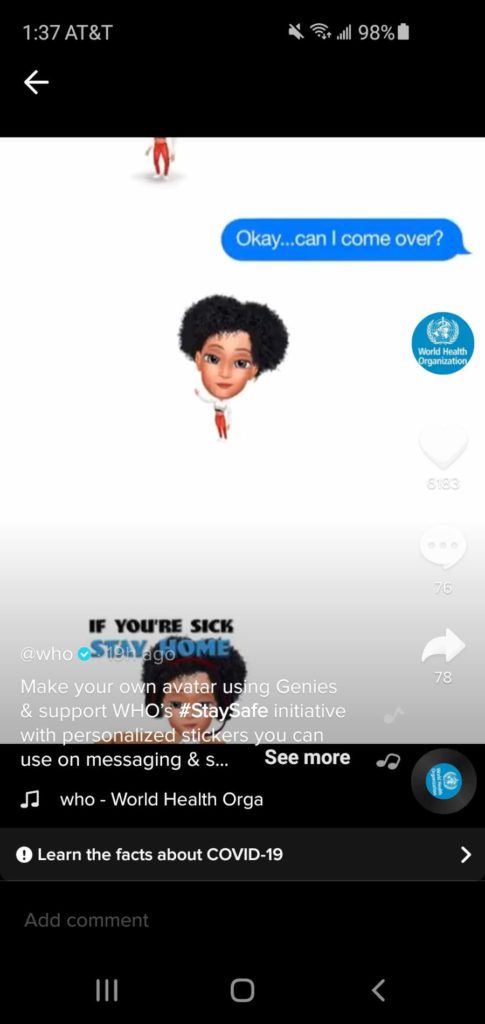

In response to COVID-19, the February 2020 TikTok Newsroom post “An update on TikTok’s efforts in the US” detailed updated misinformation efforts, including contextual information banners on COVID-19 content aimed at health misinformation that could cause harm. TikTok promoted videos from the World Health Organization and provided a link to the platform’s official page on health and safety.

In an attempt to increase its content moderation capacity for COVID-19, TikTok created the Content Advisory Council in March of 2020. The council’s aim was to address policy and moderation on “critical topics around platform integrity, including policies against misinformation and election interference.” The council was composed of experts in technology, policy, and content regulation, who would guide TikTok’s efforts in reporting, removing, and labeling content.

By 2020, the app already supported user reporting, with categories added for particularly concerning topics. According to an April newsroom post on COVID-19, users could specifically select “COVID-19 Misinformation” when flagging content. Reports in this category were said to be prioritized for internal review and sent to third-party fact-checking organizations for verification.

TikTok Research Observations

The Integrity and Authenticity section of the TikTok community guidelines states that “content that is intended to deceive or mislead any of our community members endangers our trust-based community. We do not allow such content on our platform. This includes activities such as spamming, impersonation, and disinformation campaigns.” The platform policies do not clarify what is considered “misleading,” for example whether a satirical or critical video is treated differently from a misleading hoax. These three broad categories (spamming, impersonation, and disinformation campaigns) lack correspondingly detailed evaluation policies. While the platform’s guidelines on misinformation prohibit “misinformation that causes harm to individuals,” they provide no criteria for what makes content particularly harmful.

When implementing information banners, TikTok relies on the hashtag system to direct content moderation. This means that a user who posts election misinformation may not be flagged if their account is not verified as belonging to a political figure and the video does not use an election-related hashtag. This purview is narrow, given that TikTok content consists of many components beyond hashtags, such as video, audio, effects, comments, and the algorithmic calculations of interactions. TikTok’s algorithms are designed for virality on the public feed, rather than for interpersonal information sharing as on other social media platforms.

In an attempt to maintain integrity on the platform, TikTok initially implemented information banners in response to COVID-19 and the U.S. 2020 elections. While the platform created policy in response to the virality of these real-world topics, content labeling and direct moderation against misinformation had apparently not been considered in earlier stages of platform development. The banners are designed to promote reliable information rather than to erect barriers against misinformation. These labels would create more effective friction if all banners on the platform were instead interstitials, as with the sensitive media content warnings.