WhatsApp (Facebook Inc.)

The Evolution of Social Media Content Labeling: An Online Archive

As part of the Ethics of Content Labeling Project, we present a comprehensive overview of social media platform labeling strategies for moderating user-generated content. We examine how visual, textual, and user-interface elements have evolved as technology companies have attempted to inform users on misinformation, harmful content, and more.

Authors: Jessica Montgomery Polny, Graduate Researcher; Prof. John P. Wihbey, Ethics Institute/College of Arts, Media and Design

Last Updated: June 3, 2021

WhatsApp Overview

In 2014, Facebook announced its acquisition of WhatsApp, a popular global messaging application. It is a free platform that enables text messaging, phone calls, and video calls, as well as sharing other media such as voice clips, videos, and photos. While an internet connection or mobile data is required to operate the app, there are no restrictions on the number of calls or messages.

Unlike on the other, more public social networking platforms, content shared on WhatsApp is protected by end-to-end encryption. This means that only the users in communication with one another have access to the content shared, and that the content is not visible to the platform for any sort of content moderation.

The lack of content moderation by WhatsApp limits the capacity for labeling misinformation or harmful content, and for regulating information on the platform generally. The platform must rely mostly on user reports and third-party fact-checks. Most of the content moderation implementations on WhatsApp do not involve labeling specific content, but rather identify a risk or notify a user of a certain messaging function. Overall, the rise of private messaging apps presents challenges to content moderation efforts.

WhatsApp Content Moderation

As WhatsApp’s content is end-to-end encrypted private messaging, most of the examples here are drawn from the WhatsApp Blog and FAQ center.

As a subsidiary company of Facebook Inc., WhatsApp provided fact-check resources through the International Fact-Checking Network (IFCN) so that users could text fact-checkers to verify information or report false news. WhatsApp also provided users with guidelines and safety tips for misinformation on the platform, including how to report false news and insights on media literacy.

WhatsApp does not place fact-check labels on messages in its app, due to the privacy measures for end-to-end encrypted content, but it does apply labels to certain messaging functions. There are general warning labels for links that may be a hoax or spam, and prompts for users to Google search their links, but there are no evaluative labels for the contents of the links themselves. The platform places more focus on moderating functions, such as restricting forwarding capabilities, than on reviewing message content. Labels regarding basic functions also appear, such as one indicating when a message has been forwarded.

As a private messaging application, WhatsApp has content moderation and labeling practices that differ greatly from those of the other Facebook Inc. social media platforms. Policies and guidelines for WhatsApp focus on informing users how to identify content that is a hoax, spam, or another type of misinformation. However, as mentioned, the end-to-end encryption upheld for app users limits the platform’s ability to review content, unless it is either in viral circulation in public groups or reported by individual users.

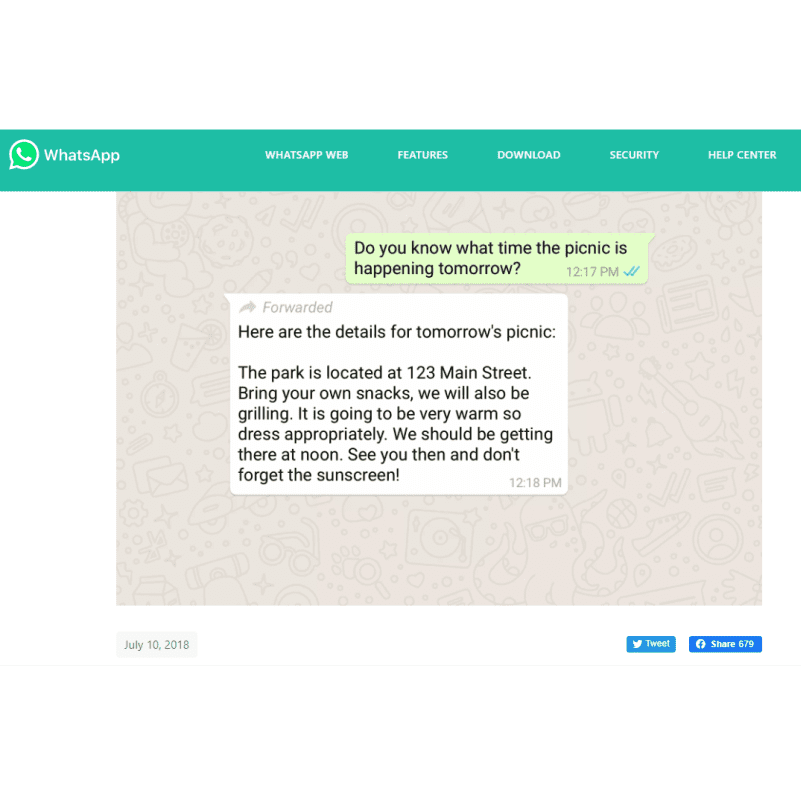

The most common label for WhatsApp messages was the forwarding label, introduced in July 2018 along with a general application update. Messages that had been forwarded were labeled within the message box, in the top left-hand corner in grey text. The label included both an icon of a leaning arrow and simple text with the term “Forwarded.”

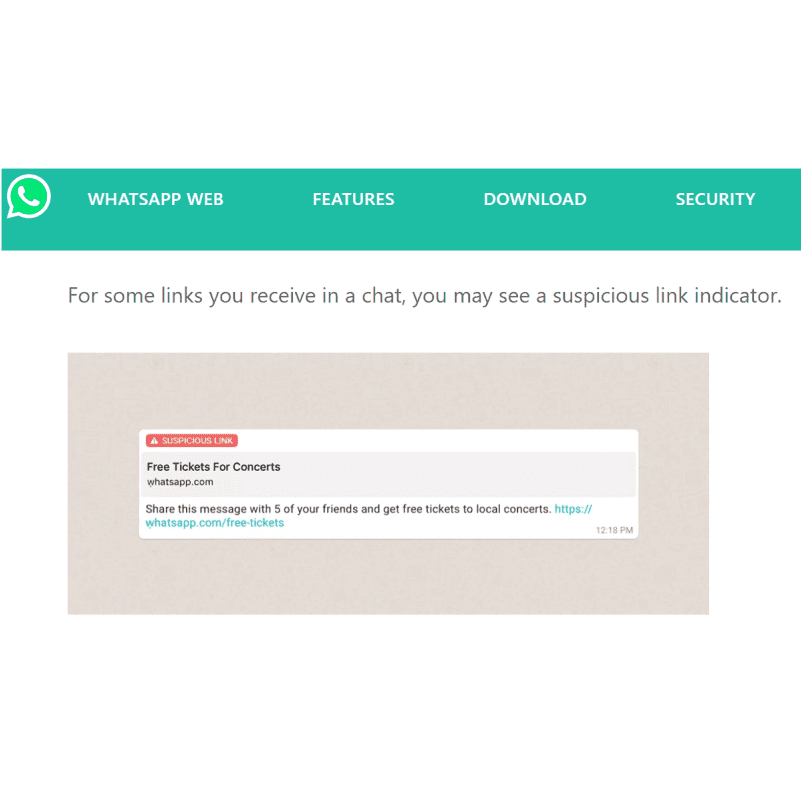

WhatsApp provides labels that indicate scams and hoaxes, which are often accompanied by misinformation or harmful content. In September of 2018, WhatsApp introduced the suspicious link label. The bright red in-message label indicates links sent within the app that may lead to an illegitimate or malicious website. However, the link would still be highlighted blue within the message and available for click-through.

In an attempt to increase friction against misinformation from unverified sources, in January 2019 WhatsApp also limited message forwarding to five chats at a time. While these forwarding labels and modifications were a form of content regulation by the platform, they were not an active evaluation of misinformation so much as preventative functions that increased friction on messaging.

WhatsApp, U.S. 2020 Elections and COVID-19

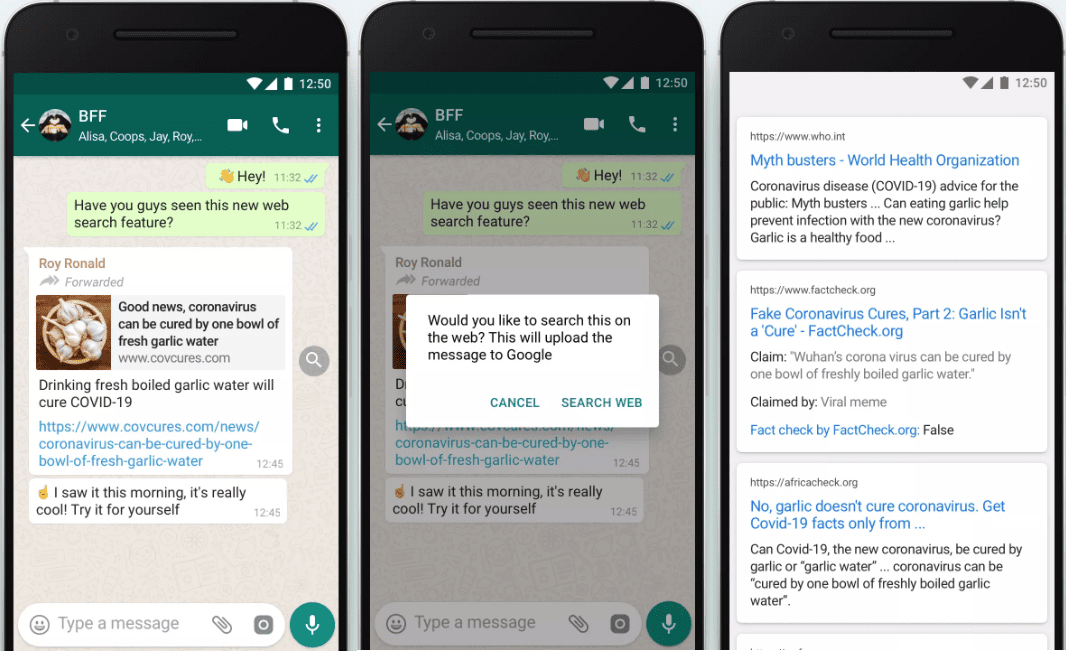

The criteria presented by WhatsApp for labels on forwarding and links as outlined in a company post, “How to use WhatsApp responsibly,” largely placed both content evaluation and responsibility on app users. More resources to encourage users to seek out authoritative sources became available in 2020, as concerns rose over misinformation regarding the U.S. elections and COVID-19. To encourage better user practices for information verification, WhatsApp added an in-message “search the web” feature in August of 2020. This click-through pop-up notice had users search a link on Google, in an attempt to help verify legitimacy and offer publisher context, rather than opening the link directly into a browser.

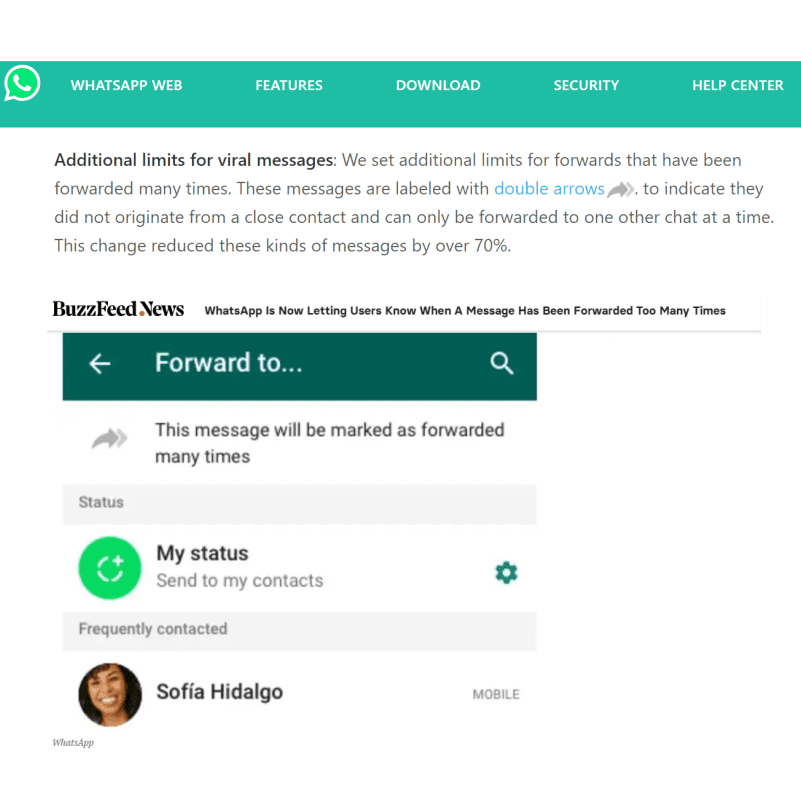

To prepare for the abundance of content regarding the 2020 presidential elections, WhatsApp also added the “Double Arrow” label for forwarding moderation. In the top-left corner of a message in grey text, the double-arrow icon appeared with plain text “Forwarded.” This icon was created to inform users that content has been forwarded multiple times — that the message does not originate from the user’s contacts — and therefore could potentially carry misinformation due to its viral nature.

In response to COVID-19, WhatsApp announced in April of 2020 that forwarding was further limited to one contact at a time when a message carried the double-arrow label. WhatsApp also provided internal resources for information through its Coronavirus Information Hub and its fact-check messaging service. The responsibility of checking information still rested on user discretion and reporting, as opposed to content labels or third-party fact-check-based moderation implemented by the platform. For instance, a list of criteria for identifying rumors and fake news was added to WhatsApp in response to COVID-19, but this resource was intended for users and not as a set of platform moderation guidelines.
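The forwarding rules described above can be sketched as a small piece of logic that looks only at how often a message has been forwarded, never at what it says. This is an illustrative sketch and not WhatsApp's implementation: the `Message` class, the function names, and in particular the `HIGHLY_FORWARDED_THRESHOLD` value are assumptions, since the source does not state how many forwards trigger the double-arrow label.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumption: the source does not give the number of forwards that
# triggers the double-arrow label; 5 is a placeholder for illustration.
HIGHLY_FORWARDED_THRESHOLD = 5
MAX_CHATS_PER_FORWARD = 5        # general limit described for January 2019
MAX_CHATS_HIGHLY_FORWARDED = 1   # tighter limit described for April 2020


@dataclass(frozen=True)
class Message:
    """Hypothetical message record; only the forward counter is inspected."""
    text: str
    forward_count: int = 0


def forwarding_label(message: Message) -> Optional[str]:
    """Pick a label from the forward counter alone, not from the content."""
    if message.forward_count == 0:
        return None
    if message.forward_count >= HIGHLY_FORWARDED_THRESHOLD:
        return "double_arrow"
    return "forwarded"


def max_forward_targets(message: Message) -> int:
    """Return how many chats this message may be forwarded to at once."""
    if forwarding_label(message) == "double_arrow":
        return MAX_CHATS_HIGHLY_FORWARDED
    return MAX_CHATS_PER_FORWARD


def forward(message: Message, chats: List[str]) -> Message:
    """Return the forwarded copy, or raise if the chat limit is exceeded."""
    limit = max_forward_targets(message)
    if len(chats) > limit:
        raise ValueError(f"can forward to at most {limit} chat(s) at once")
    return Message(message.text, message.forward_count + 1)
```

Because every decision in this sketch depends on the counter rather than the text, it is compatible with messages the platform cannot read, which is why friction of this kind remains available where content-specific labels are not.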

WhatsApp Research Observations

WhatsApp has an entirely different content moderation approach from other Facebook Inc. platforms, as its primary function as a private messaging application is different from the functions of other, more openly public social networking platforms. This is why the content-specific labeling taxonomy of Facebook and Instagram offers limited support for any would-be moderation efforts in WhatsApp’s private messaging functions.

Although WhatsApp implemented no substantive content labeling and evaluation strategies compared to other Facebook Inc. platforms, it attempted to provide context for users by having the forwarding and spam warnings embedded into the messages themselves. This is an attempt to provide an immediate indicator for potentially harmful content. The platform’s friction strategy, such as it is, was increased in response to COVID-19 and the U.S. 2020 elections.

Resources to access reliable information were made available on WhatsApp; the platform also added functions for user reporting. However, again, these were not directly providing friction against harmful misinformation for individual users. Labels that indicate spam and faulty links may have protected users from scams and some types of misinformation, but not necessarily other forms of content that can be shared without a link. Fake messages and doctored images, for instance, can be shared without a link extension. And while forwarding limits and labeling indicators can help contextualize potentially harmful content, they do not provide enough friction to stop large groups from gathering and sending out content from a shared source.

In sum, it remains a challenge both to implement content moderation on this application and to evaluate the relative successes and failures of WhatsApp in this regard. Because it is focused on private communication as opposed to having a public feed available for fact-check review, WhatsApp highlights the tradeoffs presented by end-to-end encrypted messaging.